2. Bernstein SL, Aronsky D, Duseja R, Epstein S, Handel D, Hwang U, et al. The effect of emergency department crowding on clinically oriented outcomes. Acad Emerg Med. 2009; 16(1):1–10. PMID: 19007346.

3. Stang AS, Crotts J, Johnson DW, Hartling L, Guttmann A. Crowding measures associated with the quality of emergency department care: a systematic review. Acad Emerg Med. 2015; 22(6):643–656. PMID: 25996053.

4. Park J, Lim T. Korean Triage and Acuity Scale (KTAS). J Korean Soc Emerg Med. 2017; 28(6):547–551.

5. Ryu JH, Min MK, Lee DS, Yeom SR, Lee SH, Wang IJ, et al. Changes in relative importance of the 5-level triage system, Korean Triage and Acuity Scale, for the disposition of emergency patients induced by forced reduction in its level number: a multi-center registry based retrospective cohort study. J Korean Med Sci. 2019; 34(14):e114. PMID: 30977315.

6. Kwon H, Kim YJ, Jo YH, Lee JH, Lee JH, Kim J, et al. The Korean Triage and Acuity Scale: associations with admission, disposition, mortality and length of stay in the emergency department. Int J Qual Health Care. 2019; 31(6):449–455. PMID: 30165654.

7. Park JB, Lee J, Kim YJ, Lee JH, Lim TH. Reliability of Korean Triage and Acuity Scale: interrater agreement between two experienced nurses by real-time triage and analysis of influencing factors to disagreement of triage levels. J Korean Med Sci. 2019; 34(28):e189. PMID: 31327176.

8. Moon SH, Shim JL, Park KS, Park CS. Triage accuracy and causes of mistriage using the Korean Triage and Acuity Scale. PLoS One. 2019; 14(9):e0216972. PMID: 31490937.

9. Kim JH, Kim JW, Kim SY, Hong DY, Park SO, Baek KJ, et al. Validation of the Korean Triage and Acuity Scale compare to triage by emergency severity index for emergency adult patient: preliminary study in a tertiary hospital emergency medical center. J Korean Soc Emerg Med. 2016; 27(5):436–441.

10. Kim JH, Hong JS, Park HJ. Prospects of deep learning for medical imaging. Precis Future Med. 2018; 2(2):37–52.

11. Hong WS, Haimovich AD, Taylor RA. Predicting hospital admission at emergency department triage using machine learning. PLoS One. 2018; 13(7):e0201016. PMID: 30028888.

12. Raita Y, Goto T, Faridi MK, Brown DF, Camargo CA Jr, Hasegawa K. Emergency department triage prediction of clinical outcomes using machine learning models. Crit Care. 2019; 23(1):64. PMID: 30795786.

13. Goto T, Camargo CA Jr, Faridi MK, Freishtat RJ, Hasegawa K. Machine learning based prediction of clinical outcomes for children during emergency department triage. JAMA Netw Open. 2019; 2(1):e186937. PMID: 30646206.

14. Patel SJ, Chamberlain DB, Chamberlain JM. A machine learning approach to predicting need for hospitalization for pediatric asthma exacerbation at the time of emergency department triage. Acad Emerg Med. 2018; 25(12):1463–1470. PMID: 30382605.

15. Choi SW, Ko T, Hong KJ, Kim KH. Machine learning-based prediction of Korean Triage and Acuity Scale level in emergency department patients. Healthc Inform Res. 2019; 25(4):305–312. PMID: 31777674.

16. Horng S, Sontag DA, Halpern Y, Jernite Y, Shapiro NI, Nathanson LA. Creating an automated trigger for sepsis clinical decision support at emergency department triage using machine learning. PLoS One. 2017; 12(4):e0174708. PMID: 28384212.

17. Lee KS, Song IS, Kim ES, Ahn KH. Determinants of spontaneous preterm labor and birth including gastroesophageal reflux disease and periodontitis. J Korean Med Sci. 2020; 35(14):e105. PMID: 32281316.

18. Lee KS, Ahn KH. Artificial neural network analysis of spontaneous preterm labor and birth and its major determinants. J Korean Med Sci. 2019; 34(16):e128. PMID: 31020816.

19. Fisher A, Rudin C, Dominici F. All models are wrong, but many are useful: learning a variable's importance by studying an entire class of prediction models simultaneously. J Mach Learn Res. 2019; 20(177):1–81.

20. Alabi RO, Elmusrati M, Sawazaki-Calone I, Kowalski LP, Haglund C, Coletta RD, et al. Comparison of supervised machine learning classification techniques in prediction of locoregional recurrences in early oral tongue cancer. Int J Med Inform. 2020; 136:104068. PMID: 31923822.

21. Shew M, New J, Bur AM. Machine learning to predict delays in adjuvant radiation following surgery for head and neck cancer. Otolaryngol Head Neck Surg. 2019; 160(6):1058–1064. PMID: 30691352.

22. Karadaghy OA, Shew M, New J, Bur AM. Development and assessment of a machine learning model to help predict survival among patients with oral squamous cell carcinoma. JAMA Otolaryngol Head Neck Surg. 2019; 145(12):1115–1120. PMID: 31045212.

23. Rajpurkar P, Yang J, Dass N, Vale V, Keller AS, Irvin J, et al. Evaluation of a machine learning model based on pretreatment symptoms and electroencephalographic features to predict outcomes of antidepressant treatment in adults with depression: a prespecified secondary analysis of a randomized clinical trial. JAMA Netw Open. 2020; 3(6):e206653. PMID: 32568399.

24. Lee KS. Research about chief complaint and principal diagnosis of patients who visited the university hospital emergency room. J Digit Converg. 2012; 10(10):347–352.

25. Dietterich TG. Approximate statistical tests for comparing supervised classification learning algorithms. Neural Comput. 1998; 10(7):1895–1923. PMID: 9744903.

26. Devlin J, Chang MW, Lee K, Toutanova K. BERT: pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 17th Annual Conference of the North American Chapter of the Association for Computational Linguistics; Stroudsburg, PA, USA: Association for Computational Linguistics;2019. p. 4171–4186.

27. Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention is all you need. In: Proceedings of the 31st International Conference on Neural Information Processing Systems; 2017. p. 6000–6010.

28. Moh'd A, Mesleh A. Chi square feature extraction based SVMs Arabic language text categorization system. J Comput Sci. 2007; 3(6):430–435.

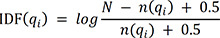

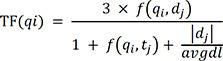

29. Whissell JS, Clarke CL. Improving document clustering using Okapi BM25 feature weighting. Inf Retr Boston. 2011; 14(5):466–487.

30. Lee YH, Lee SB. A research on enhancement of text categorization performance by using Okapi BM25 word weight method. J Korea Acad Ind Coop Soc. 2010; 11(12):5089–5096.

31. Powers DMW. Evaluation: from precision, recall and F-measure to ROC, informedness, markedness & correlation. J Mach Learn Technol. 2011; 2(1):37–63.

32. Cortes C, Mohri M. Confidence intervals for the area under the ROC curve. In: Saul L, Weiss Y, Bottou L, editors. Advances in Neural Information Processing Systems 17 (NIPS 2004). Cambridge, MA, USA: MIT Press;2005. p. 305–312.

33. DeLong ER, DeLong DM, Clarke-Pearson DL. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics. 1988; 44(3):837–845. PMID: 3203132.

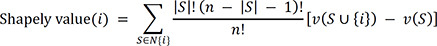

34. Shapley LS. A value for n-person games. In: Contributions to the Theory of Games. Princeton, NJ, USA: Princeton University Press;1953.

35. Lundberg SM, Lee SI. A unified approach to interpreting model predictions. In: Guyon I, Luxburg UV, Bengio S, Wallach H, Fergus R, Vishwanathan S, editors. Advances in Neural Information Processing Systems 30 (NIPS 2017). Cambridge, MA, USA: MIT Press;2017. p. 4765–4774.

36. van der Walt S, Colbert SC, Varoquaux G. The NumPy array: a structure for efficient numerical computation. Comput Sci Eng. 2011; 13(2):22–30.

37. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: machine learning in Python. J Mach Learn Res. 2011; 12:2825–2830.

38. Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, et al. PyTorch: an imperative style, high-performance deep learning library. arXiv. 2019. Forthcoming. https://arxiv.org/abs/1912.01703.

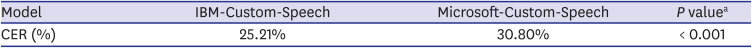

39. Yoo HJ, Seo S, Im SW, Gim GY. The performance evaluation of continuous speech recognition based on Korean phonological rules of cloud-based speech recognition open API. Int J Networked Distrib Comput. 2021; 9(1):10–18.

40. Hosmer DW Jr, Lemeshow S, Sturdivant RX. Applied Logistic Regression. 3rd ed. Hoboken, NJ, USA: John Wiley & Sons;2013.

41. Hinton GE, Osindero S, Teh YW. A fast learning algorithm for deep belief nets. Neural Comput. 2006; 18(7):1527–1554. PMID: 16764513.

42. Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014; 15(1):1929–1958.

43. Alom MZ, Taha TM, Yakopcic C, Westberg S, Sidike P, Nasrin MS, et al. A state-of-the-art survey on deep learning theory and architectures. Electronics (Basel). 2019; 8(3):292.

44. Sennrich R, Haddow B, Birch A. Neural machine translation of rare words with subword units. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics; Stroudsburg, PA, USA: Association for Computational Linguistics;2016. p. 1715–1725.
