1. Lee JG, Jun S, Cho YW, Lee H, Kim GB, Seo JB, et al. Deep learning in medical imaging: general overview. Korean J Radiol. 2017; 18:570–584. PMID: 28670152.

2. Rudie JD, Rauschecker AM, Bryan RN, Davatzikos C, Mohan S. Emerging applications of artificial intelligence in neuro-oncology. Radiology. 2019; 290:607–618. PMID: 30667332.

3. Gevaert O, Mitchell LA, Achrol AS, Xu J, Echegaray S, Steinberg GK, et al. Glioblastoma multiforme: exploratory radiogenomic analysis by using quantitative image features. Radiology. 2014; 273:168–174. PMID: 24827998.

4. Eichinger P, Alberts E, Delbridge C, Trebeschi S, Valentinitsch A, Bette S, et al. Diffusion tensor image features predict IDH genotype in newly diagnosed WHO grade II/III gliomas. Sci Rep. 2017; 7:13396. PMID: 29042619.

5. Zhou H, Vallières M, Bai HX, Su C, Tang H, Oldridge D, et al. MRI features predict survival and molecular markers in diffuse lower-grade gliomas. Neuro Oncol. 2017; 19:862–870. PMID: 28339588.

6. Chang K, Bai HX, Zhou H, Su C, Bi WL, Agbodza E, et al. Residual convolutional neural network for the determination of IDH status in low- and high-grade gliomas from MR imaging. Clin Cancer Res. 2018; 24:1073–1081. PMID: 29167275.

7. Chang P, Grinband J, Weinberg BD, Bardis M, Khy M, Cadena G, et al. Deep-learning convolutional neural networks accurately classify genetic mutations in gliomas. AJNR Am J Neuroradiol. 2018; 39:1201–1207. PMID: 29748206.

8. Han L, Kamdar MR. MRI to MGMT: predicting methylation status in glioblastoma patients using convolutional recurrent neural networks. Pac Symp Biocomput. 2018; 23:331–342. PMID: 29218894.

9. Liang S, Zhang R, Liang D, Song T, Ai T, Xia C, et al. Multimodal 3D DenseNet for IDH genotype prediction in gliomas. Genes (Basel). 2018; 9:382.

10. Louis DN, Perry A, Reifenberger G, von Deimling A, Figarella-Branger D, Cavenee WK, et al. The 2016 World Health Organization classification of tumors of the central nervous system: a summary. Acta Neuropathol. 2016; 131:803–820. PMID: 27157931.

11. Gillies RJ, Kinahan PE, Hricak H. Radiomics: images are more than pictures, they are data. Radiology. 2016; 278:563–577. PMID: 26579733.

12. Kumar V, Gu Y, Basu S, Berglund A, Eschrich SA, Schabath MB, et al. Radiomics: the process and the challenges. Magn Reson Imaging. 2012; 30:1234–1248. PMID: 22898692.

13. Lambin P, Leijenaar RTH, Deist TM, Peerlings J, de Jong EEC, van Timmeren J, et al. Radiomics: the bridge between medical imaging and personalized medicine. Nat Rev Clin Oncol. 2017; 14:749–762. PMID: 28975929.

14. Kickingereder P, Bonekamp D, Nowosielski M, Kratz A, Sill M, Burth S, et al. Radiogenomics of glioblastoma: machine learning–based classification of molecular characteristics by using multiparametric and multiregional MR imaging features. Radiology. 2016; 281:907–918. PMID: 27636026.

15. Hu LS, Ning S, Eschbacher JM, Baxter LC, Gaw N, Ranjbar S, et al. Radiogenomics to characterize regional genetic heterogeneity in glioblastoma. Neuro Oncol. 2017; 19:128–137. PMID: 27502248.

16. Park JE, Kim HS, Park SY, Nam SJ, Chun SM, Jo Y, et al. Prediction of core signaling pathway by using diffusion- and perfusion-based MRI radiomics and next-generation sequencing in isocitrate dehydrogenase wild-type glioblastoma. Radiology. 2020; 294:388–397. PMID: 31845844.

17. Kniep HC, Madesta F, Schneider T, Hanning U, Schönfeld MH, Schön G, et al. Radiomics of brain MRI: utility in prediction of metastatic tumor type. Radiology. 2018; 290:479–487. PMID: 30526358.

18. Wang G, Wang B, Wang Z, Li W, Xiu J, Liu Z, et al. Radiomics signature of brain metastasis: prediction of EGFR mutation status. Eur Radiol. 2021; 31:4538–4547. PMID: 33439315.

19. Park CJ, Park YW, Ahn SS, Kim D, Kim EH, Kang SG, et al. Quality of radiomics research on brain metastasis: a roadmap to promote clinical translation. Korean J Radiol. 2022; 23:77–88. PMID: 34983096.

20. Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J, et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans Med Imaging. 2015; 34:1993–2024. PMID: 25494501.

21. Kickingereder P, Isensee F, Tursunova I, Petersen J, Neuberger U, Bonekamp D, et al. Automated quantitative tumour response assessment of MRI in neuro-oncology with artificial neural networks: a multicentre, retrospective study. Lancet Oncol. 2019; 20:728–740. PMID: 30952559.

22. Wen PY, Macdonald DR, Reardon DA, Cloughesy TF, Sorensen AG, Galanis E, et al. Updated response assessment criteria for high-grade gliomas: response assessment in neuro-oncology working group. J Clin Oncol. 2010; 28:1963–1972. PMID: 20231676.

23. Charron O, Lallement A, Jarnet D, Noblet V, Clavier JB, Meyer P. Automatic detection and segmentation of brain metastases on multimodal MR images with a deep convolutional neural network. Comput Biol Med. 2018; 95:43–54. PMID: 29455079.

24. Kaufmann TJ, Smits M, Boxerman J, Huang R, Barboriak DP, Weller M, et al. Consensus recommendations for a standardized brain tumor imaging protocol for clinical trials in brain metastases. Neuro Oncol. 2020; 22:757–772. PMID: 32048719.

25. Liu Y, Stojadinovic S, Hrycushko B, Wardak Z, Lau S, Lu W, et al. A deep convolutional neural network-based automatic delineation strategy for multiple brain metastases stereotactic radiosurgery. PLoS One. 2017; 12:e0185844. PMID: 28985229.

26. Zhou Z, Sanders JW, Johnson JM, Gule-Monroe MK, Chen MM, Briere TM, et al. Computer-aided detection of brain metastases in T1-weighted MRI for stereotactic radiosurgery using deep learning single-shot detectors. Radiology. 2020; 295:407–415. PMID: 32181729.

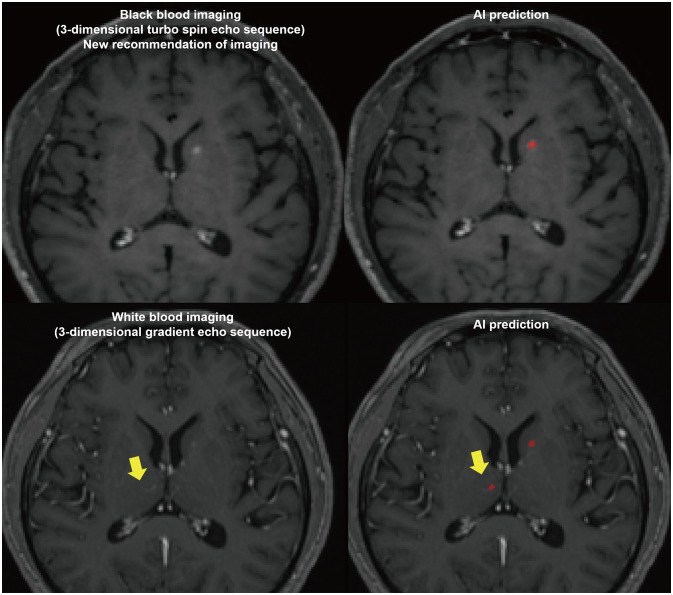

27. Jun Y, Eo T, Kim T, Shin H, Hwang D, Bae SH, et al. Deep-learned 3D black-blood imaging using automatic labelling technique and 3D convolutional neural networks for detecting metastatic brain tumors. Sci Rep. 2018; 8:9450. PMID: 29930257.

28. Park YW, Jun Y, Lee Y, Han K, An C, Ahn SS, et al. Robust performance of deep learning for automatic detection and segmentation of brain metastases using three-dimensional black-blood and three-dimensional gradient echo imaging. Eur Radiol. 2021; 31:6686–6695. PMID: 33738598.

29. Lebel RM. Performance characterization of a novel deep learning-based MR image reconstruction pipeline. arXiv [Preprint]. 2020; cited 2022 Jan 28. DOI: 10.48550/arXiv.2008.06559.

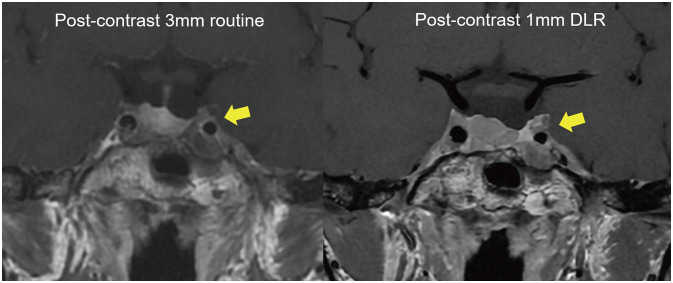

31. Kim M, Kim HS, Kim HJ, Park JE, Park SY, Kim YH, et al. Thin-slice pituitary MRI with deep learning-based reconstruction: diagnostic performance in a postoperative setting. Radiology. 2020; 298:114–122. PMID: 33141001.

32. Lee DH, Park JE, Nam YK, Lee J, Kim S, Kim YH, et al. Deep learning-based thin-section MRI reconstruction improves tumour detection and delineation in pre- and post-treatment pituitary adenoma. Sci Rep. 2021; 11:21302. PMID: 34716372.

33. Marcus G. Deep learning: a critical appraisal. arXiv [Preprint]. 2018; cited 2022 Jan 28. DOI: 10.48550/arXiv.1801.00631.

34. Moreno-Barea FJ, Jerez JM, Franco L. Improving classification accuracy using data augmentation on small data sets. Expert Syst Appl. 2020; 161:113696.

35. Engstrom L, Tran B, Tsipras D, Schmidt L, Madry A. A rotation and a translation suffice: fooling CNNs with simple transformations. In: Proceedings of the 2019 International Conference on Learning Representations; 2019 May 6-9; New Orleans, LA. OpenReview.net; Accessed Jan 28, 2022. Available at: https://openreview.net/forum?id=BJfvknCqFQ.

36. Shorten C, Khoshgoftaar TM. A survey on image data augmentation for deep learning. J Big Data. 2019; 6:60.

37. Dar SU, Yurt M, Karacan L, Erdem A, Erdem E, Cukur T. Image synthesis in multi-contrast MRI with conditional generative adversarial networks. IEEE Trans Med Imaging. 2019; 38:2375–2388. PMID: 30835216.

38. Yurt M, Dar SU, Erdem A, Erdem E, Oguz KK, Çukur T. mustGAN: multi-stream generative adversarial networks for MR image synthesis. Med Image Anal. 2021; 70:101944. PMID: 33690024.

39. Dar SUH, Yurt M, Shahdloo M, Ildız ME, Tınaz B, Çukur T. Prior-guided image reconstruction for accelerated multi-contrast MRI via generative adversarial networks. IEEE J Sel Top Signal Process. 2020; 14:1072–1087.

40. Park JE, Eun D, Kim HS, Lee DH, Jang RW, Kim N. Generative adversarial network for glioblastoma ensures morphologic variations and improves diagnostic model for isocitrate dehydrogenase mutant type. Sci Rep. 2021; 11:9912. PMID: 33972663.

41. Jayachandran Preetha C, Meredig H, Brugnara G, Mahmutoglu MA, Foltyn M, Isensee F, et al. Deep-learning-based synthesis of post-contrast T1-weighted MRI for tumour response assessment in neuro-oncology: a multicentre, retrospective cohort study. Lancet Digit Health. 2021; 3:e784–e794. PMID: 34688602.

42. Kim DW, Jang HY, Kim KW, Shin Y, Park SH. Design characteristics of studies reporting the performance of artificial intelligence algorithms for diagnostic analysis of medical images: results from recently published papers. Korean J Radiol. 2019; 20:405–410. PMID: 30799571.
