

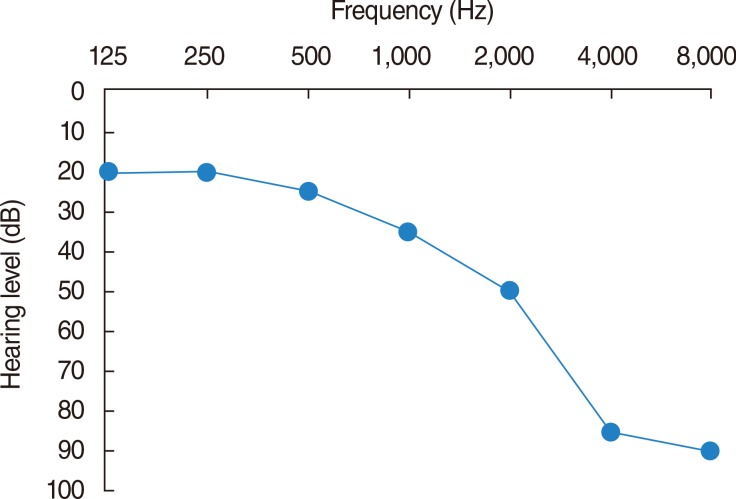

Fig. 1

Hearing threshold settings used for both HLS-1 and HLS-2 to simulate severe high-frequency hearing loss. HLS, hearing loss simulator.

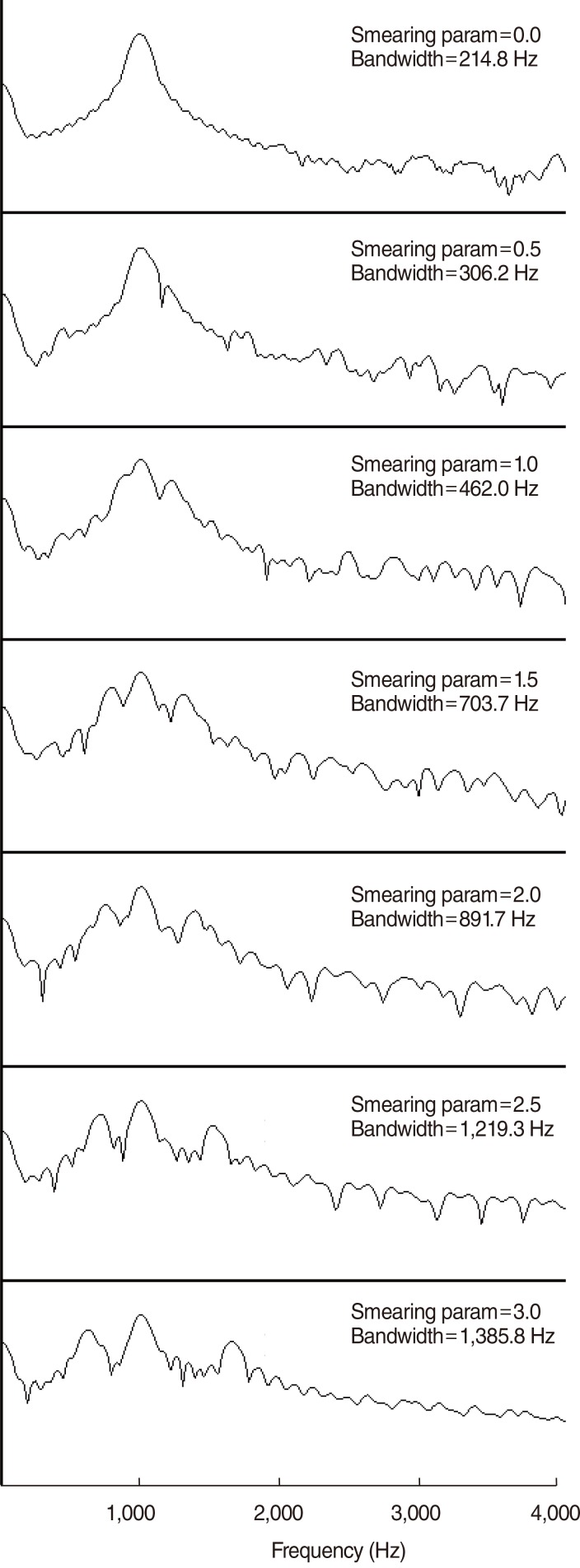

Fig. 2

HLS-2 output bandwidth measured with a 1-kHz pure-tone sine wave as input, with the smearing parameter varied from 0.0 to 3.0 in steps of 0.5. HLS, hearing loss simulator.

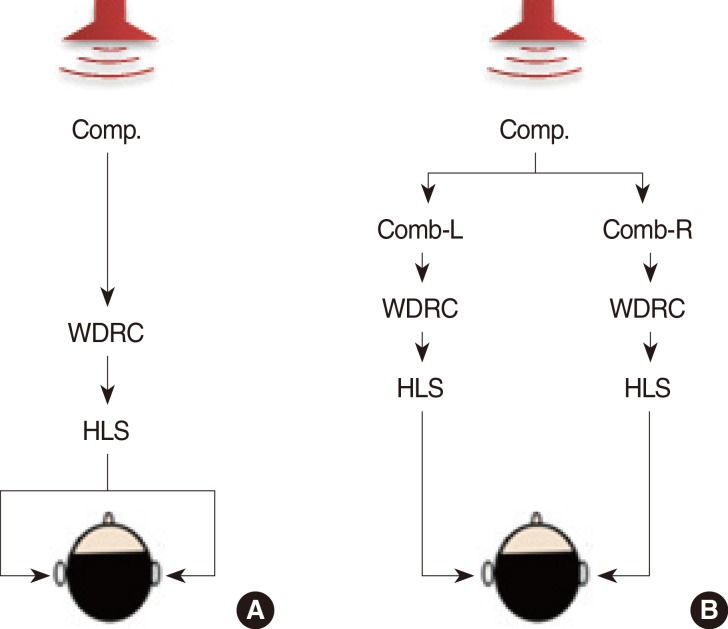

Fig. 3

Schematics of the testing algorithm combinations: (A) TAC1; (B) TAC2. Comp., frequency compression; Comb-L, odd-band comb filter; Comb-R, even-band comb filter; WDRC, wide dynamic range compression; HLS, hearing loss simulator; TAC, testing algorithm combination.

Table 1.

The 82 consonant-vowel-consonant (CVC) English words selected for word recognition testing

Table 2.

Correction ratios (%) measured with HLS-1

Values are presented as mean±standard deviation.

HLS, hearing loss simulator; ~th, words ending in th; ~s, words ending in s; ~f, words ending in f; s~, words beginning with s; f~, words beginning with f; front-f, words beginning with a fricative; rear-f, words ending in a fricative; fri-s, words with the fricative s; fri-f, words with the fricative f; TAC, testing algorithm combination.

Table 3.

Response times (s) measured with HLS-1

Values are presented as mean±standard deviation.

HLS, hearing loss simulator; ~th, words ending in th; ~s, words ending in s; ~f, words ending in f; s~, words beginning with s; f~, words beginning with f; front-f, words beginning with a fricative; rear-f, words ending in a fricative; fri-s, words with the fricative s; fri-f, words with the fricative f; TAC, testing algorithm combination.

Table 4.

Correction ratios (%) measured with HLS-2

Values are presented as mean±standard deviation.

HLS, hearing loss simulator; ~th, words ending in th; ~s, words ending in s; ~f, words ending in f; s~, words beginning with s; f~, words beginning with f; front-f, words beginning with a fricative; rear-f, words ending in a fricative; fri-s, words with the fricative s; fri-f, words with the fricative f; TAC, testing algorithm combination.

Table 5.

Response times (s) measured with HLS-2

Values are presented as mean±standard deviation.

HLS, hearing loss simulator; ~th, words ending in th; ~s, words ending in s; ~f, words ending in f; s~, words beginning with s; f~, words beginning with f; front-f, words beginning with a fricative; rear-f, words ending in a fricative; fri-s, words with the fricative s; fri-f, words with the fricative f; TAC, testing algorithm combination.

Table 6.

Comparison of correction ratios between HLS-1 and HLS-2

Values are P-values from the Mann-Whitney test.

HLS, hearing loss simulator; ~th, words ending in th; ~s, words ending in s; ~f, words ending in f; s~, words beginning with s; f~, words beginning with f; front-f, words beginning with a fricative; rear-f, words ending in a fricative; fri-s, words with the fricative s; fri-f, words with the fricative f; TAC, testing algorithm combination.

Table 7.

Comparison of response times between HLS-1 and HLS-2

Values are P-values from the Mann-Whitney test.

HLS, hearing loss simulator; ~th, words ending in th; ~s, words ending in s; ~f, words ending in f; s~, words beginning with s; f~, words beginning with f; front-f, words beginning with a fricative; rear-f, words ending in a fricative; fri-s, words with the fricative s; fri-f, words with the fricative f; TAC, testing algorithm combination.
