Abstract

Background/Aims

Capsule endoscopy (CE) has become an important tool for the diagnosis of small bowel disease. Although CE does not require the skill of endoscope insertion, the images should be interpreted by a person with experience in assessing images of the gastrointestinal mucosa. This investigation aimed to document the number of cases needed by trainees to gain the necessary experience for CE competency.

Methods

Fifteen cases were distributed to 12 clinical fellows in gastroenterology who had no previous experience with CE. The 12 trainees and an expert each read the CE images from one patient per week for 15 weeks. The diagnosis was reported using five categories (no abnormalities detected, small bowel erosion or ulcer, small bowel tumor, Crohn disease, and active small bowel bleeding with no identifiable source). Using the κ coefficient, we then examined how the mean agreement between the trainees and the expert changed week by week as training progressed.

Capsule endoscopy (CE) is a convenient method that spares the patient the discomfort of endoscope insertion and provides valuable information for the physician.1-4 CE is a valuable screening and diagnostic modality for the small bowel, which is otherwise difficult to access.1-5 However, quality control of the CE procedure is difficult to maintain if CE data are not interpreted by a qualified person. The American Society for Gastrointestinal Endoscopy recommends that CE data be interpreted by a person familiar with the mucosa of the gastrointestinal (GI) tract and the diagnosis of small bowel disease.3 American and Korean societies of gastrointestinal endoscopy have variously recommended 25, 20, and 10 cases of CE as necessary to ensure competence in the interpretation of findings.2,3,6 However, actual data supporting these case numbers have not been reported. We conducted this investigation in trainees to determine the number of cases required to gain competence in CE interpretation.

In the training experiment, 12 trainees and an expert were asked to read CE images from one patient each week for 15 weeks. The 15 educational cases were selected from four different university hospitals. All 15 case patients had adequate bowel preparation, achieved either by fasting for 12 hours and consuming a liquid diet for 24 hours or by ingesting 2 L of polyethylene glycol.7-10 All CE images were acquired using PillCam SB capsules (Given Imaging, Israel).

The expert was a faculty member of the division of gastroenterology with more than a decade of experience in wired endoscopy and wireless CE. Fourteen clinical fellows from the divisions of gastroenterology of different university hospitals voluntarily participated. Two clinical fellows dropped out because they were unable to interpret the CE images within the appointed period. The 12 remaining trainees completed the interpretation of all 15 cases within the allotted time. All trainees had experience with approximately 1,000 cases of wired endoscopy, including esophagogastroduodenoscopy and colonofiberscopy.

Before the study, the trainees received education about the CE hardware and software. Every week, they all downloaded the same CE images from an Internet disk and reported their interpretation by e-mail. The trainees had no knowledge of the other participants and were therefore unable to communicate with each other about the CE results. The clinical information of each case patient, including age, sex, and the reason for CE, was provided to the trainees. Each interpretation comprised two components: small bowel transit time (minutes) and diagnosis. The small bowel diagnosis was reported in one of five categories: no abnormalities detected, small bowel erosion or ulcer, small bowel tumor, Crohn disease, and active small bowel bleeding with no identifiable source.

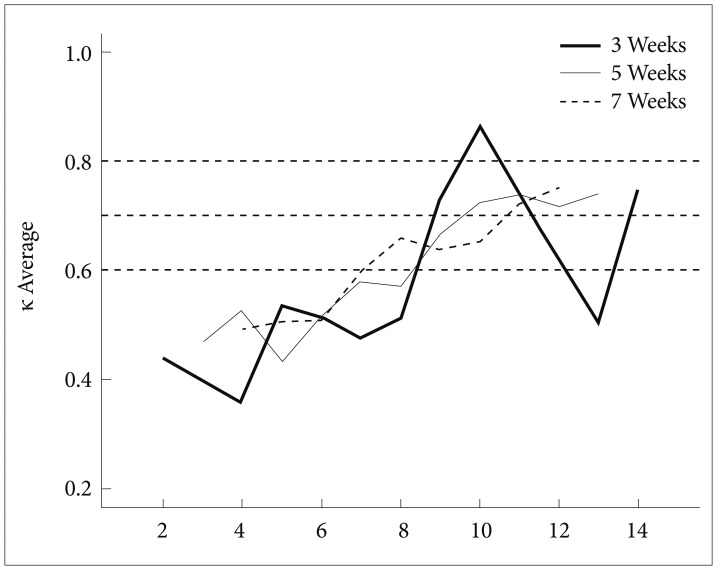

Using the κ coefficient, we examined how the mean agreement between the trainees and the expert changed as the training experiment progressed each week. The κ coefficient is defined as K=[P(o)-P(e)]/[1-P(e)], where P(o) is the observed proportion of agreement calculated from multiple ratings by two or more raters and P(e) is the proportion of agreement expected by chance. If the raters agree perfectly, K=1. If agreement is no better than chance, K=0. In this study, conventional multirater κ coefficients were inappropriate because they treat the trainees and the expert as equivalent raters.11 In addition, as each rater (trainee or expert) made only one rating (reading the images from one patient) per week, κ coefficients could not be calculated properly from the data of any single week. To overcome these problems, Cohen κ coefficients, KS, were calculated from data pooled over a span of S weeks for each pair of one trainee and the expert.12 Within the 15-week period, there were 15-(S-1) spans. For each week, the mean KS was calculated from the KS values of all trainee-expert pairs. For example, when the span size S is 3 weeks, the κ coefficient K3 of week W was calculated for each pair of one trainee and the expert from the pooled data of weeks W-1, W, and W+1. Then, for each of weeks 2, 3, …, 14, the mean K3 was obtained by averaging the 12 pairwise K3 values. Statistical significance was assessed with a p-value from a two-sample test comparing two groups at each number of interpretations. All analyses were performed with SPSS version 12.0 for Windows (SPSS Inc., Chicago, IL, USA).
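The pooled, sliding-span calculation described above can be illustrated with a minimal Python sketch. It is not the SPSS procedure used in the study. The function names, the single-letter diagnosis labels, and the convention of returning 1 when both raters use one identical category throughout a span (where κ is formally undefined) are assumptions made for illustration only.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa, K = [P(o) - P(e)] / [1 - P(e)], for two label sequences."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # P(o): observed proportion of cases on which the two raters agree
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # P(e): agreement expected by chance from each rater's category frequencies
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical category for every case; kappa is 0/0.
        # Returning 1.0 here is an assumption of this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def windowed_mean_kappa(expert, trainees, span):
    """Mean trainee-expert kappa for each centred span of `span` weeks.

    Returns {week: mean kappa}, with weeks numbered from 1. For 15 weeks
    and span S there are 15 - (S - 1) spans, as in the text.
    """
    if span < 1 or span % 2 == 0:
        raise ValueError("span must be a positive odd number of weeks")
    half = span // 2
    means = {}
    for centre in range(half, len(expert) - half):
        lo, hi = centre - half, centre + half + 1
        kappas = [cohen_kappa(t[lo:hi], expert[lo:hi]) for t in trainees]
        means[centre + 1] = sum(kappas) / len(kappas)
    return means


if __name__ == "__main__":
    # Hypothetical labels (N, U, T, C, B stand for the five diagnostic
    # categories); these are NOT the study data.
    expert = list("NUTCBNUTCBNUTCB")
    trainee_1 = list("NUUCBNUTUBNUTCB")
    trainee_2 = list("UUTCNNUTCBNUTCB")
    for week, k3 in windowed_mean_kappa(expert, [trainee_1, trainee_2], 3).items():
        print(week, round(k3, 2))
```

With 15 weekly readings, a span of 3 yields values for weeks 2 to 14 and a span of 5 yields values for weeks 3 to 13, matching the 15-(S-1) count given in the text.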

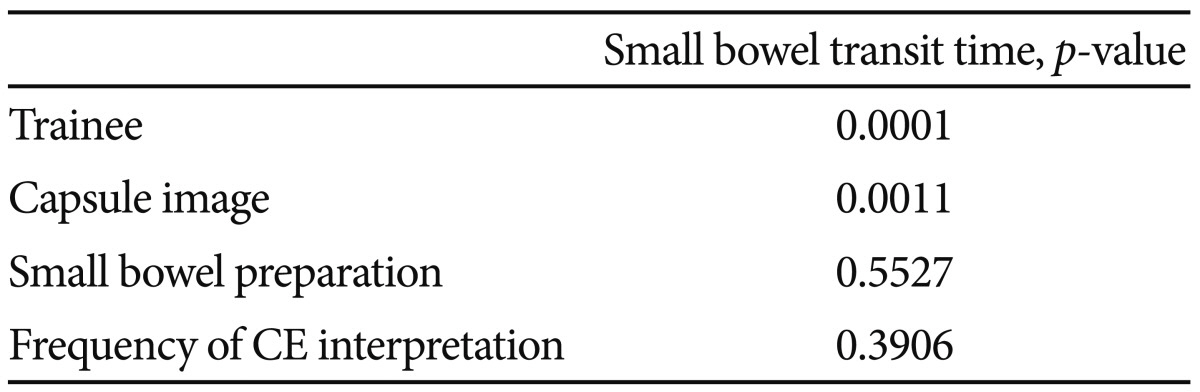

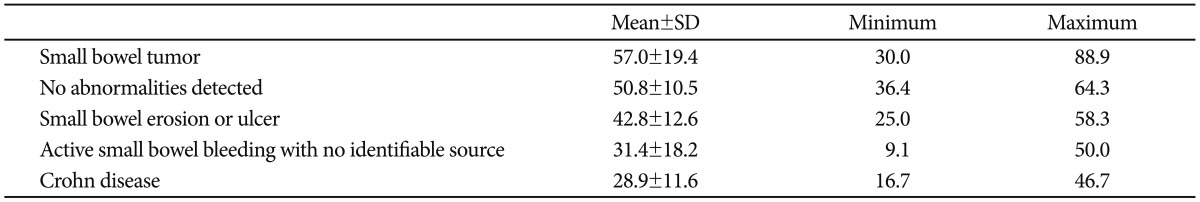

We examined which factors were associated with a κ>0.5 between the expert and the trainees for small bowel transit time and small bowel diagnosis. The agreement rate for small bowel transit time was significantly associated with two variables: trainee (p=0.001) and capsule case (p=0.0011) (Table 1). Thus, the accuracy of the transit time measurement depended on both the trainee and the capsule image. When the capsule image did not clearly show the pylorus and the ileocecal valve, the measured small bowel transit time varied according to the trainee and the capsule image. Among the diagnoses, small bowel tumor showed the highest agreement between the trainees and the expert, whereas Crohn disease showed the lowest (Table 2). The trainees' mean agreement rate with the expert was 57% for small bowel tumor and 29% for Crohn disease. Trainees tended to diagnose ulcer rather than Crohn disease owing to limited clinical information; on CE findings alone, nonspecific small bowel ulcers could not be attributed to either nonsteroidal anti-inflammatory drugs (NSAIDs) or Crohn disease. The agreement rate of CE diagnosis increased as the number of interpretations increased. Fig. 1 presents the K3, K5, and K7 values from our data. Regardless of the span size, most of the mean κ coefficients were >0.60 after week 9 and >0.80 after week 11. The trainees thus demonstrated good agreement with the expert after 9 weeks and very good agreement after 11 weeks.

A capsule endoscope is a self-contained system that transmits images of the GI tract to an external receiver. It avoids the discomfort of endoscope insertion associated with wired endoscopy.1-5 CE has become an important tool for the diagnosis of small bowel disease.1-5 Because CE can be performed without a skilled operator, it is easily used imprudently, which makes quality control of the CE process important. The American Society for Gastrointestinal Endoscopy recommends that the use of CE be limited to practitioners who are already competent in the procedure, who have performed standard endoscopy, and who have extensive experience in viewing the GI mucosa.3,6 Threshold numbers of procedures (>10 or >25) required to assess competency have been proposed.3,6 However, experimental studies or other evidence supporting these proposed numbers are lacking. We performed this investigation to determine how many cases are needed to ensure competency in performing CE.

We measured two parameters: small bowel transit time and small bowel diagnosis. Small bowel transit time is determined using the landmarks of the pylorus and the ileocecal valve.13,14 However, images of the pylorus and ileocecal valve are sometimes not captured by CE. In that case, the interpreter should take the first image of the duodenal lumen as the first observation of the small bowel, and the image just before fecal material is first seen in the large lumen as the last observation of the small bowel. The agreement on small bowel transit time between the expert and the trainees was associated with the individual capsule image and the individual trainee.
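As a small illustration of the landmark rule above, the following Python sketch computes a transit time in minutes from two recording timestamps. The `HH:MM:SS` timestamp format, the function names, and the example values are hypothetical; they are not taken from the CE software or from the study data.

```python
from datetime import timedelta


def parse_hms(timestamp):
    """Convert an 'HH:MM:SS' recording timestamp into a timedelta."""
    hours, minutes, seconds = (int(part) for part in timestamp.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def small_bowel_transit_minutes(first_duodenal_image, last_small_bowel_image):
    """Transit time in minutes between the two landmark images.

    first_duodenal_image: timestamp of the first image of the duodenal lumen.
    last_small_bowel_image: timestamp of the image just before fecal
    material is first seen in the large lumen.
    """
    elapsed = parse_hms(last_small_bowel_image) - parse_hms(first_duodenal_image)
    if elapsed <= timedelta(0):
        raise ValueError("the last small bowel image must follow the first")
    return elapsed.total_seconds() / 60


if __name__ == "__main__":
    # Hypothetical timestamps, for illustration only.
    print(small_bowel_transit_minutes("00:32:10", "04:47:40"))
```

Because the two landmark images are chosen by the reader, different readers can obtain different transit times from the same recording, which is consistent with the trainee and case dependence reported here.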

The agreement rate of small bowel diagnosis varied according to the small bowel disease entity. Crohn disease could not be differentiated from NSAID-induced ulcer or erosion on the basis of the CE image alone, and the trainees had difficulty diagnosing it. Crohn disease should be diagnosed on the basis of both the capsule image and the patient's clinical information (e.g., weight loss, diarrhea, unexplained abdominal pain, elevated C-reactive protein, hypoalbuminemia, and no recent NSAID use).15-19 Small bowel tumor is sometimes difficult to differentiate from extrinsic compression. Interpreters should therefore judge each CE finding in light of the patient's clinical information. Accordingly, CE data should be interpreted by a gastroenterologist who can diagnose small bowel disease and perform small bowel endoscopy.

A limitation of this experiment was the lack of sufficient education and continuous feedback under proper supervision, because the trainees worked in different hospitals located in geographically distant districts. Interpreters of CE findings must understand in advance the indications, contraindications, and risks of the procedure, and be familiar with the hardware and software necessary to perform CE and interpret the findings.1-6 Interpreters should be capable of detecting and describing abnormal CE findings accurately with proper terminology, and of judging CE findings in light of the clinical situation of each case.

We used the κ coefficient as a statistical measure. The κ coefficient is one of the most common measures for evaluating interrater agreement. Landis and Koch20 suggested how to interpret κ coefficients by using five categories (<0.20, poor; 0.21 to 0.40, fair; 0.41 to 0.60, moderate; 0.61 to 0.80, good; >0.80, very good). In this investigation, most of the mean κ coefficients were >0.60 after week 9 and >0.80 after week 11, which indicates good agreement of the trainees with the expert after 9 weeks and very good agreement after 11 weeks.
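The five interpretation bands quoted above, and the idea of finding the week from which agreement stays above a threshold, can be sketched in Python as follows. The function names are hypothetical, the treatment of values that fall between the printed bands (upper bounds taken as inclusive) is an assumption, and the weekly κ values in the example are invented for illustration rather than taken from Fig. 1. The second function also applies a stricter rule (every later week above the threshold) than the "most of the mean κ coefficients" wording used in the text.

```python
def interpret_kappa(kappa):
    """Map a kappa value to the five bands quoted in the text.

    Upper bounds are treated as inclusive, which is an assumption for
    values lying between the printed band edges (e.g., 0.205).
    """
    bands = [(0.20, "poor"), (0.40, "fair"), (0.60, "moderate"), (0.80, "good")]
    for upper_bound, label in bands:
        if kappa <= upper_bound:
            return label
    return "very good"


def first_week_sustained_above(mean_kappa_by_week, threshold):
    """Earliest week from which all later recorded means exceed the threshold.

    Returns None if the final recorded week does not exceed the threshold.
    """
    earliest = None
    for week in sorted(mean_kappa_by_week, reverse=True):
        if mean_kappa_by_week[week] > threshold:
            earliest = week
        else:
            break
    return earliest


if __name__ == "__main__":
    # Invented weekly means, for illustration only (not the study data).
    example = {2: 0.30, 3: 0.50, 4: 0.65, 5: 0.55, 6: 0.70, 7: 0.85}
    print(first_week_sustained_above(example, 0.60))
    print(interpret_kappa(example[7]))
```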

As with other endoscopic procedures, trainees should receive proper endoscopic training and must be exposed to a sufficient number of cases. Although performing an arbitrary number of procedures does not guarantee competence, experience with approximately 10 cases of CE is appropriate for trainees to attain competency in CE.

Acknowledgments

We deeply appreciate the participation of the 12 trainees in this study.

This work was supported by the Gastrointestinal Endoscopy Research Foundation of Korea.

References

1. Mishkin DS, Chuttani R, Croffie J, et al. ASGE Technology Status Evaluation Report: wireless capsule endoscopy. Gastrointest Endosc. 2006; 63:539–545. PMID: 16564850.

2. American Association for the Study of Liver Diseases. American College of Gastroenterology. American Gastroenterological Association (AGA) Institute. American Society for Gastrointestinal Endoscopy. The gastroenterology core curriculum, third edition. Gastroenterology. 2007; 132:2012–2018. PMID: 17484892.

3. Faigel DO, Baron TH, Adler DG, et al. ASGE guideline: guidelines for credentialing and granting privileges for capsule endoscopy. Gastrointest Endosc. 2005; 61:503–505. PMID: 15812400.

4. Varela Lema L, Ruano-Ravina A. Effectiveness and safety of capsule endoscopy in the diagnosis of small bowel diseases. J Clin Gastroenterol. 2008; 42:466–471. PMID: 18277887.

5. Rey JF, Ladas S, Alhassani A, Kuznetsov K. ESGE Guidelines Committee. European Society of Gastrointestinal Endoscopy (ESGE). Video capsule endoscopy: update to guidelines (May 2006). Endoscopy. 2006; 38:1047–1053. PMID: 17058174.

6. Gut Image Study Group. Lim YJ, Moon JS, et al. Korean Society of Gastrointestinal Endoscopy (KSGE) guidelines for credentialing and granting previleges for capsule endoscopy. Korean J Gastrointest Endosc. 2008; 37:393–402.

7. Viazis N, Sgouros S, Papaxoinis K, et al. Bowel preparation increases the diagnostic yield of capsule endoscopy: a prospective, randomized, controlled study. Gastrointest Endosc. 2004; 60:534–538. PMID: 15472674.

8. Dai N, Gubler C, Hengstler P, Meyenberger C, Bauerfeind P. Improved capsule endoscopy after bowel preparation. Gastrointest Endosc. 2005; 61:28–31. PMID: 15672052.

9. Niv Y, Niv G. Capsule endoscopy: role of bowel preparation in successful visualization. Scand J Gastroenterol. 2004; 39:1005–1009. PMID: 15513342.

10. Ben-Soussan E, Savoye G, Antonietti M, Ramirez S, Ducrotté P, Lerebours E. Is a 2-liter PEG preparation useful before capsule endoscopy? J Clin Gastroenterol. 2005; 39:381–384. PMID: 15815205.

13. Selby W. Complete small-bowel transit in patients undergoing capsule endoscopy: determining factors and improvement with metoclopramide. Gastrointest Endosc. 2005; 61:80–85. PMID: 15672061.

14. Korman LY, Delvaux M, Gay G, et al. Capsule endoscopy structured terminology (CEST): proposal of a standardized and structured terminology for reporting capsule endoscopy procedures. Endoscopy. 2005; 37:951–959. PMID: 16189767.

15. Ahmad NA, Iqbal N, Joyce A. Clinical impact of capsule endoscopy on management of gastrointestinal disorders. Clin Gastroenterol Hepatol. 2008; 6:433–437. PMID: 18325843.

16. van Tuyl SA, van Noorden JT, Stolk MF, Kuipers EJ. Clinical consequences of videocapsule endoscopy in GI bleeding and Crohn's disease. Gastrointest Endosc. 2007; 66:1164–1170. PMID: 17904134.

17. Mergener K, Ponchon T, Gralnek I, et al. Literature review and recommendations for clinical application of small-bowel capsule endoscopy, based on a panel discussion by international experts. Consensus statements for small-bowel capsule endoscopy, 2006/2007. Endoscopy. 2007; 39:895–909. PMID: 17968807.

18. Maiden L, Thjodleifsson B, Theodors A, Gonzalez J, Bjarnason I. A quantitative analysis of NSAID-induced small bowel pathology by capsule enteroscopy. Gastroenterology. 2005; 128:1172–1178. PMID: 15887101.

19. Kornbluth A, Colombel JF, Leighton JA, Loftus E. ICCE. ICCE consensus for inflammatory bowel disease. Endoscopy. 2005; 37:1051–1054. PMID: 16189789.

20. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977; 33:159–174. PMID: 843571.
