1. Thorpy M. International classification of sleep disorders. In: Chokroverty S, editor. Sleep Disorders Medicine: Basic Science, Technical Considerations and Clinical Aspects. 4th ed. New York, NY: Springer; 2017. p. 475–484.

2. Šušmáková K. Human sleep and sleep EEG. Meas Sci Rev. 2004; 4(2):59–74.

3. Banno K, Kryger MH. Sleep apnea: clinical investigations in humans. Sleep Med. 2007; 8(4):400–426. PMID: 17478121.

4. Chervin RD. Sleepiness, fatigue, tiredness, and lack of energy in obstructive sleep apnea. Chest. 2000; 118(2):372–379. PMID: 10936127.

5. Graff-Radford SB, Newman A. Obstructive sleep apnea and cluster headache. Headache. 2004; 44(6):607–610. PMID: 15186306.

6. Lattimore JD, Celermajer DS, Wilcox I. Obstructive sleep apnea and cardiovascular disease. J Am Coll Cardiol. 2003; 41(9):1429–1437. PMID: 12742277.

7. Lal C, Strange C, Bachman D. Neurocognitive impairment in obstructive sleep apnea. Chest. 2012; 141(6):1601–1610. PMID: 22670023.

8. Freire AX, Kadaria D, Avecillas JF, Murillo LC, Yataco JC. Obstructive sleep apnea and immunity: relationship of lymphocyte count and apnea hypopnea index. South Med J. 2010; 103(8):771–774. PMID: 20622723.

9. Kapur V, Strohl KP, Redline S, Iber C, O'Connor G, Nieto J. Underdiagnosis of sleep apnea syndrome in U.S. communities. Sleep Breath. 2002; 6(2):49–54. PMID: 12075479.

10. Patil SP, Schneider H, Schwartz AR, Smith PL. Adult obstructive sleep apnea: pathophysiology and diagnosis. Chest. 2007; 132(1):325–337. PMID: 17625094.

11. Douglas NJ, Thomas S, Jan MA. Clinical value of polysomnography. Lancet. 1992; 339(8789):347–350. PMID: 1346422.

12. de Chazal P, Penzel T, Heneghan C. Automated detection of obstructive sleep apnoea at different time scales using the electrocardiogram. Physiol Meas. 2004; 25(4):967–983. PMID: 15382835.

13. Bacharova L, Triantafyllou E, Vazaios C, Tomeckova I, Paranicova I, Tkacova R. The effect of obstructive sleep apnea on QRS complex morphology. J Electrocardiol. 2015; 48(2):164–170. PMID: 25541278.

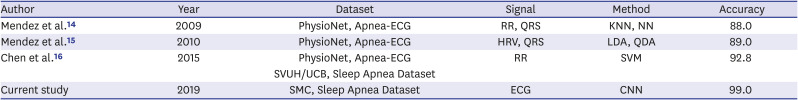

14. Mendez MO, Bianchi AM, Matteucci M, Cerutti S, Penzel T. Sleep apnea screening by autoregressive models from a single ECG lead. IEEE Trans Biomed Eng. 2009; 56(12):2838–2850. PMID: 19709961.

15. Mendez MO, Corthout J, Van Huffel S, Matteucci M, Penzel T, Cerutti S, et al. Automatic screening of obstructive sleep apnea from the ECG based on empirical mode decomposition and wavelet analysis. Physiol Meas. 2010; 31(3):273–289. PMID: 20086277.

16. Chen L, Zhang X, Song C. An automatic screening approach for obstructive sleep apnea diagnosis based on single-lead electrocardiogram. IEEE Trans Autom Sci Eng. 2015; 12(1):106–115.

17. Miotto R, Wang F, Wang S, Jiang X, Dudley JT. Deep learning for healthcare: review, opportunities and challenges. Brief Bioinform. 2018; 19(6):1236–1246. PMID: 28481991.

18. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition; 2016 June 27-30; Las Vegas, NV. Piscataway, NJ: Institute of Electrical and Electronics Engineers; 2016. p. 770–778. DOI: 10.1109/CVPR.2016.90.

19. Wu R, Yan S, Shan Y, Dang Q, Sun G. Deep image: scaling up image recognition. arXiv. 2015; 1501.02876.

20. Kiranyaz S, Ince T, Gabbouj M. Real-time patient-specific ECG classification by 1-D convolutional neural networks. IEEE Trans Biomed Eng. 2016; 63(3):664–675. PMID: 26285054.

21. Dey D, Chaudhuri S, Munshi S. Obstructive sleep apnoea detection using convolutional neural network based deep learning framework. Biomed Eng Lett. 2017; 8(1):95–100. PMID: 30603194.

22. Urtnasan E, Park JU, Lee KJ. Multiclass classification of obstructive sleep apnea/hypopnea based on a convolutional neural network from a single-lead electrocardiogram. Physiol Meas. 2018; 39(6):065003. PMID: 29794342.

23. Berry RB, Quan SF, Abreu A. The AASM Manual for the Scoring of Sleep and Associated Events: Rules, Terminology and Technical Specifications. Darien, IL: American Academy of Sleep Medicine; 2012.

24. Schmidhuber J. Deep learning in neural networks: an overview. Neural Netw. 2015; 61:85–117. PMID: 25462637.

25. van Laarhoven T. L2 regularization versus batch and weight normalization. arXiv. 2017; 1706.05350.

26. Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014; 15(56):1929–1958.

27. Zeiler MD, Ranzato M, Monga R, Mao M, Yang K, Le QV, et al. On rectified linear units for speech processing. In: Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing; 2013 May 26-31; Vancouver, Canada. Piscataway, NJ: Institute of Electrical and Electronics Engineers; 2013. p. 3517–3521. DOI: 10.1109/ICASSP.2013.6638312.

28. Zou F, Shen L, Jie Z, Sun J, Liu W. Weighted AdaGrad with unified momentum. arXiv. 2018; 1808.03408.

29. Keras: the Python deep learning API. Updated 2015. Accessed March 24, 2019. https://keras.io/.

30. Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, et al. TensorFlow: large-scale machine learning on heterogeneous distributed systems. arXiv. 2016; 1603.04467.