1. Ereso AQ, Garcia P, Tseng E, Gauger G, Kim H, Dua MM, et al. Live transference of surgical subspecialty skills using telerobotic proctoring to remote general surgeons. J Am Coll Surg. 2010; 211(3):400–411. PMID: 20800198.

2. Garcia P. Telemedicine for the battlefield: present and future technologies. In: Rosen J, Hannaford B, Satava RM, editors. Surgical Robotics: Systems Applications and Visions. Berlin: Springer; 2011. p. 33–68.

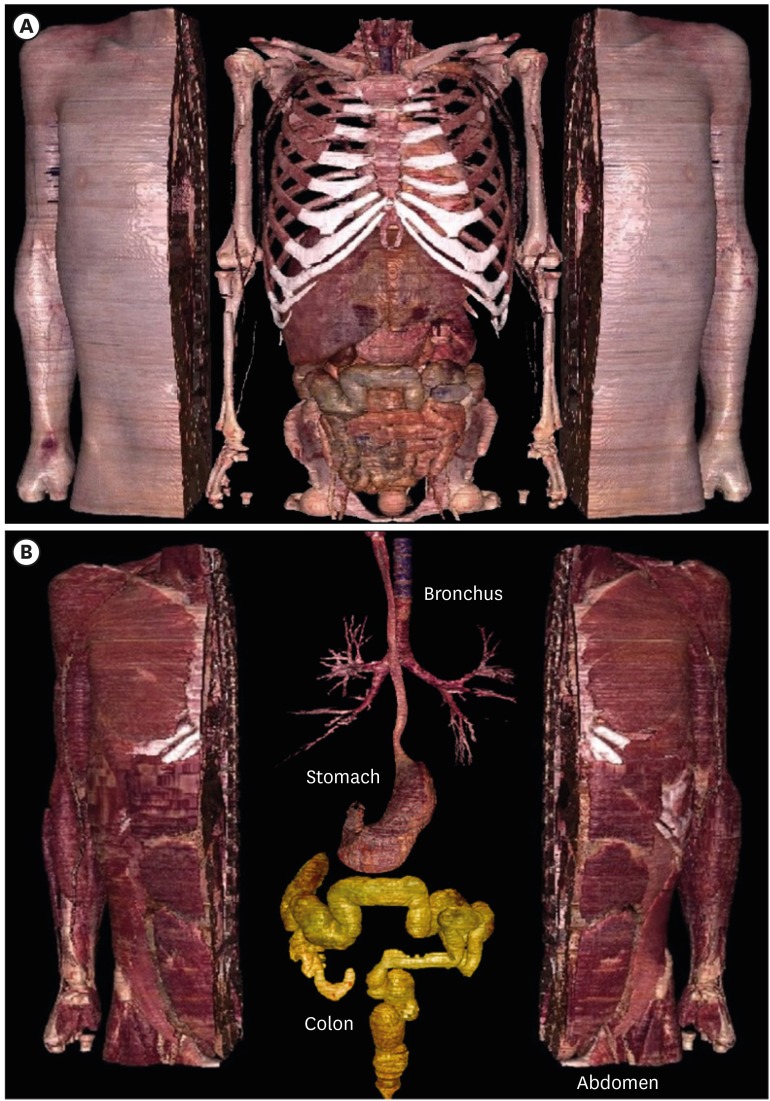

3. Gargiulo P, Helgason T, Ingvarsson P, Mayr W, Kern H, Carraro U. Medical image analysis and 3-D modeling to quantify changes and functional restoration in denervated muscle undergoing electrical stimulation treatment. Hum-Cen Comput Info. 2012; 2(10):1–11.

4. Bostanci E, Kanwal N, Clark AF. Augmented reality applications for cultural heritage using Kinect. Hum-Cen Comput Info. 2015; 5(20):1–18.

5. Park Y, Lee M, Kim MH, Lee JW. Analysis of semantic relations between multimodal medical images based on coronary anatomy for acute myocardial infarction. J Inform Proc Syst. 2016; 12(1):129–148.

6. Ren Z, Yuan J, Zhang Z. Robust hand gesture recognition based on finger-earth mover's distance with a commodity depth camera. In: Proceedings of the 19th ACM International Conference on Multimedia; New York, NY: Association for Computing Machinery; 2011. p. 1093–1096.

7. Zhang Z. Microsoft Kinect sensor and its effect. IEEE Multimed. 2012; 19(2):4–10.

8. Anderson F, Annett M, Bischof WF. Lean on Wii: physical rehabilitation with virtual reality Wii peripherals. Stud Health Technol Inform. 2010; 154:229–234. PMID: 20543303.

9. Deutsch JE, Robbins D, Morrison J, Bowlby PG. Wii-based compared to standard of care balance and mobility rehabilitation for two individuals post-stroke. In: Proceedings of the Virtual Rehabilitation International Conference; Piscataway, NJ: Institute of Electrical and Electronics Engineers; 2009. p. 117–120.

10. Potter LE, Araullo J, Carter L. The leap motion controller: a view on sign language. In: Proceedings of the 25th Australian Computer-Human Interaction Conference: Augmentation, Application, Innovation, Collaboration; New York, NY: Association for Computing Machinery; 2013. p. 175–178.

12. Nuwer R. Armband adds a twitch to gesture control. New Sci. 2013; 217(2906):21.

14. Lundström C, Rydell T, Forsell C, Persson A, Ynnerman A. Multi-touch table system for medical visualization: application to orthopedic surgery planning. IEEE Trans Vis Comput Graph. 2011; 17(12):1775–1784. PMID: 22034294.

15. Spitzer V, Ackerman MJ, Scherzinger AL, Whitlock D. The visible human male: a technical report. J Am Med Inform Assoc. 1996; 3(2):118–130. PMID: 8653448.

16. Ackerman MJ. The Visible Human project. A resource for education. Acad Med. 1999; 74(6):667–670. PMID: 10386094.

17. Park JS, Chung MS, Hwang SB, Lee YS, Har DH, Park HS. Visible Korean human: improved serially sectioned images of the entire body. IEEE Trans Med Imaging. 2005; 24(3):352–360. PMID: 15754985.

18. Chung MS, Kim SY. Three-dimensional image and virtual dissection program of the brain made of Korean cadaver. Yonsei Med J. 2000; 41(3):299–303. PMID: 10957882.

19. Shin DS, Park JS, Park HS, Hwang SB, Chung MS. Outlining of the detailed structures in sectioned images from Visible Korean. Surg Radiol Anat. 2012; 34(3):235–247. PMID: 21947014.

20. Park JS, Jung YW, Lee JW, Shin DS, Chung MS, Riemer M, et al. Generating useful images for medical applications from the Visible Korean Human. Comput Methods Programs Biomed. 2008; 92(3):257–266. PMID: 18782644.

21. Huang YX, Jin LZ, Lowe JA, Wang XY, Xu HZ, Teng YJ, et al. Three-dimensional reconstruction of the superior mediastinum from Chinese Visible Human Female. Surg Radiol Anat. 2010; 32(7):693–698. PMID: 20131053.

22. Zhang SX, Heng PA, Liu ZJ, Tan LW, Qiu MG, Li QY, et al. The Chinese Visible Human (CVH) datasets incorporate technical and imaging advances on earlier digital humans. J Anat. 2004; 204(Pt 3):165–173. PMID: 15032906.

23. Lim S, Kwon K, Shin BS. GPU‐based interactive visualization framework for ultrasound datasets. Comput Animat Virt W. 2009; 20(1):11–23.

24. Park JS, Chung MS, Hwang SB, Lee YS, Har DH, Park HS. Visible Korean Human: improved serially sectioned images of the entire body. IEEE Trans Med Imaging. 2005; 24(3):352–360. PMID: 15754985.

25. Shin DS, Jang HG, Hwang SB, Har DH, Moon YL, Chung MS. Two-dimensional sectioned images and three-dimensional surface models for learning the anatomy of the female pelvis. Anat Sci Educ. 2013; 6(5):316–323. PMID: 23463707.

26. Schiemann T, Freudenberg J, Pflesser B, Pommert A, Priesmeyer K, Riemer M, et al. Exploring the Visible Human using the VOXEL-MAN framework. Comput Med Imaging Graph. 2000; 24(3):127–132. PMID: 10838007.

27. Park JS, Chung MS, Hwang SB, Lee YS, Har DH, Park HS. Technical report on semiautomatic segmentation using the Adobe Photoshop. J Digit Imaging. 2005; 18(4):333–343. PMID: 16003588.

28. Felzenszwalb PF, Huttenlocher DP. Distance transforms of sampled functions. Theory Comput. 2012; 8:415–428.

29. Maurer CR, Qi R, Raghavan V. A linear time algorithm for computing exact Euclidean distance transforms of binary images in arbitrary dimensions. IEEE T Pattern Anal. 2003; 25(2):265–270.

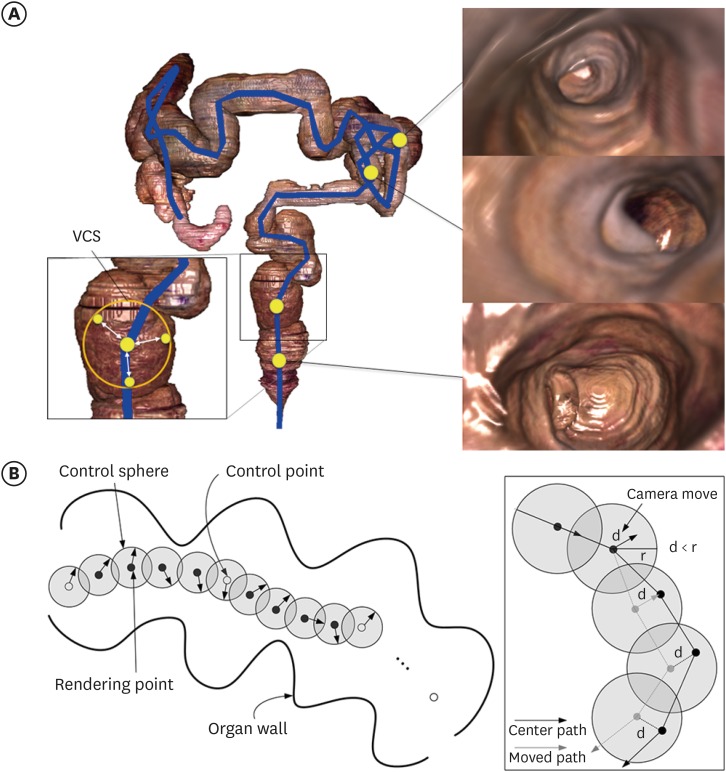

30. Kwon K, Shin BS. An efficient navigation method using progressive curverization in virtual endoscopy. Int Congr Ser. 2005; 1281:121–125.

31. Barry PJ, Goldman RN. A recursive evaluation algorithm for a class of Catmull-Rom splines. In: ACM SIGGRAPH 88 Computer Graphics: Conference Proceedings; New York, NY: Association for Computing Machinery; 1988. p. 199–204.

32. Ruppert GC, Reis LO, Amorim PH, de Moraes TF, da Silva JV. Touchless gesture user interface for interactive image visualization in urological surgery. World J Urol. 2012; 30(5):687–691. PMID: 22580994.

33. Chiang PY, Chen CC, Hsia CH. A touchless interaction interface for observing medical imaging. J Vis Commu Image R. 2019; 58:363–373.

34. Klapan I, Klapan L, Majhen Z, Duspara A, Zlatko M, Kubat G, et al. Do we really need a new navigation-noninvasive “on the Fly” gesture-controlled incisionless surgery? Biomed J Sci Tech Res. 2019; 20(5):15394–15404.

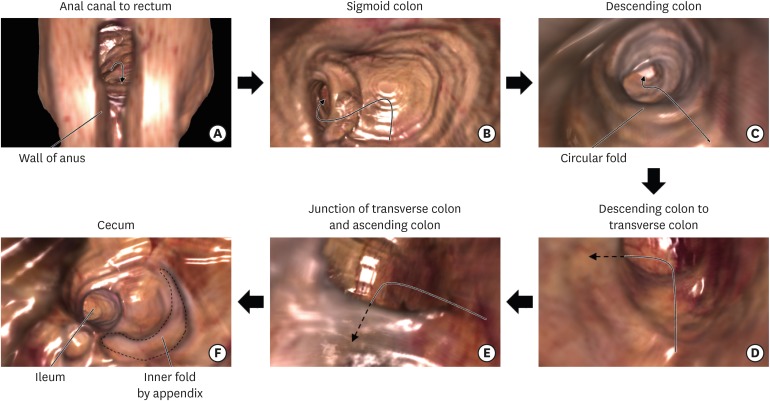

35. Chung BS, Chung MS, Park HS, Shin BS, Kwon K. Colonoscopy tutorial software made with a cadaver's sectioned images. Ann Anat. 2016; 208:19–23. PMID: 27475426.
