INTRODUCTION

Detecting whether the eye is open or closed is very important in many areas, such as human-device interfaces and driver drowsiness detection systems.

13) Monitoring a patient's eye opening is also important in the medical field. Eye blinking can create artifacts on electroencephalography (EEG) or ocular magnetic resonance imaging (MRI).

115) In addition, blinking is a useful communication channel for severely disabled patients. In general, quadriplegic patients use their voices to call the caregiver. However, severely affected quadriplegic patients have mostly undergone tracheostomy because of prolonged ventilator care or difficulty in clearing sputum. A patient with a tracheostomy cannot generate speech. These patients require other communication methods to call caregivers.

Recently, there has been a remarkable advance in artificial intelligence (AI).

12) In particular, image recognition using AI with deep learning algorithms (DLAs) has been increasingly applied in various medical fields.

7) These fields include radiographic images such as X-ray or MRI, histological classifications, and endoscopic findings.

45712) Some studies have reported using these DLAs to monitor a patient's eye status.

8) We created a new eye status monitoring system using a DLA. Further, we applied this algorithm to develop a communication system for quadriplegic patients to call the caregiver.


RESULTS

The DLA built with Google TensorFlow, trained with 18,000 images, distinguished the open and closed status of the test eye images with an accuracy of 96.1–98.7%. The results for each TensorFlow optimizer were as follows: Gradient Descent optimizer=96.05%, RMSProp optimizer=98.1%, and Adam optimizer=98.7%.

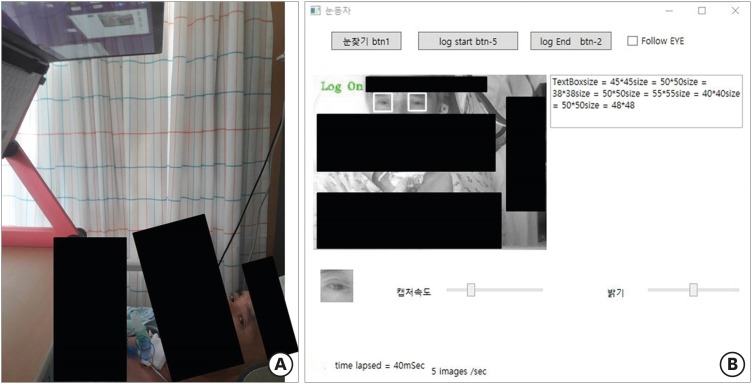

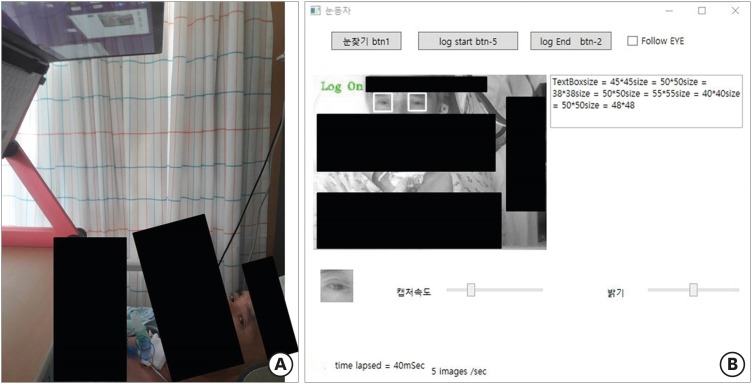

In the absence of a caregiver, the patient looked at the webcam and closed the left eye for 3 seconds, and a sound was generated to call the caregiver (FIGURE 4). In a total of 150 attempts, there was no malfunction; thus, the success rate was 100%.

FIGURE 4. Practical applications in a quadriplegic patient. (A) Actual setting for the quadriplegic patient. (B) Window of the software. Click the ‘btn 1’ button to recognize both eyes. Click the ‘log start’ button to recognize eye status and call the caregiver. When the left eye was closed for 3 seconds, a sound was generated to call the caregiver. The ‘log end’ button was used to stop distinguishing eye status.
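The call logic described here (a sound once the eye has stayed closed for 3 seconds) can be sketched as follows. This is a minimal illustration, not the software used in the study: it assumes per-frame labels are already available from a classifier, borrows the 5-images-per-second sampling and the 3-of-5 majority rule from the Discussion, and uses hypothetical names (`second_is_closed`, `should_call`).

```python
from typing import Iterable, List

# Assumed parameters: 5 images per second and a 3-of-5 majority come from the
# Discussion; the 3-second hold comes from the Results. All names are hypothetical.
FRAMES_PER_SECOND = 5
MAJORITY = 3
HOLD_SECONDS = 3


def second_is_closed(frames: List[bool]) -> bool:
    """Majority vote over one second of frames (True = classified as closed)."""
    return sum(frames) >= MAJORITY


def should_call(frame_labels: Iterable[bool]) -> bool:
    """Return True once the eye is judged closed for HOLD_SECONDS consecutive seconds."""
    labels = list(frame_labels)
    streak = 0
    # Walk through the labels one full second (FRAMES_PER_SECOND frames) at a time.
    for start in range(0, len(labels) - FRAMES_PER_SECOND + 1, FRAMES_PER_SECOND):
        window = labels[start:start + FRAMES_PER_SECOND]
        streak = streak + 1 if second_is_closed(window) else 0
        if streak >= HOLD_SECONDS:
            return True
    return False


# One misclassified frame per second does not prevent the call.
noisy_closed = [True, True, False, True, True] * 3
print(should_call(noisy_closed))
```

Resetting the streak whenever a second is judged open means that ordinary blinks, which last well under a second, should not trigger the sound under these assumptions.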


DISCUSSION

Methods for determining the closed status of an eye are largely divided into non-image-based and image-based techniques.

8) A typical non-image-based technique is EEG.

1) The advantage of this technique is fast data collection. However, its disadvantages include discomfort from the sensors attached to the body and low accuracy due to noise generated by the patient's motion. Thus, many studies have used image-based techniques to overcome these drawbacks.

8)

Image-based techniques to determine eye opening status were divided into video-based and single-image-based methods. Various video-based methods have yielded high accuracy. However, they were time-consuming and required excessive computation.

8)

Single-image-based methods were divided into non-training and training techniques. One of the most popular applications of single-image-based methods (non-training techniques) was iris detection.

2) The main advantage of these techniques was that no additional training process was required. However, their performance might suffer if feature extraction failed to obtain the information needed to distinguish eye status from a single image, which might limit the optimization of image acquisition when extracting features.

8) On the other hand, single-image-based methods using training techniques had a shorter processing time than video-based methods and were more accurate than non-training techniques. However, it was difficult to find an optimal feature extraction method for training techniques.

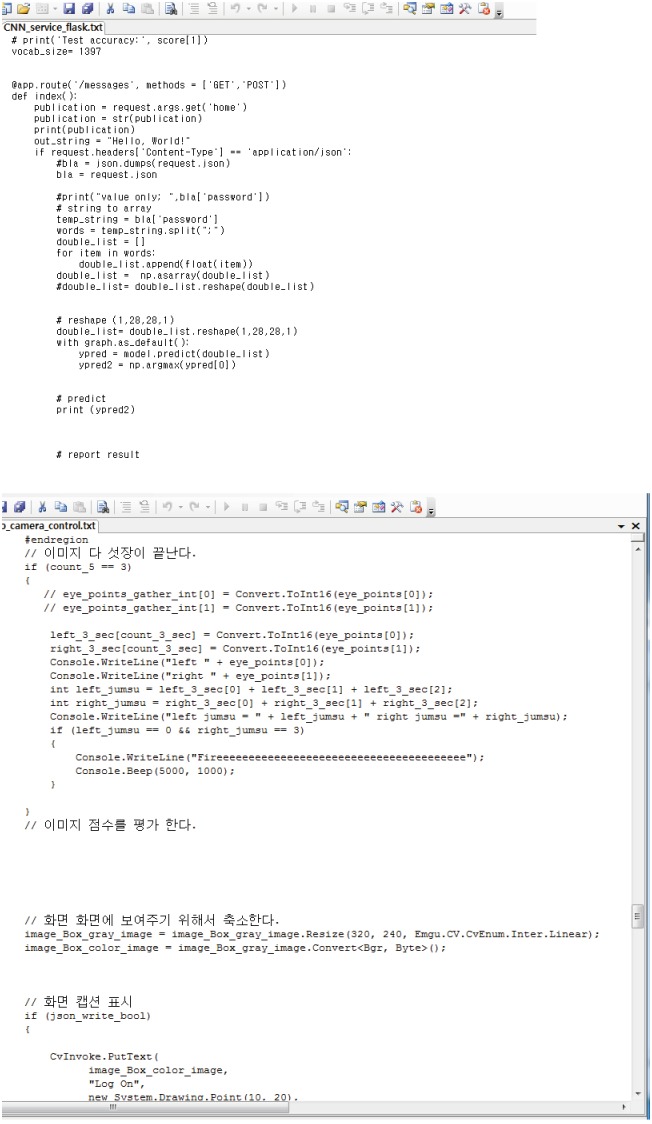

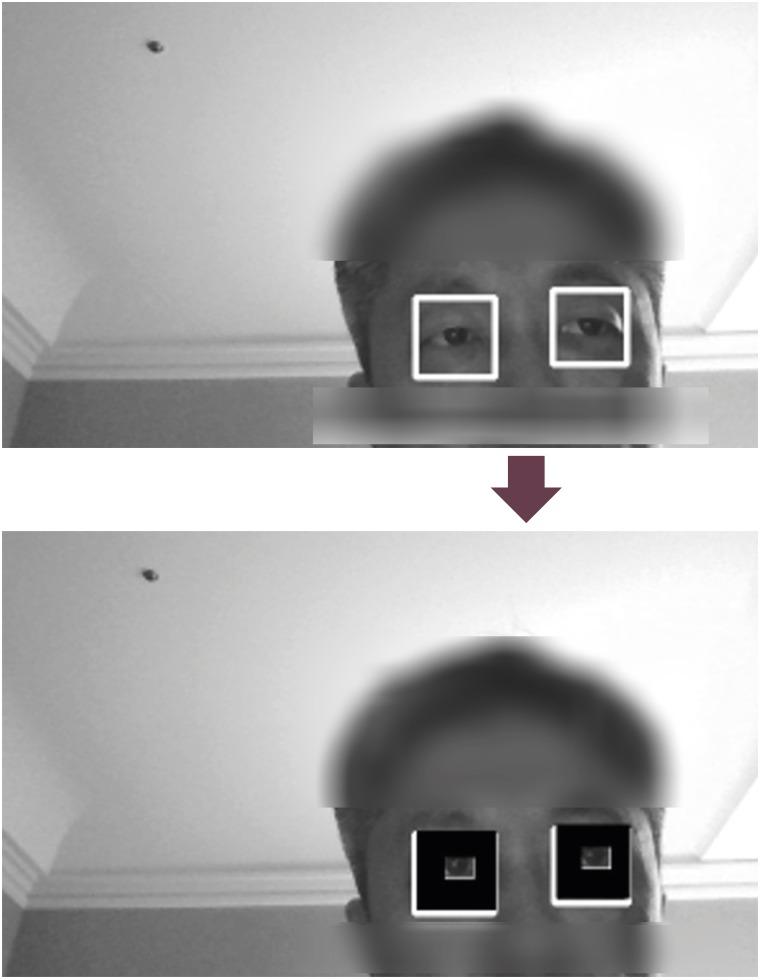

8) To resolve this problem, we considered convolutional neural network (CNN)-based methods. A CNN is a type of deep learning model that is widely used in image recognition and that facilitates the automatic acquisition of optimal features from training data through both filters and classifiers.

89) Feature extraction was thus acquired automatically from the training data.

8) We used TensorFlow with several types of CNN models. TensorFlow is an open-source deep learning platform developed by Google. It is widely used in both research and commercial applications.

3)

Our DLA determines whether the eye is open or closed fairly accurately, but it is not perfect. The error rate of the method to confirm eye status using CNN is reported to be 0.24–0.91%.

8) Therefore, an additional process is required depending on the application. In our study, 5 eye images were captured per second, and when 3 or more of them received the same classification, that classification was taken as the eye status for that second. If 3 or more images are recognized incorrectly, the eye status for that second is recognized incorrectly. Since the error rate of eye status detection from a single image was 1.3% in this study, the error rate of eye status detection per second was 0.002% ($0.987^{2}\times0.013^{3}\times10+0.987\times0.013^{4}\times5+0.013^{5}$). If this process was performed continuously for 3 seconds, the success rate of sound generation was 99.99% ($(1-0.00002)^{3}$). Thus, a single eye closed continuously for 3 seconds was almost 100% likely to be detected.
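The arithmetic above can be checked with a short script. This is a sketch that assumes, as the calculation in the text implicitly does, that the 5 images within a second are misclassified independently of one another; the function names are illustrative only.

```python
from math import comb


def per_second_error(p_frame_error: float, frames: int = 5, majority: int = 3) -> float:
    """Probability that at least `majority` of `frames` images are misclassified,
    assuming independent errors (binomial model)."""
    return sum(
        comb(frames, k) * p_frame_error**k * (1 - p_frame_error) ** (frames - k)
        for k in range(majority, frames + 1)
    )


def success_over_seconds(p_second_error: float, seconds: int = 3) -> float:
    """Probability that every one of `seconds` consecutive seconds is judged correctly."""
    return (1 - p_second_error) ** seconds


p_second = per_second_error(0.013)      # 1.3% per-image error rate from this study
p_success = success_over_seconds(p_second)

print(f"per-second error rate: {p_second * 100:.3f}%")
print(f"3-second success rate: {p_success * 100:.2f}%")
```

The three terms of the sum correspond to exactly 3, 4, and 5 misclassified images out of 5, with the factors 10 and 5 being the binomial coefficients. If consecutive frames share the same lighting or head pose, their errors are likely correlated and the real per-second error rate could be higher than this estimate.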

It would be unnecessary to develop devices simply to determine whether the eyes opened or closed once or twice. However, it is important to confirm continuous eye status in specific areas. For example, monitoring the eye status may be essential for a device that detects drowsiness while driving or when engaged in dangerous work.

13) In the medical field, the eye status may affect the result of an examination. A typical example is EEG.

1) EEG waves can vary depending on the state of the eye when the test is performed. Therefore, a few studies on devices for confirming the eye status during EEG were conducted before AI was widely known.

1) Using our method, the eye status can be determined over time via a webcam even if the patient lacks a special monitoring device. In severely affected patients who can move only their eyes, it is very important to confirm whether the eyes are open or closed. Diseases that cause this condition include complete cervical spinal cord injury, amyotrophic lateral sclerosis, and locked-in syndrome.

61011) These patients need constant care. However, caregivers cannot watch the patients at every moment. Thus, both the patient and the caregiver benefit from a device that generates a sound to call the caregiver when needed. We designed this application for that purpose. In our study, the application only produced a sound to call the caregiver.

Recently, commercial eye-tracking computing systems (ETCSs) have been developed and applied to quadriplegic patients.

14) In addition to calling the caregiver, ETCSs can help these patients through various other functions. However, such devices and software are expensive because the number of patients who need them is small. The method used in this study cannot perform eye tracking, but it can be implemented on a common office laptop with a webcam.

This system has some limitations. First, this software is only implemented in Windows and is not compatible with Android or iOS, which are widely used in mobile devices. It also cannot run concurrently with other software programs. Second, excessive head movement while running the software prevents the system from recognizing the eyes on the face. Further, if the webcam is too far from or too close to the face, the software may not be able to recognize the face. Lighting conditions also affect facial and eye recognition. Finally, the number of patients to whom this system was applied was small because the incidence of these conditions is generally very low. Thus, further studies with more patients are needed.
