I. Introduction

Electrocardiograms (ECGs) are commonly performed cardiology tests that record the electrical activity of the heart over a period using electrodes [1]. These electrodes detect small electrical changes caused by depolarization and repolarization in the electrophysiological pattern of the heart muscle during each heartbeat [1]. ECGs are widely used for various purposes, including measuring heart rate consistency, size, and location; identifying damage to the heart; and observing the effects of devices, such as pacemakers or heart-regulating medications. The information obtained via ECGs can also be used for medical diagnosis. ECGs represent the best method for measuring and diagnosing abnormal heart rhythms [2], which is especially useful for assessing damaged conduction tissues that transmit electrical signals [3]. ECGs can be used to detect damage to specific portions of the myocardium during myocardial infarctions [4]. Furthermore, digitally gathered and stored ECG data can be used for automatic ECG signal analysis [5].

ECG signals, however, are frequently interrupted by various types of noise and artifacts (Figure 1A–1C). Previous works have classified these into common artifact types [6, 7, 8], such as baseline wandering (BA), muscle artifacts (MA), and powerline interference (PLI). Subject movements or respiratory activities cause BA, which manifests as slowly wandering baselines primarily related to random body movements. ECGs with MAs are contaminated with muscular contraction artifacts. PLIs, caused by electrical power leakage or improper equipment grounding, are indicated by varying ECG amplitudes and indistinct isoelectric baselines. Because such noise or artifacts may disturb further automatic ECG signal analysis, their detection and elimination are of great importance, as this could prevent ECG noise-related misclassification or misdiagnosis.

Previous studies have attempted to de-noise ECG signals using a wide range of approaches, including wavelet transformation [9, 10, 11], weighted averages [12, 13], adaptive filtering [14], independent component analysis [15], and empirical mode decomposition (EMD) [16, 17, 18, 19]. However, existing methods have several noise-removal limitations [20]. For example, EMD-based approaches may filter out P and T waves. Adaptive filters, proposed by Rahman et al. [21], can apply filters such as the signed regression algorithm and normalized least-mean square, but they encounter difficulties obtaining noise-signal references from a typical ECG signal acquisition.

Recent ECG-related research has required a substantially different approach to noise because the scale of data collection has become very large. The Electrocardiogram Vigilance with Electronic data Warehouse II (ECG-ViEW II) released 979,273 ECG results from 461,178 patients, with plans to add all 12-lead data in the next version [22, 23]. More recent attempts have, additionally, been made to acquire ECG measurements from patient monitoring equipment in intensive care units (ICUs) [24, 25]. The MIMIC III (Medical Information Mart for Intensive Care) dataset contains 22,317 waveform records (ECG, arterial blood pressure [ABP] waveforms, fingertip photoplethysmogram [PPG] signals, and respiration). The biosignal repository used in this study collected 23,187,206 waveform records (like MIMIC III, all kinds of waveforms captured in the ICU were included) from over 8,000 patients with an observational period of approximately 517 patient-years as of October 2018.

A different approach to noise was thus believed to be required. First, because there were sufficient data, researchers did not need to use de-noised data, which might still contain incorrect information (some real-world signals captured noise exclusively without any ECG information, as shown in Figure 1C). Second, a deep learning algorithm is needed. Deep learning algorithms possess multiple advantages, chief among them that they do not require feature extraction processes performed by domain experts. The abovementioned biosignal repositories collected ECG alongside many other biosignal data types (such as respiration, ABP, PPG, and central venous pressure [CVP]), and because each type of waveform possesses unique characteristics, each would otherwise require a customized algorithm. Because a feature extraction process is unnecessary, it is easier to develop a deep learning-based algorithm to screen unacceptable signals than to develop a hand-crafted algorithm for each signal.

In this context, a new unacceptable ECG (ECG with noise) detection and screening deep learning-based model for further automatic ECG signal analysis was developed in this study. In the development process, we minimized the manual review effort of the medical expert by pre-screening ECG data using non-experts.

II. Methods

Informed consent was waived in this study by the Ajou University Hospital Institutional Review Board (No. AJIRB-MED-MDB-16-155). Only de-identified data were used and analyzed, retrospectively.

1. Data Source

The data used for this study were obtained from a biosignal repository that we constructed in our previous research [25] (Figure 2). From September 1, 2016 to September 30, 2018, the biosignal data collected from the trauma ICU of Ajou University Hospital comprised a total of 2,767,845 PPG files, measuring peripheral oxygen saturation (SpO2); 2,752,382 ECG lead II files; 2,597,692 respiration files; 1,864,864 ABP files; and 754,240 CVP files (each file contains 10 minutes of data). Because the data were collected from the trauma ICU, most patients were admitted because of physical trauma rather than any disease the patients had. Approximately 52.1% of patients in the trauma ICU underwent surgery to repair physical damage to their bodies. The average ± standard deviation of the age of the patients was 50.7 ± 20.5 years. Males constituted 73.0% of the patients.

2. Unacceptable ECG (ECG with Noise) Definition

The ECG waveforms were classified into two types: acceptable or unacceptable. An acceptable waveform was defined as a waveform in the normal category that is able to be used for further analysis. Unacceptable waveforms included the following subtypes: (1) BA, waveforms with variations in the measured voltage baseline; (2) MA, partial noise caused by patient movements or body instability; (3) PLI, noise generated across the entire waveform owing to close contact between the measurement sensor and voltage interference; (4) unacceptable (other reasons), waveforms not categorized as normal waveforms because of other causes; and (5) unclear, waveforms in which the preceding type-judgements were inappropriate. All waveforms that were not acceptable were classified as unacceptable (ECG with noise).

3. Labeling Tool Development

A web-based tool was developed to label the two types of ECG waveforms defined above. This facilitated rapid evaluation and efficient management in labeling the results of each ECG signal (Figure 3). The tool displayed a 10-second ECG result, allowing the evaluator to select one of the two types: acceptable or unacceptable. Using this tool, randomly selected ECGs were reviewed by two non-experts and a medical expert. A short pre-training (approximately 10 minutes) session was conducted for each non-expert evaluator. Labeling results were manually reviewed and corrected by a medical expert before they were used for model training (see Section II-4 and Figure 2).

4. Training, Validation, and Test Datasets

The datasets for model training and validation were initially reviewed by two non-experts. If one evaluator classified an ECG as unacceptable, the ECG was considered unacceptable even if the other disagreed. Of 15,400 labeled ECGs, 13,485 (87.6%) were classified as acceptable by the non-experts. From these pre-screened ECGs, 2,700 were randomly sampled at a 50:50 ratio (acceptable:unacceptable) to correct for the class imbalance present in the real-world dataset. The sampled ECGs were confirmed via manual review by a medical expert. These ECGs were randomly divided into a training dataset (2,400 ECGs) and a validation dataset (300 ECGs) for 9-fold cross-validation (Figure 2). The test dataset (300 ECGs) was randomly sampled from ECGs gathered from various periods, and data from patients whose data were included in the training or validation dataset were excluded (Figure 3). These data were also evaluated as acceptable or unacceptable by a medical expert using the tool described above. As a result, the dataset for 9-fold cross-validation (training and validation) and the test dataset were generated in a ratio of 9:1.
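The pre-screening rule and the balanced sampling step described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the function names `merge_non_expert_labels` and `balanced_sample`, the 0/1 label encoding (1 = unacceptable), and the random seed are all assumptions made for the example.

```python
import numpy as np

def merge_non_expert_labels(rater_a, rater_b):
    """Mark an ECG unacceptable (1) if either non-expert marked it unacceptable."""
    a = np.asarray(rater_a, dtype=bool)
    b = np.asarray(rater_b, dtype=bool)
    return np.logical_or(a, b).astype(int)

def balanced_sample(labels, n_total, rng):
    """Draw n_total indices with a 50:50 acceptable:unacceptable ratio."""
    labels = np.asarray(labels)
    half = n_total // 2
    unacceptable = np.flatnonzero(labels == 1)
    acceptable = np.flatnonzero(labels == 0)
    chosen = np.concatenate([
        rng.choice(unacceptable, size=half, replace=False),
        rng.choice(acceptable, size=half, replace=False),
    ])
    rng.shuffle(chosen)  # mix the two classes before any later split
    return chosen

# Toy labels from two hypothetical raters (not study data).
rng = np.random.default_rng(0)  # arbitrary seed
merged = merge_non_expert_labels([0, 1, 0, 1, 0, 0], [0, 0, 1, 1, 0, 0])
sample = balanced_sample(merged, n_total=4, rng=rng)
```

In the study, the analogous call would draw 2,700 ECGs (1,350 per class) from the 15,400 pre-screened recordings before expert confirmation.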

5. Deep Learning Model Development

1) Model development

Waveform data representing 10-second ECGs were used as input because they were labeled for this timespan. For the original ECGs, there were 2,500 data points because they were measured at 250 Hz. Sampling was performed at a 50% ratio to reduce input size, and the input data was ultimately defined as a 1 × 1250 vector. In addition, the frequency domain information of the ECG signals was also taken into consideration. The ECG signal energy in each frequency band was extracted using fast Fourier transform (FFT), and the structure of the transformed data was the same as that of a 1 × 1250 vector (real part of the double-sided FFT results). The input data were normalized via min-max normalization.
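A minimal sketch of this preprocessing is given below. Several details are assumptions, since the text does not specify them: downsampling is done by keeping every second sample (no anti-aliasing filter), the FFT is applied to the downsampled signal so that its real part has 1,250 values, and min-max normalization is applied per signal. The function names `min_max` and `preprocess` are hypothetical.

```python
import numpy as np

def min_max(x):
    """Scale a vector to [0, 1]; a constant vector maps to all zeros."""
    span = x.max() - x.min()
    if span == 0:
        return np.zeros_like(x)
    return (x - x.min()) / span

def preprocess(ecg_10s):
    """Turn a 10-second, 250 Hz ECG into time- and frequency-domain inputs."""
    x = np.asarray(ecg_10s, dtype=np.float64)
    if x.shape != (2500,):
        raise ValueError("expected 2,500 samples (10 s at 250 Hz)")
    time_input = x[::2]                        # assumption: keep every 2nd sample
    freq_input = np.fft.fft(time_input).real   # real part of the double-sided FFT
    return min_max(time_input).reshape(1, -1), min_max(freq_input).reshape(1, -1)

# Synthetic stand-in signal (not real ECG data).
t = np.arange(2500) / 250.0
synthetic = 0.5 + np.cos(2 * np.pi * 1.2 * t)
time_vec, freq_vec = preprocess(synthetic)
```

Both outputs have shape 1 × 1250, matching the input size stated above.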

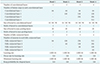

Because convolutional neural network (CNN) models have not previously been used for unacceptable ECG signal detection, there are no references on an optimal architecture. Therefore, we tested four different internal architectures, shown in Figure 4 and Table 1, moving from simple to more complex networks. In model architecture #1, which is the simplest approach, the network consists of a single convolutional layer with 64 feature maps each for the time domain and frequency domain data. Furthermore, a single fully connected layer with 64 neurons is used to combine the time domain and frequency domain information. The number of convolutional layers, feature maps, fully connected layers, and neurons gradually increases in model architectures #2, #3, and #4. The ReLU activation function was used in all the fully connected layers, and a final layer outputs the binary classification result (i.e., acceptable or not) using the softmax activation. A threshold of 0.5 was applied to the probabilities from the softmax layer to classify unacceptable (= 1) and acceptable (= 0). To define the optimum size of the kernel in the convolutional layers, we also tested three different sizes (16, 48, and 96) in all the models.

2) Model optimization

A cross-entropy loss function was selected as the cost function. The adaptive moment estimation (Adam) optimizer (learning rate = 0.0001, decay = 0.8, minimum learning rate = 0.000001) was used to train the model. We repeated the training process up to 100 epochs with a batch size of 200 (i.e., up to 600 iterations). Across the iterations, the model state that showed the lowest loss on the validation set was selected as the final trained model.

To evaluate the robustness of the results, we conducted 9-fold cross-validation with variation of the training and validation datasets. The model that exhibited the lowest loss in cross-validation was selected as the final model, and its performance was evaluated.
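The fold construction and final-model selection can be sketched as follows, assuming the 2,700 reviewed ECGs are shuffled once and split into nine equal folds, each serving in turn as the 300-ECG validation set. The function names, the seed, and the loss values in the usage lines are hypothetical and are not results from this study.

```python
import numpy as np

def cross_validation_splits(n_samples=2700, n_folds=9, seed=0):
    """Yield (train_idx, val_idx) pairs; each fold is the validation set once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), n_folds)
    for k in range(n_folds):
        val_idx = folds[k]
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
        yield train_idx, val_idx

def select_best_fold(val_losses):
    """Return the index of the fold whose model had the lowest validation loss."""
    return int(np.argmin(val_losses))

splits = list(cross_validation_splits())
# Made-up validation losses, one per fold, for illustration only.
best = select_best_fold([0.15, 0.12, 0.18, 0.14, 0.13, 0.16, 0.11, 0.17, 0.19])
```

With 2,700 ECGs and nine folds, every split contains 2,400 training and 300 validation ECGs, which matches the dataset sizes given in Section II-4.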

6. Performance Evaluation

Model performance was evaluated via comparison with the test dataset of 300 ECG signals labeled by a medical expert. The performance evaluation treated an unacceptable screening result as the positive class and calculated the sensitivity, specificity, positive and negative predictive values (PPV and NPV), F1-score, and the area under the receiver operating characteristic curve (AUROC). Sensitivity (true positive rate) refers to the ability of the model to correctly detect ECGs that the gold standard labeled as unacceptable. Specificity (true negative rate) indicates the ability of the model to correctly pass ECGs that the gold standard labeled as acceptable. PPV and NPV represent the proportions of the model's positive and negative results, respectively, that are correct. The F1-score is the harmonic mean of the sensitivity and PPV.
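These definitions can be written out from the four confusion-matrix counts, with unacceptable as the positive class. The sketch below is illustrative only: the function name `screening_metrics` is hypothetical, and the counts in the usage line are invented to show the arithmetic.

```python
def screening_metrics(tp, fn, fp, tn):
    """Compute screening metrics with 'unacceptable' as the positive class."""
    sensitivity = tp / (tp + fn)   # unacceptable ECGs correctly flagged
    specificity = tn / (tn + fp)   # acceptable ECGs correctly passed
    ppv = tp / (tp + fp)           # flagged ECGs that are truly unacceptable
    npv = tn / (tn + fn)           # passed ECGs that are truly acceptable
    f1 = 2 * sensitivity * ppv / (sensitivity + ppv)  # harmonic mean
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "f1": f1,
    }

# Invented counts for illustration (not the study's results).
m = screening_metrics(tp=40, fn=10, fp=20, tn=130)
```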

7. Cutoff for Classifying Unacceptable ECGs

To set an appropriate cutoff to screen out as many unacceptable ECGs as possible, even at the expense of some acceptable ECGs, we conducted sensitivity analysis on the cutoff and determined the optimal cutoff for our study.
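One way to carry out such a sensitivity analysis is to sweep candidate cutoffs over the predicted probability of the unacceptable class and tabulate sensitivity and specificity at each. The sketch below assumes that an ECG is flagged when its probability is greater than or equal to the cutoff; the function name `sweep_cutoffs`, the candidate cutoffs, and the toy probabilities are hypothetical.

```python
import numpy as np

def sweep_cutoffs(prob_unacceptable, y_true, cutoffs):
    """Return (cutoff, sensitivity, specificity) for each candidate cutoff."""
    p = np.asarray(prob_unacceptable, dtype=float)
    y = np.asarray(y_true, dtype=int)   # 1 = unacceptable, 0 = acceptable
    rows = []
    for c in cutoffs:
        pred = (p >= c).astype(int)     # assumption: flag when p >= cutoff
        tp = int(np.sum((pred == 1) & (y == 1)))
        fn = int(np.sum((pred == 0) & (y == 1)))
        fp = int(np.sum((pred == 1) & (y == 0)))
        tn = int(np.sum((pred == 0) & (y == 0)))
        rows.append((c, tp / (tp + fn), tn / (tn + fp)))
    return rows

# Toy predictions and labels (not study data).
probs = [0.02, 0.04, 0.10, 0.30, 0.60, 0.90, 0.03, 0.07]
truth = [0, 0, 0, 1, 1, 1, 0, 1]
table = sweep_cutoffs(probs, truth, cutoffs=[0.05, 0.25, 0.5])
```

Lowering the cutoff flags more signals, so sensitivity can only stay the same or rise while specificity can only stay the same or fall. This is the trade-off accepted here in order to screen out as many unacceptable ECGs as possible.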

8. Software Tools and Computational Environment

MS-SQL 2017 was used for data management, and Python (version 3.6.1) and the TensorFlow library (version 1.2.1) were used to develop the data preprocessing and deep learning models. The machine used for model development had one Intel Xeon CPU E5-1650 v4 (3.6 GHz), 128 GB RAM, and one GeForce GTX 1080 graphics card.

III. Results

Among the model architectures, model architecture #3 exhibited the best average cross-validation accuracy and loss (Figure 5 and Supplementary Table S1). With increasing model architecture complexity, accuracy increased, and loss decreased. With model architecture #4, however, neither the accuracy nor the loss improved. During evaluation of the kernel size in the convolutional layers, a smaller size showed better performance in all models. Therefore, model architecture #3 with a kernel size of 16 was selected as the final model architecture.

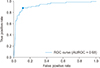

When the final model showing the lowest loss in crossvalidation among models with model architecture #3 was applied to the gold standard dataset (test dataset), it gave a result of 0.93 AUROC (

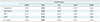

Figure 6). In the sensitivity analysis to set the cutoff to classify unacceptable ECGs, when 0.05 was used as the cutoff value, high sensitivity was achieved with reasonable performance in the other indexes (

Table 2).

When 0.05 was used as the cutoff value, 88% of the unacceptable ECGs were detected, and 11% of the acceptable data were incorrectly evaluated as unacceptable (Table 3). The signals defined as unacceptable by the algorithm were 74% unacceptable and 26% misclassified acceptable waveforms. In contrast, only 9 of 76 unacceptable ECGs in the test dataset were misclassified as acceptable, and 96% of the signals evaluated as acceptable by the model were acceptable.

The time required to evaluate the 300 gold-standard dataset signals was 0.48 seconds, taking an average of 0.58 ms for each ECG.

IV. Discussion

This study developed a deep learning model for screening unacceptable ECG waveforms. The developed model was able to identify most (88%) of the unacceptable ECGs detected by a clinical expert. Because the time required to analyze a single 10-second waveform is only approximately 0.58 ms, this model can be used in real-time and for large volume ECG analysis.

Previous studies have primarily attempted to de-noise ECG signals [26], for example, using principal component analysis (PCA) to abstract original signal data into a few eigenvectors with low noise-levels. A discrete wavelet transformation (DWT) concentrated on true signals, which possessed larger coefficients than noise data; however, threshold definition was critical. Other methods, such as wavelet Wiener filtering and pilot estimation, have also been used.

The background to the problem addressed in this study is different from that of existing attempts. As described earlier, there are now sufficient data to establish the groundwork for a future algorithm trained exclusively on accurate data, without input noise. This matters because when noisy input is entered into a learning model, its performance declines. This study therefore aimed to retain only clean data as far as possible, even at the expense of some acceptable data. Additionally, a significant portion of the noise in real-world ECG data does not arise from a specific effect altering a true signal; instead, the data are unacceptable because they consist of system-generated abnormal signals, as shown in Figure 1C. These data points cannot be de-noised, as they are exclusively noise without any true ECG signal information.

Our approach, which minimizes the intervention of domain experts by having non-experts pre-screen the data, would also be viable for application to other biosignals. Because the involvement of medical experts is accompanied by high costs, it was necessary to test whether accurate noise evaluations could be developed while reducing the effort required of medical experts. Conventional de-noising or quality assessment methods have been designed considering the features of specific waveforms; however, they require much effort from domain experts, which could be a barrier to the development of a model that can be applied to a wide variety of biosignals.

Our results in CNN model optimization suggest that deeper networks with smaller convolutional filter (kernel) sizes provide better performance. This finding was also observed in another domain, image recognition. The VGG-16 model, from the team that placed first in the localization track and second in the classification track of the ImageNet Challenge 2014, improved performance by increasing network depth with very small convolutional filters [27].

In the analysis of ECG signals, we chose 1D CNNs rather than 2D CNNs applied to spectrograms because we assumed that noise or unacceptable ECG signals are independent of time. For the same reason, we did not use recurrent neural network (RNN)-based models (long short-term memory models, gated recurrent units, etc.) and focused only on the morphological characteristics of the signal.

In addition, to evaluate the advantages of using both time and frequency domain input rather than time domain data only or frequency domain data only, we conducted an ad hoc analysis using each input type alone in model architecture #3, which showed the best performance in our study. Based on the results, we confirmed that accuracy and loss were better in 9-fold cross-validation when both data were used together (in mean ± standard deviation; accuracy = 0.95 ± 0.02, loss = 0.12 ± 0.03) than when only time domain data (accuracy = 0.93 ± 0.02, loss = 0.22 ± 0.06) or only frequency domain data were used (accuracy = 0.94 ± 0.02, loss = 0.19 ± 0.05).

Some specific waveforms due to pathology status may have been screened as unacceptable. In some cases, signal modifications caused by cardiovascular system diseases might be quite similar to those caused by noise or artifacts (for example, atrial fibrillation). Therefore, we conducted an ad hoc analysis by applying the proposed model to the results of portable ECGs that include interpretation by the ECG machine [23]. When our model was applied to 10,000 randomly selected ECG lead II recordings, 3,337 ECGs were classified as unacceptable. Further, we observed a tendency for waveforms of certain types of arrhythmia to be classified as unacceptable (Table 4). However, it is also possible that the measurement conditions or the status of arrhythmic patients were less stable, leading to more unacceptable ECGs. Therefore, this model would be suitable for filtering noisy data when preparing a noise-free training dataset. If this model is used to filter noisy signals before the application of certain arrhythmia detection models, sufficient input data must be prepared considering that the filtering rate could be high in pathologic situations.

The proposed model may be used in two ways: embedded in monitoring devices, or to process centrally collected data. As this model runs very quickly in a computing environment with an Intel Xeon CPU E5-1650 v4 (3.6 GHz), 128 GB RAM, and one GeForce GTX 1080 graphics card, time constraints would remain insignificant in the latter application because central systems would possess sufficient computing power. In the near future, monitoring devices themselves will analyze signals and provide warnings of danger. Because prediction models are highly complex, the proposed unacceptable ECG detection model needed to be as computationally simple as possible. Various approaches attempted previously in this regard [28] require further research.

This study also encountered the following limitations. First, the test dataset used as the gold standard was generated by only one expert. As previously mentioned, this study did not aim to diagnose diseases requiring a high level of domain knowledge; thus, the gap between different experts should not be significant. However, there was no supplemental evaluation to correct for potential mistakes. Second, the specific category of noise was not evaluated. Noise could be classified into five categories: BA, MA, PLI, unacceptable (other reasons), and unclear. However, data collection in an actual clinical environment resulted in a majority of normal waveforms and a small volume of noise data. Therefore, the data were insufficient for the deep learning model to learn to classify all defined categories. Furthermore, the noise categories were integrated without distinction because distinguishing between noise causes in actual applications was inconsequential (as this study aimed to eliminate all unacceptable signals, regardless of their cause). All waveforms evaluated as non-acceptable were thus integrated as unacceptable ECGs. Finally, the PPV was not high (0.74), meaning that 26% of the ECGs flagged as unacceptable by the proposed algorithm were actually acceptable. However, as mentioned above, this study aimed to increase sensitivity, and some loss of normal waveforms was acceptable because these data were sufficiently represented. In exchange, the proposed algorithm ensured high sensitivity and successfully screened 88% of unacceptable ECGs.

In conclusion, this study developed a model capable of efficiently detecting unacceptable ECGs. The developed unacceptable ECG detection model is expected to provide a first step for future automated large-scale ECG analyses.
