Abstract

Objectives

Our school introduced a new curriculum based on faculty-directed, intensive, small-group teaching of clinical skills for third-year medical students. To examine its effects, we compared the mean scores on an objective structured clinical examination (OSCE) between third- and fourth-year medical students.

Methods

Third- and fourth-year students rotated through the same five OSCE stations. They then completed a brief self-report questionnaire on their satisfaction with the new curriculum (third-year students) or with clinical practice (fourth-year students), as well as their perceived confidence and preparedness. We analyzed the OSCE data obtained from 158 students, 133 of whom also completed the questionnaire.

Results

Mean OSCE scores on the breast examination and wet smear stations were significantly higher in the third-year group (P < 0.001), whereas mean OSCE scores on the motor-sensory examination and lumbar puncture stations were significantly higher in the fourth-year group (P < 0.05). The mean OSCE scores had no significant correlation with satisfaction. Self-rated confidence correlated highly with satisfaction with the new curriculum (r = 0.673) and with clinical practice (r = 0.692). Furthermore, there was a moderate degree of correlation between satisfaction and preparedness in both groups (r = 0.403 and 0.449).

Conclusions

There was no significant difference in the effect on clinical performance and confidence between intensive small-group teaching and a 1-year clinical practice. Combining intensive small-group teaching with clinical practice would be useful for improving the ability and confidence of medical students.

The Korean Medical Licensing Exam (KMLE) has been a written exam for the past six decades. In 2006, the Korean Ministry of Health and Welfare (KMHW) declared that it would also include a skills test after 2010.1 The National Health Personnel Licensing Examination Board (NHPLEB) is required to gradually prepare this examination system and maintain its quality.2 This change in the examination has led to changes in the clinical education curricula of Korean medical schools. Third- and fourth-year medical students need a wide range of clinical skills to be equipped to become primary physicians. The objective structured clinical examination (OSCE) is a valid tool for assessing students' clinical performance on these necessary skills, and it can also identify learning deficiencies during general practice.3,4

Gyeongsang National University Medical School has had an embedded OSCE since 1998. Third-year medical students took the OSCE twice, in July and December of 2008, and all of them passed. However, a questionnaire survey suggested that the OSCE may lack the ability to screen for learning deficiencies in clinical skills. Moreover, third- and fourth-year medical students still lack confidence in their clinical skills even though they rotate through clinical departments throughout the year. To resolve these problems, we introduced a new curriculum, “Introduction to Clinical Practice”, featuring a 5-day course of faculty-directed, intensive small-group teaching of 38 clinical and communication skills for third-year students at the start of the new semester.

We conducted the present study to compare the effectiveness of the new curriculum with that of the original clinical training course, using the mean OSCE scores of the third-year (new curriculum) and fourth-year (old curriculum) students. We also examined the correlations between the mean OSCE scores and satisfaction ratings of the new curriculum in the third-year students, between the mean OSCE scores and satisfaction ratings of the original 1-year clinical practice in the fourth-year students, and between self-rated confidence and preparedness for the OSCE.

In Korea, the first semester begins in March. All third-year students participated in the new curriculum until late February of 2009. The curriculum comprised a half-day lecture, hospital rounds, a 2-day course on communication skills (bad news delivery and abdominal pain, with standardized patients) and a 5-day course of practice in 38 clinical procedural skills. These 38 procedural skills were divided by clinical department (Table 1), and ten rooms were prepared for rotations (two rooms per day). In each room, a professor examined the students' practice of the clinical procedures and gave direct feedback to the group. Group size ranged from 7 to 9 students per room at each rotation.

The fourth-year students completed their third-year curriculum with a 1-year clinical course (internal medicine, general surgery, pediatrics, obstetrics-gynecology, psychiatry, neurology, diagnostic laboratory, radiology and emergency medicine) by early February of 2009. They took several clinical skills tests while doing rotations at clinical departments such as general surgery and neurology.

A total of 86 third-year and 72 fourth-year medical students took the OSCE, forming the third-year group (n = 86) and the fourth-year group (n = 72). The OSCE included five stations that were the same for both classes (breast examination, motor-sensory examination, wet smear, lumbar puncture and blood culture) and seven stations that differed between the two groups. It was held on March 7, 2009 for the third-year students and on March 14, 2009 for the fourth-year students.

After the OSCE, the students completed a brief self-report questionnaire about their satisfaction with the OSCE stations and their self-assessed confidence and preparedness, each rated on a 10-point scale from 0 points (‘Don't agree’) to 9 points (‘Excellent’).

Statistical analysis was performed using SPSS 18.0 for Windows (SPSS Inc., Chicago, IL). The Mann-Whitney U test was used to compare the mean OSCE scores between the two groups. Pearson's correlation coefficients were calculated to analyze the correlations between the satisfaction ratings of each station and of all the OSCE stations and the students' perceived confidence and preparedness. A P-value of < 0.05 was considered statistically significant.
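The two procedures named above can be illustrated with a minimal sketch in plain Python. This is not the SPSS analysis used in the study: it assumes a two-sided Mann-Whitney U test with a normal approximation and tie correction (no continuity correction), and the function names and the toy scores are hypothetical, chosen only to show the mechanics.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U, p), where U is the smaller of the two U statistics.
    """
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        return u, 1.0  # all observations identical: no evidence of a difference
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return u, p


def pearson_r(a, b):
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    sd_a = math.sqrt(sum((p - mean_a) ** 2 for p in a))
    sd_b = math.sqrt(sum((q - mean_b) ** 2 for q in b))
    return cov / (sd_a * sd_b)


# Hypothetical station scores and ratings, for illustration only (not study data).
group_a_scores = [72, 80, 65, 90, 77]
group_b_scores = [60, 68, 71, 58, 66]
u_stat, p_value = mann_whitney_u(group_a_scores, group_b_scores)

satisfaction = [3, 5, 6, 8, 9]
confidence = [2, 6, 5, 7, 9]
r = pearson_r(satisfaction, confidence)
```

With group sizes like those in this study (86 and 72), the normal approximation is generally considered adequate; for very small samples an exact test would be the safer choice.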

A total of 158 students (86 third-year and 72 fourth-year medical students) took the OSCE, and 133 students (61 third-year and 72 fourth-year medical students) responded to the self-report questionnaire survey. Male students accounted for 57% of the third-year group and 56.9% of the fourth-year group. The mean age was 32.8 years in the third-year group and 28.4 years in the fourth-year group. There were no significant differences in age or sex between the two groups.
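The participation counts reported above imply the questionnaire response rates. The short sketch below works through that arithmetic; the counts come from the text, while the variable and function names are hypothetical.

```python
# Counts reported in the text: students who took the OSCE and who answered the questionnaire.
took_osce = {"third_year": 86, "fourth_year": 72}
responded = {"third_year": 61, "fourth_year": 72}


def response_summary(took, answered):
    """Return non-respondent counts and response rates (percent, one decimal)."""
    rows = {}
    for group, n in took.items():
        k = answered[group]
        rows[group] = {
            "non_respondents": n - k,
            "response_rate_pct": round(100 * k / n, 1),
        }
    total_n = sum(took.values())
    total_k = sum(answered.values())
    rows["overall"] = {
        "non_respondents": total_n - total_k,
        "response_rate_pct": round(100 * total_k / total_n, 1),
    }
    return rows


summary = response_summary(took_osce, responded)
# third_year: 25 non-respondents, 70.9% response rate
# fourth_year: 0 non-respondents, 100.0% response rate
# overall: 25 non-respondents, 84.2% response rate
```

All of the non-response falls in the third-year group, which is why the comparison of OSCE scores for non-respondents matters when reading the questionnaire results.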

Mean OSCE scores on breast examination and wet smear were significantly higher in the third-year group than in the fourth-year group (P < 0.001). However, mean OSCE scores on motor-sensory examination and lumbar puncture were significantly higher in the fourth-year group than in the third-year group (P < 0.05). There were no significant differences in mean OSCE scores on blood culture between the two groups (Table 2).

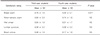

There were no significant differences in the satisfaction ratings, except for those of the breast examination station, between the two groups (Table 3). In both the third-year group and the fourth-year group, there was a moderate degree of correlation between satisfaction ratings and OSCE scores of the motor-sensory examination, the wet smear and the lumbar puncture stations (Table 4).

There were no significant differences in the ratings of satisfaction, confidence, and preparedness between the two groups (Table 5). Pearson's correlation coefficient between satisfaction with the new curriculum and confidence was 0.673 (P < 0.001) in the third-year group. In addition, Pearson's correlation coefficient between satisfaction with the 1-year clinical course and confidence was 0.692 (P < 0.001) in the fourth-year group. Total OSCE scores had no significant correlation with satisfaction (r = −0.031) or preparedness (r = −0.100). This was also true for the third-year group, where the correlation between total OSCE scores and confidence was not statistically significant (r = 0.162, P = 0.106) (Table 5). In the fourth-year group, total OSCE scores had a small correlation with satisfaction (r = 0.136) and confidence (r = 0.137) but no significant correlation with preparedness (r = −0.138). Furthermore, there was a moderate degree of correlation between satisfaction and preparedness in both groups (Table 5).

The current study has several limitations. First, twenty-five third-year students did not respond to the self-report questionnaire, although the mean OSCE scores showed no significant differences for these students. Second, we did not analyze the correlation between clinical knowledge, based on the results of a written test, and performance. The correlation between clinical knowledge and performance remains controversial.5,6,7 Third, we compared only procedural skills between the two groups.

Clinicians must minimize medical errors, and there is a worldwide consensus that medical school graduates must attain competence in critical skills that is demonstrable, measurable and transparent.8 Competence is best achieved by repeated practice with corrective feedback.9 Thus, medical students can learn clinical skills in general practice by attending clinics and seminars in hospital.10 Our results showed that the mean OSCE scores on the motor-sensory examination and lumbar puncture stations were significantly higher in the fourth-year group than in the third-year group. During their third year, the fourth-year group gained clinical experience for twenty weeks, including internal medicine for ten weeks; pediatrics, obstetrics-gynecology, general surgery and neuropsychiatry for five weeks each; and neurology, diagnostic laboratory, emergency medicine and other departments. The fourth-year group could therefore repeatedly practice suturing, incision and drainage, lumbar puncture and the motor-sensory examination during the rotations, but not the skills tested at the other OSCE stations. Accordingly, the mean OSCE scores for the skills that the fourth-year group had practiced repeatedly were significantly higher than those of the third-year group, whereas the mean OSCE scores on breast examination and wet smear were significantly lower in the fourth-year group because they had not repeated these skills during the year. This suggests that repeated, intentional training in clinical skills helps improve medical students' performance. Moreover, medical students should also participate in an education program in which their clinical skills can be improved during bedside teaching.

The OSCE is a method for evaluating the clinical performance of medical students. It has been reported that there is a poor relationship between OSCE performance and students' clinical experiences.11,12 It has also been reported that the OSCE was not effective in improving competency in some specific clinical skills.13,14 Therefore, new curricula and methods have been developed to improve the clinical skills of medical students; these include general practice attachment,4 the integration of peer-assisted learning into the undergraduate medical curriculum,15 the implementation of the OSCE in undergraduate education16 and OSCE programs combined with review and reflection by faculty members.17

Like the above new curricula or methods, our results demonstrated the effectiveness of intensive small group teaching in increasing the degree of clinical performance in medical students. The mean OSCE scores of breast examination and wet smear were significantly higher in the third-year group, despite a lack of clinical experience, as compared with the fourth-year group. Moreover, there were no significant differences in the mean OSCE scores of blood culture between the two groups. These results suggest that the successful results of the OSCE are dependent on both self-directed learning of clinical skills and the methods of delivering them to medical students during the clinical education process.

Competency-based medical education is currently under development, and it aims to provide a clear description of intended outcomes.18 Our curriculum had a defined intended learning outcome: the attainment of competency in key clinical skills. Many factors influence the improvement of students' clinical skills, such as the passage of time, the students' willingness to learn and their participation in specific educational events. The teacher's preparation is also a key factor. Although most physicians would like to teach medical students, they have little time to do so because of contractual obligations such as clinics and research.19 We successfully established our clinical curriculum with the help of many faculty members in our hospital, who gladly observed the students' performance and gave them feedback even though they had to change their schedules to participate in the new curriculum. Our new curriculum includes faculty-assisted learning and direct feedback on students' performance of clinical skills.

Education programs provide medical students and residents with increased confidence in their abilities.20 The resulting self-efficacy has an indirect effect on clinical performance through preparedness and anxiety in medical students.5 According to self-efficacy theory, there may be a significant positive correlation between self-efficacy for clinical performance and its actual level in various specialty areas.20 Moreover, self-efficacy is associated with anxiety and preparedness for the OSCE, both directly and indirectly.4,20 It would remain problematic, however, if medical students derived self-efficacy from clinical experience despite lacking actual clinical competence. Our results showed that clinical experience may be associated with confidence in clinical performance and preparedness for the OSCE. However, there was a poor correlation between the confidence of the fourth-year medical students and their mean OSCE scores.

In the current study, the mean OSCE scores on some stations were significantly lower in the fourth-year group than in the third-year group, or did not differ significantly between the two groups. Nevertheless, all the fourth-year medical students enrolled in the current study passed the clinical skills test on the KMLE in January of 2010. Presumably, this was due to a strong desire for knowledge, increased confidence in their abilities and the introduction of the new curriculum alongside the clinical course. With the introduction of the clinical skills test on the KMLE, there have been positive changes in the clinical curriculum from the viewpoints of both medical students and faculty.

In conclusion, our results showed that the new curriculum based on faculty-directed, intensive small-group teaching was effective in improving the clinical performance of medical students. Both the students' desire for knowledge and the teachers' positive attitude toward teaching would also contribute to improving clinical performance. This suggests that the effects of medical education would be increased by combining intensive small-group teaching of clinical skills with repeated practice of those skills.

Figures and Tables

Table 3

Comparison of satisfaction ratings, perception of confidence and preparedness between the two groups

Acknowledgement

We thank all the professors who participated in the new curriculum and the “Medical Educational Scholars Program” at Department of Medical Education, University of Michigan, School of Medicine, USA.

References

1. Lee YS. OSCE for the Medical Licensing Examination in Korea. Kaohsiung J Med Sci. 2008; 24:646–650.

2. Park H. Clinical Skills Assessment in Korean Medical Licensing Examination. Korean J Med Educ. 2008; 20:309–312.

3. O'Connor HM, McGraw RC. Clinical skills training developing objective assessment instruments. Med Educ. 1997; 31:359–363.

4. Townsend AH, Mcllvenny S, Miller CJ, Dunn EV. The use of an objective structured clinical examination (OSCE) for formative and summative assessment in a general practice clinical attachment and its relationship to final medical school examination performance. Med Educ. 2001; 35:841–846.

5. Park KH, Chung WJ, Hong D, Lee WK, Shin EK. Relationship between the clinical performance examination and associated variables. Korean J Med Educ. 2009; 21:269–277.

6. Mavis BE. Does studying for an objective structured clinical examination make a difference. Med Educ. 2000; 34:808–812.

7. Simon SR, Bui A, Day S, Berti D, Volkan K. The relationship between second-year medical students' OSCE scores and USMLE Step 2 scores. J Eval Clin Pract. 2007; 13:901–905.

8. General Medical Council. Tomorrow's Doctors: Recommendations on Undergraduate Medical Education. London: GMC;2002.

9. Carroll WR, Bandura A. Representational guidance of action production in observational learning: a causal analysis. J Mot Behav. 1990; 22:85–97.

10. Murray E, Jolly B, Modell M. Can students learn clinical method in general practice? A randomized crossover trial based on objective structured clinical examinations. BMJ. 1997; 315:920–923.

11. Choi EJ, Sunwoo S. Correlations of clinical assessment tools with written examinations. Korean J Med Educ. 2009; 21:43–52.

12. Park WB, Lee SA, Kim EA, Kim YS, Kim SW, Shin JS, et al. Correlation of CPX scores with the scores of the clinical clerkship assessments and written examinations. Korean J Med Educ. 2005; 17:297–303.

13. Jolly BC, Jones A, Dacre JE, Elzubeir M, Kopelman P, Hitman G. Relationships between students' clinical experiences in introductory clinical courses and their performances on an objective structured clinical examination (OSCE). Acad Med. 1996; 71:909–916.

14. Burke J, Field M. Medical education and training in rheumatologists. In: Madhok R, Capell H, editors. The year in rheumatic diseases. Oxford: Clinical Publishing Services;2007.

15. Perry ME, Burke JM, Friel L, Field M. Can training in musculoskeletal examination skills be effectively delivered by undergraduate students as part of the standard curriculum? Rheumatology (Oxford). 2010; 49:1756–1761.

16. Schoonheim-Klein ME, Habets LL, Aartman IH, Vleuten CP, Hoogstraten J, Velden U. Implementing an objective structured clinical examination (OSCE) in dental education: effects on students' learning strategies. Eur J Dent Educ. 2006; 10:226–235.

17. White CB, Ross PT, Gruppen LD. Remediating students' failed OSCE performances at one school: The effects of self-assessment, reflection, and feedback. Acad Med. 2009; 84:651–654.

18. Harris P, Snell L, Talbot M, Harden RM. Competency-based medical education: implications for undergraduate programs. Med Teach. 2010; 32:646–650.
