Abstract

Objectives

Both the valence and arousal components of affect are important considerations when managing mental healthcare because they are associated with affective and physiological responses. Research that applies deep learning to images, texts, and physiological signals for arousal and valence analysis is actively underway, but further work is needed to improve the recognition rate. The goal of this research was to design a deep learning framework and model that classifies arousal (the strength of an emotion) and valence (its degree of positivity or negativity) as high or low.

Methods

The proposed arousal and valence classification model was tested using data from the 40-channel dataset for emotion analysis using electroencephalography (EEG), physiological, and video signals (the DEAP dataset). Experiments were based on 10 selected channels of central and peripheral nervous system data, using long short-term memory (LSTM) as the deep learning method.

Results

Arousal and valence were classified and visualized on a two-dimensional coordinate plane. Profiles were designed by varying the number of hidden layers, the number of nodes, and the hyperparameters according to the error rate. The experimental results show that the arousal and valence classification models achieved accuracies of 74.65% and 78%, respectively. The proposed model performed better than previous models.

Conclusions

The proposed model appears to be effective in analyzing arousal and valence; specifically, it is expected that affective analysis using physiological signals based on LSTM will be possible without manual feature extraction. In a future study, the classification model will be adopted in mental healthcare management systems.

Recent advances in artificial intelligence technologies, such as deep learning, have resulted in the rapid development of the field, and hence in new research opportunities [12]. While the support of stable mental healthcare needs to be considered, the focus is on automated mental state detection, which is a complex phenomenon with both affective and physiological responses. Although regulation of emotion is a difficult concept, arousal and valence recognition studies have been undertaken to provide an understanding of affective experiences using physiological signals, such as electrocardiography (ECG), photoplethysmography (PPG), and electroencephalography (EEG) [3,4,5]. In addition, some studies on early mental stress detection using physiological signals have been performed in the mental healthcare domain [6,7,8,9]. With significant advances in machine learning technologies, such as deep learning, artificial intelligence methods can also be applied to improve the efficiency of emotion recognition. Recently, deep learning methods have been applied to the processing of physiological signals, such as EEG and skin resistance, with results comparable to those of conventional methods [10,11,12]. In this study, a dataset for emotion analysis obtained from an EEG, physiological, and video signals database (DEAP) was used to conduct an emotion classification experiment to validate the efficacy of the deep learning-based approach. The database is the largest, most comprehensive physiological signal emotion dataset publicly available. The goal of this study was to design an LSTM-based emotion classification model using EEG, galvanic skin response (GSR), and PPG signal data that can classify arousal (which indicates the strength of an emotion) and valence (which indicates its degree of positivity or negativity) as high or low.
To analyze emotional states, 10 channels of the DEAP dataset were used: 8 EEG channels and single channels of GSR and PPG. The accuracy of the emotion analysis presented in this paper was then assessed by comparing it with the previous study of Wang and Shang [11], which classified emotions with a deep belief network (DBN), a model that learns by probabilistic judgment, using four channels of the DEAP dataset as input data.

The remainder of this paper is organized as follows. In Section II, a brief overview of both emotion recognition and deep learning for emotion recognition is provided. The experimental design aspects of long short-term memory (LSTM)-based emotion recognition using physiological signals are discussed in Section II. The simulated and experimental schemes and their results are described in Section III. Finally, the conclusions are summarized in Section IV.

As previously mentioned, methods for emotion detection using physiological signals have been extensively investigated and have provided encouraging results where the affective states are directly related to changes in bodily signals [13,14]. For the central nervous system (CNS), EEG is a useful technique to study emotion variance. The brain's response to various stimuli is usually measured by dividing the EEG signals into different frequency rhythms, namely, delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–16 Hz), beta (16–32 Hz), and gamma (32 Hz and above). These band waves are omnipresent in various parts of the brain [15]. In addition, among peripheral systems, autonomic nervous system (ANS) activity is considered a major component of an emotional response because the physiological signals based on ANS activity are very descriptive and easy to measure [13,14,15]. Pulse waves result from periodic pulsations in the blood volume and are measured by the changes in optical absorption that they induce [16,17,18,19]. Changes in the amplitudes of PPG signals are related to the level of tension in an individual. GSR signals, which vary with the moisture level of the skin, can be an indicator of the autonomic activity underlying physiological arousal.

Emotion refers to a complex human affective state that changes in response to external factors [20,21]. Russell [22] described human emotions along two dimensions: arousal represents the strength of an emotion, ranging from relaxation to excitement, while valence represents its degree of positivity or negativity.

Among the many emotion models, we adopted Russell's model [22], in which the two dimensions are represented by a vertical arousal axis and a horizontal valence axis.

Deep learning in neural networks decomposes a network into multiple layers of processing with the aim of learning multiple levels of abstraction [5]. LSTM is a variant of the recurrent neural network (RNN) that was designed to overcome the RNN's long-term dependency problem, and it is particularly useful for sequential data, such as time series data [23]. LSTM addresses the long-term dependency problem with three gates that control what enters and leaves its memory cell: the input gate, the forget gate, and the output gate. These gates determine, respectively, how much of the input value is written to the cell, how much of the current cell value is forgotten, and how much of the state computed so far is exposed as output [24].
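The role of the three gates can be illustrated with a minimal sketch of one time step of a scalar LSTM cell. This is the standard textbook formulation, not the Deeplearning4j implementation used in this study; the function names, the parameter layout, and the example weights are assumptions made purely for illustration.

```python
import math


def sigmoid(z):
    """Logistic function; squashes a gate pre-activation into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell (illustrative only).

    params maps each of "input", "forget", "output", "candidate" to a tuple
    (input weight, recurrent weight, bias). All names are hypothetical.
    """
    def pre_activation(name):
        w, u, b = params[name]
        return w * x + u * h_prev + b

    i = sigmoid(pre_activation("input"))       # how much new content is written
    f = sigmoid(pre_activation("forget"))      # how much old cell state is kept
    o = sigmoid(pre_activation("output"))      # how much cell state is exposed
    g = math.tanh(pre_activation("candidate")) # candidate content for the cell

    c = f * c_prev + i * g   # updated memory cell
    h = o * math.tanh(c)     # hidden state passed to the next time step
    return h, c


def run_sequence(xs, params):
    """Feed a whole sequence through the cell and return the final (h, c)."""
    h, c = 0.0, 0.0
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return h, c


# Hypothetical weights; the forget-gate bias of 1.0 mirrors the bias value
# reported for the final models in this paper.
example_params = {
    "input": (0.5, 0.1, 0.0),
    "forget": (0.5, 0.1, 1.0),
    "output": (0.5, 0.1, 0.0),
    "candidate": (1.0, 0.1, 0.0),
}
final_h, final_c = run_sequence([0.2, -0.1, 0.4], example_params)
```

A larger forget-gate bias pushes the forget gate toward 1, so the cell retains more of its previous state from one time step to the next.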

This section describes the data used in the experiment, the model, and the experimental method.

The first step in mapping physiological signals to emotions is usually to extract features from the raw signal data, for instance, by analyzing heart rate variability (HRV), which reflects the response of the nervous system and is derived from R-R intervals. These features are usually hand-engineered using task-dependent techniques developed by domain experts [13,25,26,27] and then selected by experts or feature selection algorithms. In this study, we avoided this manual step by adopting the LSTM model, which allows automatic feature selection through the learning and training process.

DEAP is a multimodal dataset for the analysis of human affective states [12]. The EEG and peripheral physiological signals (downsampled to 128 Hz), including the horizontal electrooculogram (hEOG), vertical electrooculogram (vEOG), zygomaticus major electromyogram (zEMG), trapezius major electromyogram (tEMG), GSR, respiration belt data, plethysmogram, and body temperature, of 32 subjects were recorded as each subject watched 40 one-minute long videos. The subjects rated each video on continuous scales of arousal, valence, liking, dominance, and familiarity. The structure of the DEAP dataset is shown in Table 1 and Figure 1. Figure 1 shows a small part of the DEAP dataset used as the input data, which consists of the physiological signals of 10 channels.

Following previous studies [13,14], we selected a PPG signal, a GSR signal, and 8 of the 32 EEG channels: the frontal lobe channels (Fp1, Fp2, F3, F4), which are responsible for high-level cognitive, emotional, and mental functions; the temporal lobe channels (T7, T8), which are responsible for the auditory area; and the occipital lobe channels (P3, P4), which are responsible for the visual area. Of the total length of each signal (128 Hz × 63 seconds), only the 60 seconds (128 Hz × 60) that follow the initial 3-second baseline (128 Hz × 3) were used in the experiment. Each subject rated arousal and valence on a continuous scale from 1 to 9. A rating of less than 5 was set to 0, while a rating greater than or equal to 5 was set to 1, and these values were used as output data (labels). The input and output data were stored as separate files, with 40 files for each participant, giving a total of 1,280 files (32 participants × 40 videos). Of the 1,280 experimental data files, 1,024 files (80% of the total data, the 1st to the 32nd of each participant's 40 signal files) were used to train the model, and 256 files (20% of the data, the 33rd to the 40th of each participant's 40 signal files) were used to evaluate the performance of the model.
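The preprocessing arithmetic in this paragraph can be sketched as follows. This is a minimal illustration rather than the code used in the study: the function names are hypothetical, and the split assumes that the first 32 of each participant's 40 files form the training set, which is one reading of the description above.

```python
SAMPLING_RATE_HZ = 128
BASELINE_SECONDS = 3
TRIAL_SECONDS = 60


def drop_baseline(signal):
    """Keep the 60 s of stimulus data that follow the 3 s baseline."""
    start = SAMPLING_RATE_HZ * BASELINE_SECONDS
    stop = start + SAMPLING_RATE_HZ * TRIAL_SECONDS
    return signal[start:stop]


def binarize(rating, threshold=5.0):
    """Map a 1-9 self-assessment rating to low (0) or high (1)."""
    return 1 if rating >= threshold else 0


def split_trials(n_participants=32, n_videos=40, n_train_videos=32):
    """Return (train, test) lists of (participant, video) index pairs.

    Assumption: videos 1..n_train_videos of every participant are used for
    training and the remaining videos for evaluation.
    """
    train, test = [], []
    for participant in range(1, n_participants + 1):
        for video in range(1, n_videos + 1):
            target = train if video <= n_train_videos else test
            target.append((participant, video))
    return train, test


one_channel = list(range(SAMPLING_RATE_HZ * 63))  # stand-in for a raw channel
trimmed = drop_baseline(one_channel)              # 7,680 samples remain
train_files, test_files = split_trials()          # 1,024 and 256 files
```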

The structure of the proposed LSTM-based emotion classification model using the central nervous system signals and autonomic nervous system signals is shown in Figure 2. In the hidden layer of Figure 2, x0, x1,…, xt represent the input data, which are the physiological signals of 10 channels: the 8 EEG signals of the central nervous system and the GSR and PPG signals of the autonomic nervous system. The state values h0, h1,…, ht of the hidden layer are determined from the input data in such a way that the result for the previous signal affects the current signal. In addition, a forget gate (·) was installed between the hidden layer nodes to determine how much of the current value should be forgotten. The output value y is emitted only once, at the end of the sequence, since the degrees of arousal and valence are classified into the two categories of low and high. Therefore, the weights W, V, and U are calculated over each physiological signal sequence, and an output value of low or high is generated. The target output of the proposed emotion classification model was derived from the arousal and valence ratings between 1 and 9 in the DEAP dataset. Since the performance of the model depends on the numbers of layers and nodes and on the hyperparameters, we evaluated its accuracy while varying each parameter in order to improve performance. Accuracy (%) is the ratio of correct predictions, that is, true positives (TP) plus true negatives (TN), to all predictions. The experiments were conducted using Deeplearning4j, which is written for Java and Scala, in a Windows 10 environment. We installed CUDA 8.0 to use the GPU, and managed the project library by installing the build automation tool Apache Maven [27].
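As an illustration of the accuracy measure, the following sketch computes the percentage of correct binary predictions. The function name and the example labels are hypothetical and are not taken from the experiments.

```python
def accuracy_percent(y_true, y_pred):
    """Accuracy (%) = (TP + TN) / (TP + TN + FP + FN) * 100 for 0/1 labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    true_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return 100.0 * (true_pos + true_neg) / len(y_true)


# Made-up labels: three of the four predictions match the ground truth.
example_accuracy = accuracy_percent([1, 0, 1, 0], [1, 0, 0, 0])
```

Because accuracy ignores how the errors are distributed between the classes, a model that never predicts one class can still score well on imbalanced labels, so per-class results are worth inspecting alongside it.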

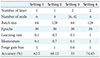

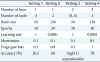

The accuracy results of the arousal and valence classification models, according to the hyperparameter values applied to the LSTM, are shown in Tables 2 and 3, respectively. We modified the hyperparameters more than 30 times, following a setup procedure intended to give the best learning result. Only the top four results for each model are shown in Tables 2 and 3.

Settings 1, 2, and 3 in Table 2 show the process of setting the hyperparameter values of the arousal classification model together with the numbers of layers and nodes. Settings 3 and 4 are part of the process of setting the hyperparameters with a fixed number of layers and nodes. The accuracy of the model differs according to the value of the forget gate bias. If the learning rate is too low, the algorithm slows down, and training may end before the cost function reaches its minimum. On the other hand, if the learning rate is too high, overshooting can occur and the minimum may never be reached [28].
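The effect of the learning rate can be illustrated with plain gradient descent on the toy cost function f(w) = w². This is only a sketch of the general behavior described above; the cost function, starting point, step counts, and learning rates are assumptions chosen for illustration and are unrelated to the settings in Tables 2 and 3.

```python
def gradient_descent(learning_rate, steps=20, w_start=5.0):
    """Minimize f(w) = w**2 (gradient 2*w) and return the final w."""
    w = w_start
    for _ in range(steps):
        w = w - learning_rate * 2.0 * w
    return w


too_low = gradient_descent(0.001)   # barely moves away from the start
moderate = gradient_descent(0.4)    # approaches the minimum at w = 0
too_high = gradient_descent(1.1)    # overshoots more on every step

# Distance from the minimum after the same number of steps.
distances = {
    "too_low": abs(too_low),
    "moderate": abs(moderate),
    "too_high": abs(too_high),
}
```

With the same number of steps, the low rate is still close to its starting point, the moderate rate is near the minimum, and the high rate has moved farther away than where it started.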

Settings 1, 2, and 3 in Table 3 show the process of setting the hyperparameter values of the valence classification model together with the numbers of layers and nodes. Settings 3 and 4 are part of the process of setting the hyperparameters with a fixed number of layers. As the result for Setting 3 shows, when the neural network becomes larger, the number of parameters increases, and the performance of the algorithm may decrease. With Setting 3, the model failed to predict the high (1) valence class.

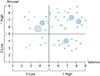

We classified the values of arousal and valence through the proposed LSTM model as low (0) and high (1). Thereafter, we arranged the results for the physiological data according to the arousal and valence values between 1 and 9. Figure 3 shows the distribution of the classification results on a two-dimensional coordinate plane with arousal and valence axes. In Figure 3, a darker circle represents a greater density of arousal or valence data at that coordinate, and a larger circle indicates a higher accumulated frequency of arousal or valence data.

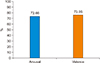

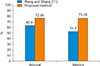

Figure 4 shows the accuracy of the arousal and valence classification models. In the experiment, the proposed arousal classification model had 2 layers, 4 nodes, a batch size of 128, and a learning rate, momentum, and forget gate bias of 1, 1, and 1, respectively. The number of iterations was set to 8. As a result, the LSTM-based arousal classification model using physiological signals showed 74.65% accuracy. The proposed valence classification model had 2 layers, 2 nodes, a batch size of 128, and a learning rate, momentum, and forget gate bias of 0.0001, 0.1, and 1, respectively. The number of iterations was set to 7. As a result, the LSTM-based valence classification model using physiological signals showed 78% accuracy. Figure 5 compares the accuracy of the model developed in this study to that of Wang and Shang [11]. As shown in Figure 5, although emotion classification is very personal and variable, each of the results is more than 20% better than that of the previous study.

In this paper, we proposed an arousal and valence classification model based on LSTM using physiological signals obtained from the DEAP dataset for mental healthcare management. The performance of the emotion classification model depends on the number of layers, the number of nodes, and the hyperparameters. Because the number of parameters increases as the neural network becomes larger, it is important to find an appropriate number of layers and nodes.

This study had some limitations, such as the lack of comparison with other classification methods, in particular non-deep learning classification models such as naïve Bayes, decision trees, and support vector machines. To improve the accuracy of the arousal and valence classification results, more systematic profile generation, including hyperparameter settings, will be performed in a future study.

When the optimized parameters are set by these criteria, the proposed arousal and valence classification models based on LSTM achieve 74.65% and 78% accuracy, respectively. In addition, the performance of the proposed emotion classification model was compared with that of the model proposed by Wang and Shang [11], who classified the degree of arousal, valence, and liking based on DBN by using four channels of autonomic nervous system signals (hEOG, vEOG, zEMG, tEMG) from the DEAP dataset as input data. In that work, the classification accuracies for arousal, valence, and liking were 60.9%, 51.2%, and 68.4%, respectively. In this paper, only the degrees of arousal and valence were classified; therefore, only the classification accuracies for arousal and valence were compared. The LSTM model proposed in this paper also achieved higher accuracy for arousal (by 1.99 percentage points) and valence (by 4.95 percentage points) than a previous study by Song [29], in which the arousal accuracy was 72.66% and the valence accuracy was 73.05%.
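The comparison above can be checked with a short calculation. The accuracy values are the ones reported in this section; the variable and function names are hypothetical, and the relative gain is an additional way of expressing the same comparison.

```python
proposed_lstm = {"arousal": 74.65, "valence": 78.0}
wang_shang_dbn = {"arousal": 60.9, "valence": 51.2}  # reference [11]
song_lstm = {"arousal": 72.66, "valence": 73.05}     # reference [29]


def point_gain(ours, baseline):
    """Absolute difference in accuracy, in percentage points."""
    return {key: round(ours[key] - baseline[key], 2) for key in ours}


def relative_gain(ours, baseline):
    """Improvement relative to the baseline accuracy, in percent."""
    return {key: round(100.0 * (ours[key] / baseline[key] - 1.0), 2) for key in ours}


gain_over_song = point_gain(proposed_lstm, song_lstm)
gain_over_dbn = point_gain(proposed_lstm, wang_shang_dbn)
relative_over_dbn = relative_gain(proposed_lstm, wang_shang_dbn)
```

Here `gain_over_song` reproduces the 1.99 and 4.95 percentage-point differences, while the gain over the DBN-based model is 13.75 points for arousal and 26.8 points for valence.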

Thus, we confirmed that the LSTM-based emotion classification model using the central nervous system and autonomic nervous system signals is more suitable for classifying emotions than the DBN-based emotion classification model using autonomic nervous system signals. These results indicate that the designed classification model has considerable potential to facilitate and enhance personal mental healthcare if it is adopted in mental health management systems, such as mobile or web-based applications. It can support individuals in managing their own mental health, and it can be used in clinical care systems to enhance existing treatment processes, such as medical examination through interviews.

In this paper, we proposed an emotion classification model based on LSTM using central and autonomic nervous system signals obtained from the DEAP dataset.

We confirmed that, depending on the situation or purpose, emotion classification is possible with LSTM, which extracts features by itself from the time series data of the central and autonomic nervous system signals. The model can quantitatively measure and analyze emotion without reflecting subjective judgment. Therefore, it is expected that emotion analysis using physiological signals based on LSTM will be possible without manual feature extraction. In a future study, the classification model will be adopted in mental healthcare management systems.

Acknowledgments

This work was supported by the Industrial Strategic Technology Development Program (No. 10073159, Developing mirroring expression based interactive robot technique by noncontact sensing and recognizing human intrinsic parameter for emotion healing through heart-body feedback) funded by the Ministry of Trade, Industry & Energy, Korea. This research was also supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. 2017R1D-1A1B03035606).

References

1. Salamon N, Grimm JM, Horack JM, Newton EK. Application of virtual reality for crew mental health in extended-duration space missions. Acta Astronaut. 2018; 146:117–122.

2. Valmaggia LR, Latif L, Kempton MJ, Rus-Calafell M. Virtual reality in the psychological treatment for mental health problems: an systematic review of recent evidence. Psychiatry Res. 2016; 236:189–195.

3. Faust O, Hagiwara Y, Hong TJ, Lih OS, Acharya UR. Deep learning for healthcare applications based on physiological signals: a review. Comput Methods Programs Biomed. 2018; 161:1–13.

4. Zhang Q, Chen X, Zhan Q, Yang T, Xia A. Respiration-based emotion recognition with deep learning. Comput Ind. 2017; 92-93:84–90.

5. Yin Z, Zhao M, Wang Y, Yang J, Zhang J. Recognition of emotions using multimodal physiological signals and an ensemble deep learning model. Comput Methods Programs Biomed. 2017; 140:93–110.

6. Haeyen S, van Hooren S, van der Veld WM, Hutschemaekers G. Promoting mental health versus reducing mental illness in art therapy with patients with personality disorders: a quantitative study. Arts Psychother. 2018; 58:11–16.

7. Zhang L, Kong M, Li Z. Emotion regulation difficulties and moral judgement in different domains: the mediation of emotional valence and arousal. Pers Individ Dif. 2017; 109:56–60.

8. Healey J, Picard RW. Detecting stress during real-world driving tasks using physiological sensors. IEEE Trans Intell Transp Syst. 2005; 6(2):156–166.

9. Xia L, Malik AS, Subhani AR. A physiological signal-based method for early mental-stress detection. Biomed Signal Process Control. 2018; 46:18–32.

10. Lee D, Kim JH, Jung WH, Lee HJ, Lee SG. The study on EEG based emotion recognition using the EMD and FFT. In: Proceedings of the HCI Society of Korea; 2013 Jan 30-Feb 1; Jeongseon, Korea. p. 127–130.

11. Wang D, Shang Y. Modeling physiological data with deep belief networks. Int J Inf Educ Technol. 2013; 3(5):505–511.

12. DEAPdataset: a dataset for emotion analysis using EEG, physiological and video signal [Internet]. London: Queen Mary University of London;c2017. cited at 2018 Oct 1. Available from: http://www.eecs.qmul.ac.uk/mmv/datasets/deap.

13. Kim J, Andre E. Emotion recognition based on physiological changes in music listening. IEEE Trans Pattern Anal Mach Intell. 2008; 30(12):2067–2083.

14. Kreibig SD. Autonomic nervous system activity in emotion: a review. Biol Psychol. 2010; 84(3):394–421.

15. Collet C, Vernet-Maury E, Delhomme G, Dittmar A. Autonomic nervous system response patterns specificity to basic emotions. J Auton Nerv Syst. 1997; 62(1-2):45–57.

16. Ryoo DW, Kim YS, Lee JW. Wearable systems for service based on physiological signals. Conf Proc IEEE Eng Med Biol Soc. 2005; 3:2437–2440.

17. Jerritta S, Murugappan M, Nagarajan R, Wan K. Physiological signals based human emotion recognition: a review. In: Proceedings of 2011 IEEE 7th International Colloquium on Signal Processing and its Applications (CSPA); 2011 Mar 4-6; Penang, Malaysia. p. 410–415.

18. Chang CY, Zheng JY, Wang CJ. Based on support vector regression for emotion recognition using physiological signals. In: Proceedings of the 2010 International Joint Conference on Neural Networks (IJCNN); 2010 Jul 18-23; Barcelona, Spain. p. 1–7.

19. Kim JH, Whang MC, Kim YJ, Woo JC. The study on emotion recognition by time dependent parameters of autonomic nervous response. Korean J Sci Emot Sensibility. 2008; 11:637–644.

20. Vogt T, Andre E. Improving automatic emotion recognition from speech via gender differentiation. In: Proceedings of Language Resources and Evaluation Conference (LREC); 2006 May 24-26; Genoa, Italy.

21. Mill A, Allik J, Realo A, Valk R. Age-related differences in emotion recognition ability: a cross-sectional study. Emotion. 2009; 9(5):619–630.

23. Kwon SJ. Sentiment analysis of movie reviews using the Word2vec and RNN [Master's thesis]. Seoul, Korea: Dongguk University;2009.

24. TensorFlow. Recurrent neural network [Internet]. [place unknown]: TensorFlow;c2018. cited at 2018 Oct 1. Available from: https://www.tensorflow.org/tutorials/sequences/recurrent.

25. Chanel G, Kierkels JJ, Soleymani M, Pun T. Short-term emotion assessment in a recall paradigm. Int J Hum Comput Stud. 2009; 67(8):607–627.

26. Rainville P, Bechara A, Naqvi N, Damasio AR. Basic emotions are associated with distinct patterns of cardiorespiratory activity. Int J Psychophysiol. 2006; 61(1):5–18.

27. Deeplearning4j [Internet]. Ottawa, Canada: Eclipse Foundation;c2018. cited at 2018 Oct 1. Available from: https://deeplearning4j.org/about.

28. Machine learning: learning rate, data processing, overfitting [Internet]. [place unknown]: [publisher unknown];c2017. cited at 2018 Oct 1. Available from: http://copycode.tistory.com/166.

29. Song SH. The emotion analysis based on long short term memory using the central and autonomic nervous system signals [Master's thesis]. Seoul, Korea: Sangmyung University;2018.