Abstract

Purpose

To analyze interobserver variability among radiologists in describing microcalcifications and assigning final assessment categories on digital magnification mammograms.

Materials and Methods

From 2005 to 2006, five radiologists reviewed, in a blinded manner, digital magnification mammograms of 66 microcalcification lesions (40 benign and 26 malignant) in 65 patients. Each observer evaluated microcalcification morphology, distribution, and BI-RADS® final assessment category. Interobserver agreement was quantified with the kappa statistic, and the rate of malignancy was assessed.
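The abstract does not say which kappa variant was used. Because five readers rated every lesion and the reference list cites Fleiss, a multi-rater statistic such as Fleiss' kappa is one plausible choice; the sketch below assumes that variant. It takes a lesions × categories table of rating counts (the function name `fleiss_kappa` and the example table are hypothetical illustrations, not the study data).

```python
from typing import List, Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for a subjects x categories table of rating counts.

    counts[i][j] = number of raters who put subject i (e.g. a lesion)
    in category j (e.g. a distribution descriptor). Every row must sum
    to the same number of raters n >= 2.
    """
    n_subjects = len(counts)
    if n_subjects == 0:
        raise ValueError("need at least one subject")
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each subject needs the same number (>= 2) of raters")
    n_categories = len(counts[0])

    # p_j: share of all ratings that fell into category j
    total = n_subjects * n_raters
    p: List[float] = [sum(row[j] for row in counts) / total for j in range(n_categories)]

    # P_i: fraction of rater pairs that agree on subject i
    pair_agreement = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]

    p_observed = sum(pair_agreement) / n_subjects  # mean observed agreement
    p_chance = sum(pj * pj for pj in p)            # agreement expected by chance
    if p_chance == 1:
        raise ValueError("kappa is undefined when all ratings share one category")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical toy data: 4 lesions, 5 readers, 3 categories
ratings = [[5, 0, 0], [3, 2, 0], [0, 4, 1], [1, 1, 3]]
print(round(fleiss_kappa(ratings), 2))  # → 0.33
```

Pairwise Cohen's kappa averaged over the ten reader pairs is a common alternative for the "mean kappa" reported below; the two can give somewhat different values on the same data.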

Results

The mean kappa value for microcalcification morphology was 0.19 (slight agreement), whereas agreement for microcalcification distribution was moderate (κ = 0.54). The overall rate of malignancy across the morphology and distribution descriptors was 39%. Among the morphology descriptors, amorphous microcalcifications showed the lowest rate of malignancy (17%). The mean kappa value for the BI-RADS® final assessment categories was 0.29, and the mean rate of malignancy was 39%.
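The verbal labels attached to these kappa values follow the Landis and Koch (1977) bands. The sketch below maps each reported mean kappa to its band and computes a rate of malignancy, assuming that rate is simply the share of lesions in a group that were malignant; the helper names `landis_koch_label` and `malignancy_rate` are hypothetical.

```python
def landis_koch_label(kappa: float) -> str:
    """Verbal label for a kappa value on the Landis & Koch (1977) scale."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


def malignancy_rate(n_malignant: int, n_total: int) -> float:
    """Assumed definition: proportion of lesions in a group that were malignant."""
    if n_total <= 0:
        raise ValueError("group must contain at least one lesion")
    return n_malignant / n_total


if __name__ == "__main__":
    # Mean kappa values reported in the Results
    for name, k in [("morphology", 0.19), ("distribution", 0.54), ("BI-RADS category", 0.29)]:
        print(f"{name}: kappa = {k:.2f} ({landis_koch_label(k)})")
    # Whole series: 26 malignant of 66 lesions
    print(f"overall malignancy rate: {malignancy_rate(26, 66):.0%}")
```

Under these bands the final assessment categories (0.29) fall in the "fair" range, between the slight agreement for morphology and the moderate agreement for distribution; and 26 of 66 lesions is about 39%, consistent with the overall rate reported.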

Conclusion

Although agreement varied slightly from one descriptor to another, overall interobserver agreement in the interpretation of microcalcifications on digital magnification mammograms was slight to moderate. To improve interobserver agreement for the interpretation of microcalcifications, proper image quality control, standardized criteria, and adequate training of radiologists are needed.

Figures and Tables

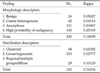

Table 2

Diagnostic Evaluation and Correlation with Final Assessment Categorization of Microcalcification

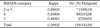

Table 4

Results of Published Series of Interobserver Variability on Mammogram

Note. * 13 findings, including lobulated mass, asymmetric breast parenchyma, nipple retraction, skin thickening, axillary lymph node, spiculated mass, indistinctly marginated mass, Cooper's ligament thickening, intramammary lymph node, duct ectasia, architectural distortion, and stellate mass

† 8–12 cases of masses

‡NA: not assessable

References

1. American College of Radiology. Breast imaging reporting and data system, breast imaging atlas. 4th ed. Reston, VA: American College of Radiology; 2003.

2. Sickles EA, Doi K, Genant HK. Magnification film mammography: image quality and clinical studies. Radiology. 1977; 125:69–76.

3. Sickles EA. Further experience with microfocal spot magnification mammography in the assessment of clustered breast microcalcifications. Radiology. 1980; 137:9–14.

4. Obenauer S, Luftner-Nagel S, von Heyden D, Munzel U, Baum F, Grabbe E. Screen film vs full-field digital mammography: image quality, detectability and characterization of lesions. Eur Radiol. 2002; 12:1697–1702.

5. Fischer U, Baum F, Obenauer S, Luftner-Nagel S, von Heyden D, Vosshenrich R, et al. Comparative study in patients with microcalcifications: full-field digital mammography vs screen-film mammography. Eur Radiol. 2002; 12:2679–2683.

6. Fischer U, Hermann KP, Baum F. Digital mammography: current state and future aspects. Eur Radiol. 2006; 16:38–44.

7. Cho SY, Choi CS, Kim HC, Choi MH, Kim EA, Bae SH, et al. Interobserver variation in interpretation of mammograms: focused on findings suggestive of malignancy. J Korean Radiol Soc. 1996; 34:133–137.

8. Jin GY, Han YM, Lim YS, Jang KY, Lee SY, Chung GH. Percutaneous radiofrequency thermal ablation of lung VX2 tumors in a rabbit model: evaluation with helical CT findings for the complete and partial ablation. J Korean Radiol Soc. 2004; 51:351–356.

9. Lazarus E, Mainiero MB, Schepps B, Koelliker SL, Livingston LS. BI-RADS lexicon for US and mammography: interobserver variability and positive predictive value. Radiology. 2006; 239:385–391.

10. Berg WA, Campassi C, Langenberg P, Sexton MJ. Breast Imaging Reporting and Data System: inter- and intraobserver variability in feature analysis and final assessment. AJR Am J Roentgenol. 2000; 174:1769–1777.

11. Cosar ZS, Cetin M, Tepe TK, Cetin R, Zarali AC. Concordance of mammographic classifications of microcalcifications in breast cancer diagnosis: utility of the breast imaging reporting and data system (fourth edition). Clin Imaging. 2005; 29:389–395.

12. Fleiss JL. Statistical methods for rates and proportions. 2nd ed. New York: John Wiley & Sons Inc; 1981.

13. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977; 33:159–174.

14. Feig SA, Galkin BM, Muir HD. Evaluation of breast microcalcifications by means of optically magnified tissue specimen radiographs. Recent Results Cancer Res. 1987; 105:111–123.

15. Sickles EA. Mammographic features of 300 consecutive nonpalpable breast cancers. AJR Am J Roentgenol. 1986; 146:661–663.

16. Hermann KP, Obenauer S, Funke M, Grabbe EH. Magnification mammography: a comparison of full-field digital mammography and screen-film mammography for the detection of simulated small masses and microcalcifications. Eur Radiol. 2002; 12:2188–2191.

17. Orel SG, Kay N, Reynolds C, Sullivan DC. BI-RADS categorization as a predictor of malignancy. Radiology. 1999; 211:845–850.

18. Bent CK, Bassett LW, D’Orsi CJ, Sayre JW. The positive predictive value of BI-RADS microcalcification descriptors and final assessment categories. AJR Am J Roentgenol. 2010; 194:1378–1383.

19. Jiang Y, Nishikawa RM, Schmidt RA, Toledano AY, Doi K. Potential of computer-aided diagnosis to reduce variability in radiologists’ interpretations of mammograms depicting microcalcifications. Radiology. 2001; 220:787–794.

20. Berg WA, D’Orsi CJ, Jackson VP, Bassett LW, Beam CA, Lewis RS, et al. Does training in the Breast Imaging Reporting and Data System (BI-RADS) improve biopsy recommendations or feature analysis agreement with experienced breast imagers at mammography? Radiology. 2002; 224:871–880.

21. Blank RG, Wallis MG, Given-Wilson RM. Observer variability in cancer detection during routine repeat (incident) mammographic screening in a study of two versus one view mammography. J Med Screen. 1999; 6:152–158.

22. Ciccone G, Vineis P, Frigerio A, Segnan N. Inter-observer and intra-observer variability of mammogram interpretation: a field study. Eur J Cancer. 1992; 28A:1054–1058.
