Abstract

Objectives

This research was conducted to identify both users' service requirements for health information websites (HIWs) and the key functional elements for running HIWs. Using the quality function deployment framework, the derived service attributes (SAs) are mapped onto the suppliers' functional characteristics (FCs) to identify the FCs most critical to user satisfaction.

Methods

Using survey data from 228 respondents, the SAs, FCs, and their relationships were analyzed with multivariate statistical methods, including principal component factor analysis, discriminant analysis, and correlation analysis. Simple and compound FC priorities were derived by matrix calculation.

Results

Nine SA factors and five FC factors were identified, and these served as the basis for the house of quality model. Based on the compound FC priorities, functional elements related to security and privacy and to usage support should receive top priority when enhancing HIWs.

Conclusions

The quality function deployment framework can improve the FCs of HIWs in an effective, structured manner, and it can also be used to identify critical success factors and their strategic implications for enhancing the service quality of HIWs. Website managers could therefore improve website operations efficiently by considering this study's results.

As living standards rise and medical technology advances, public concern about health care is shifting from disease treatment to health promotion and preventive medicine. People seek health information from medical experts and demand a wide range of medical knowledge [1]. Accordingly, supplier-centered health care systems are rapidly becoming user-oriented. In this changing environment, the internet has emerged as a major information source because it provides easy access to a variety of health information that was not available to the public in the past. As a result, health information websites (HIWs) have become the most effective medium for facilitating communication in the health care sector and satisfying the public's need for health information.

Various sources have confirmed that HIWs play a vital role in providing health information to consumers. From the provider perspective, an estimated 20,000 HIWs on the internet offer information, expert advice, and even drug prescriptions [2]. From the consumer perspective, previous studies suggest that from more than half to as many as eighty percent of internet users go online for health and medical advice [3-5]. Users even felt that the internet influenced their decision-making and improved their communication with physicians [6]. As an emerging field, e-health therefore offers many exciting prospects to both health professionals and health consumers [7].

Given the unique nature and importance of the services that HIWs provide, HIWs should be able to identify and meet customer needs and thereby provide high-quality health information. However, few HIWs emphasize the quality of information processing and distribution, and most give insufficient consideration to service quality from the users' perspective. In fact, the trustworthiness of HIWs is often questioned because some provide misleading hype or information for which accountability is unclear [8,9].

In this situation, the need to evaluate and improve the services of HIWs is growing. Several programs and guidelines have recently been proposed to evaluate HIWs for specific, practical purposes. For example, in a third-party certification program, a neutral institution (the third party) presents appropriate quality management criteria for website evaluation. Such programs provide various methodologies and approaches for evaluating website operators as well as information providers, including medical experts.

There are, however, few academic studies on measuring and improving the service quality of these websites. Among the few studies on HIW service quality, Chung and Park [10], Kang et al. [8], and Kim et al. [11] suggest criteria for evaluating services from the user's perspective. For example, Chung and Park [10] list eight criteria (intent, relevance, accuracy, trustworthiness, ease of use, authority, backflow, and continuity) together with 32 other criteria. However, most existing studies take the supplier's perspective and focus on information characteristics and technical features required of website operators, as in the Department of Health and Human Services [12] and Risk and Petersen [13], and barely address users' requirements for HIWs.

However, these generic methodologies may fail to capture industry-specific features precisely. Furthermore, they focus only on the demand side, which limits their ability to give clear guidance to website operators. To overcome these limitations, a more balanced approach is needed that incorporates both the demand side and the supply side of the health service industry: that is, the functional aspects of service provision in HIWs together with an in-depth understanding of the service elements that customers consider critical when evaluating HIWs.

Therefore, we employ the quality function deployment (QFD) framework to translate customers' requirements into specific service design factors [14-18]. The QFD graphical display, called the house of quality (HoQ), provides the framework for the QFD process. Based on the HoQ model, we derive service attributes (SAs) perceived by website users and functional characteristics (FCs) for website design and operations. Statistical analyses are then conducted to link SAs and FCs, mapping users' requirements onto suppliers' practices in a structured manner. The final purpose of this paper is to derive critical success factors, together with their strategic implications, for enhancing the service quality of HIWs.

We propose an HoQ-based framework for HIWs that shows how to improve service quality and which operational functions (FCs) to focus on. The HoQ is built upon two main components, the voice of the customer (VoC) and the voice of the engineer (VoE), which are embodied in SAs and FCs, respectively. The relationship matrix at the heart of the HoQ model is constructed from cause-effect relationships, which are best described as a mapping from the VoE (FC) space into the VoC (SA) space.

Specifically, the HoQ begins with the customer in order to fully identify customers' wants. By operationalizing the VoC, one constructs SAs so that overall customer concerns are clearly and effectively represented. The VoC is then translated into corresponding FCs, which represent the means by which SAs are addressed. These technical requirements (FCs) are listed at the top of the framework, and each FC may affect one or more SAs. After SAs and FCs are determined, the HoQ continues by establishing the relationships between them.

The primary outcome of the HoQ is the set of FC priorities, recorded at the bottom of the matrix. They show which FCs matter most, so that effort can be concentrated on them for effective quality improvement. By prioritizing FCs, one can respond better to the customer needs that SAs represent. From now on, we use the terms VoC and SA interchangeably; the same applies to VoE and FC.
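The bottom row of the HoQ can be illustrated with a short sketch. It assumes the textbook QFD formula, in which an FC's simple priority is the importance-weighted sum of its column in the relationship matrix, normalized to percentages; the paper states only that priorities were derived by matrix calculation. The function name `simple_priority` and the toy numbers are hypothetical, not values from Tables 3 or 6.

```python
import numpy as np

def simple_priority(sa_weights, body):
    """Simple FC priority: importance-weighted column sums of the HoQ body.

    sa_weights : (m,) importance of each SA (the left wall of the house)
    body       : (m, n) SA-by-FC relationship strengths (the room);
                 non-significant cells are assumed to be already set to 0
    Returns an (n,) vector of FC priorities expressed as percentages.
    """
    w = np.asarray(sa_weights, dtype=float)
    r = np.asarray(body, dtype=float)
    raw = w @ r                      # sum_i w_i * r_ij for each FC j
    return 100.0 * raw / raw.sum()   # relative importance in percent

# Hypothetical toy house: 3 SAs x 3 FCs (not the paper's data)
weights = [0.5, 0.3, 0.2]
body = [[0.4, 0.2, 0.0],
        [0.3, 0.3, 0.0],
        [0.2, 0.0, 0.1]]
print(np.round(simple_priority(weights, body), 1))
```

Normalizing to percentages makes the bottom row directly comparable across FCs, which is how relative importance is reported later in the text.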

To collect quantitative data through the survey, candidate question items on the VoC and VoE had to be listed in advance. The question items are categorized into 1) items assessing the importance of service quality factors associated with HIW usage, 2) items measuring functional and/or technical features of the websites, and 3) additional items on respondents' demographic characteristics (Table 1).

Initial question items for functional elements were first derived by reviewing and benchmarking 6 global and 8 domestic HIWs, which were carefully chosen on the basis of previous studies such as Chang et al. [19] and Kim et al. [11]. The items were then organized and refined based on a service flow analysis of those websites. Finally, a series of in-depth expert panel discussions removed or elaborated inappropriate and overlapping features or descriptions among the candidate functional elements. As a result, 17 question items were selected for the assessment of functional elements.

A professional survey institution conducted an extensive survey to quantify the value of each element of the HoQ model. The target population was defined as adults aged 18 to 49, because people under 18 or over 49 were deemed to have little experience with health information websites and thus to be unlikely to provide reliable responses. This is consistent with the findings of Chang et al. [20], in which over 80% of people in their 20s and 65% of those in their 30s access HIWs, while those aged 40 or above use media other than the internet to search for health information.

A nationally representative random sample of adults was contacted through an online survey. The target sample size was 250, allocated evenly across gender and age groups. From a panel pool of 400,000 registered enrollees, 1,000 adults were selected by stratified sampling by gender and age group, assuming a response rate of 25%. The survey continued until each stratum reached its allocated number of responses, for a total of 250.
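The sample-size arithmetic in this paragraph is easy to reproduce: 250 completes at an assumed 25% response rate requires 250 / 0.25 = 1,000 invitations. The sketch below also spreads the target as evenly as possible across strata; the six strata (two genders by three age bands) and the helper names are hypothetical, since the paper does not list its exact strata.

```python
import math

def balanced_allocation(target, strata):
    """Split `target` completes as evenly as possible across strata.

    Any remainder is assigned one at a time to the first strata in the list.
    """
    base, extra = divmod(target, len(strata))
    return {s: base + (1 if i < extra else 0) for i, s in enumerate(strata)}

def contacts_needed(completes, response_rate):
    """Panel members to invite in order to expect `completes` responses."""
    return math.ceil(completes / response_rate)

# Hypothetical strata: gender x age band (labels are illustrative only)
strata = [(g, a) for g in ("male", "female") for a in ("18-29", "30-39", "40-49")]
alloc = balanced_allocation(250, strata)
invites = {s: contacts_needed(n, 0.25) for s, n in alloc.items()}
print(alloc)
print(sum(invites.values()))
```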

Finally, 228 completed questionnaires without missing data were used for further analysis. Table 1 presents the demographic characteristics of the 228 respondents, 50% of whom are male and 50% female. By age group, those aged 18-29 account for 38.8%, 30-39 for 33.2%, and 40 or above for 28.0%. By region, those living in large cities account for 60.8%, mid-sized and small cities for 32.8%, and remote areas for 6.4%. By occupation, office workers account for 26.0%, homemakers for 18.4%, students for 17.6%, and professionals for 12.4%, while public servants, teachers, service workers, production workers, and technicians make up the remainder. Cronbach's alpha values for all the questions were 0.98 or above, supporting the reliability of the survey results.
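For readers who want to check internal consistency on their own data, the standard Cronbach's alpha formula is sketched below. The paper computed its values in SAS; this NumPy version and the toy response matrix are illustrative assumptions, not the study's data.

```python
import numpy as np

def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)
    Sample variances (ddof=1) are used throughout.
    """
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    item_var = x.var(axis=0, ddof=1).sum()     # sum of per-item variances
    total_var = x.sum(axis=1).var(ddof=1)      # variance of respondents' totals
    return k / (k - 1) * (1.0 - item_var / total_var)

# Hypothetical 5-point Likert answers: 5 respondents x 3 items
likert = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 5]]
print(round(cronbach_alpha(likert), 3))
```

Values close to 1 mean the items move together; identical items give exactly 1.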

SAS version 9.1 (SAS Institute, Cary, NC, USA) was used for the statistical analyses. As a preliminary step, a frequency analysis of the questionnaire was performed to obtain the mean and standard deviation of each question and to identify important survey items. At the start of the HoQ deployment, principal component factor analysis with orthogonal rotation was used to construct SAs and FCs. To prioritize SAs, discriminant analysis was performed with two separate groups of users: the satisfied and the dissatisfied. To identify the relationships between SAs and FCs, Pearson correlation coefficients were calculated for each SA-FC pair. Finally, simple and compound FC priorities were computed.
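A minimal NumPy sketch of principal component factor analysis with orthogonal rotation follows. It assumes varimax as the orthogonal rotation and the eigenvalue-greater-than-one retention rule; the paper names neither, and SAS offers other choices. The synthetic two-factor data below stand in for the survey responses.

```python
import numpy as np

def pca_loadings(data, min_eigenvalue=1.0):
    """Principal component loadings from the item correlation matrix.

    Keeps components whose eigenvalue exceeds `min_eigenvalue`
    (Kaiser criterion, assumed here).
    """
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    eigval, eigvec = np.linalg.eigh(corr)          # ascending order
    order = np.argsort(eigval)[::-1]               # sort descending
    eigval, eigvec = eigval[order], eigvec[:, order]
    keep = eigval > min_eigenvalue
    return eigvec[:, keep] * np.sqrt(eigval[keep]), eigval

def varimax(loadings, gamma=1.0, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (Kaiser) via the usual SVD iteration."""
    phi = np.asarray(loadings, dtype=float)
    p, k = phi.shape
    rot = np.eye(k)
    d = 0.0
    for _ in range(max_iter):
        d_old = d
        lam = phi @ rot
        target = lam ** 3 - (gamma / p) * lam @ np.diag(np.sum(lam ** 2, axis=0))
        u, s, vh = np.linalg.svd(phi.T @ target)
        rot = u @ vh                               # nearest orthogonal matrix
        d = s.sum()
        if d_old != 0 and d / d_old < 1 + tol:     # criterion stopped improving
            break
    return phi @ rot, rot

# Synthetic responses: 6 items driven by 2 latent factors plus noise
rng = np.random.default_rng(0)
f = rng.normal(size=(228, 2))
items = np.column_stack([f[:, 0]] * 3 + [f[:, 1]] * 3) + 0.5 * rng.normal(size=(228, 6))
load, eig = pca_loadings(items)
rotated, rot = varimax(load)
print(np.round(rotated, 2))
```

Rotation leaves each item's communality (row sum of squared loadings) unchanged, which is a handy sanity check; the eigenvalues of the correlation matrix also sum to the number of items.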

After this filtering process, principal component analysis of the responses to 53 questions identified nine key SA factors. Based on the common, representative feature of the question items assigned to each factor, we named these nine SAs information reliability (IR), information richness (IC), customer care (CC), ease-of-use (EU), recency (RC), security and integrity (SI), publicity (P), responsiveness (RS), and transparency (T).

Table 2 presents the key statistical characteristics and brief explanations of the 9 SAs. For example, IR has the largest eigenvalue (4.53), which implies that this factor explains about 8.5% of the total variation. IR includes the question items on 'information proven by medical profession' and 'accuracy of information'. In contrast, T has the smallest eigenvalue (2.01) and is constructed from the question items on identifying the website operator or information provider.
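Assuming the components were extracted from the correlation matrix of the 53 standardized items, the total variance equals the number of items, so a factor's share of the variance is its eigenvalue divided by 53:

```latex
\text{share}(\mathrm{IR}) = \frac{\lambda_{\mathrm{IR}}}{53} = \frac{4.53}{53} \approx 0.0855,
\qquad
\text{share}(\mathrm{T}) = \frac{\lambda_{\mathrm{T}}}{53} = \frac{2.01}{53} \approx 0.0379
```

That is, roughly 8.5% and 3.8% of the total variance, respectively.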

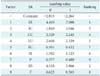

To rank SAs by their importance for user satisfaction, we conducted a discriminant analysis. The ranking is determined by the magnitude of the corresponding coefficients of the discriminant function for the satisfied users. Table 3 summarizes the result and the SA priority ranking: IR takes first position, followed closely by RS. EU comes next, followed by CC, IC, and SI. Meanwhile, RC, T, and P appear to have the least effect on user satisfaction.
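The ranking in Table 3 rests on the coefficients of the discriminant function for the satisfied group. Assuming this is the per-group linear discriminant function computed with a pooled covariance matrix (coefficient vector S^-1 m_g, the form SAS PROC DISCRIM reports), a NumPy sketch looks like this. The synthetic scores, the three-SA subset, the helper name, and ranking by signed coefficient value are illustrative assumptions.

```python
import numpy as np

def linear_discriminant_functions(x, groups):
    """Per-group linear discriminant functions with a pooled covariance matrix.

    For each group g with mean vector m_g and pooled within-group covariance S:
        coefficients_g = S^{-1} m_g
        constant_g     = -0.5 * m_g' S^{-1} m_g
    Equal priors are assumed, so no log-prior term is added.
    """
    x = np.asarray(x, dtype=float)
    groups = np.asarray(groups)
    labels = np.unique(groups)
    n, p = x.shape
    pooled = np.zeros((p, p))
    means = {}
    for g in labels:
        xg = x[groups == g]
        means[g] = xg.mean(axis=0)
        centered = xg - means[g]
        pooled += centered.T @ centered
    pooled /= n - len(labels)                      # pooled within-group covariance
    funcs = {}
    for g in labels:
        coef = np.linalg.solve(pooled, means[g])   # S^{-1} m_g without inverting S
        funcs[g] = (coef, -0.5 * means[g] @ coef)
    return funcs, pooled, means

# Synthetic SA scores and a satisfied/dissatisfied label (not the survey data)
rng = np.random.default_rng(1)
sa_names = ["IR", "RS", "EU"]                      # illustrative subset of SAs
scores = rng.normal(size=(228, 3))
satisfied = (scores @ np.array([1.0, 0.8, 0.5]) + rng.normal(size=228)) > 0
labels = np.where(satisfied, "satisfied", "dissatisfied")
funcs, pooled, means = linear_discriminant_functions(scores, labels)
coef, const = funcs["satisfied"]
ranking = [sa_names[i] for i in np.argsort(coef)[::-1]]   # largest coefficient first
print(ranking)
```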

Principal component analysis of the 17 question items on the functional elements of HIWs yielded five FCs, which we named security and privacy (Sp), usage support (Us), user management (Um), search (Sr), and recommendation (Rm). Table 4 presents the statistical characteristics of each FC with a brief description.

Although constructing FCs with the principal component method yields mutually orthogonal factors, the interdependencies among FCs cannot be completely eliminated. Table 5 presents the Pearson correlation coefficient for each pair of FCs; these values constitute the roof of the HoQ model.
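Filling the roof amounts to computing pairwise Pearson correlations among FC scores and keeping one triangle. Because the respondents' FC scores are not reproduced here, the sketch below uses synthetic scores; the helper name `roof_matrix` is hypothetical.

```python
import numpy as np

def roof_matrix(fc_scores, fc_names):
    """Pairwise Pearson correlations among FC scores (the HoQ roof).

    Returns a dict mapping each pair (a, b), with a listed before b in
    `fc_names`, to its correlation; only the upper triangle is kept,
    mirroring the triangular roof.
    """
    corr = np.corrcoef(np.asarray(fc_scores, dtype=float), rowvar=False)
    rows, cols = np.triu_indices(len(fc_names), k=1)
    return {(fc_names[a], fc_names[b]): float(corr[a, b]) for a, b in zip(rows, cols)}

# Synthetic scores for 228 respondents on the five FCs (not the survey data)
rng = np.random.default_rng(3)
names = ["Sp", "Us", "Um", "Sr", "Rm"]
scores = rng.normal(size=(228, 5))
scores[:, 1] += 0.5 * scores[:, 0]                 # make Sp and Us correlated
roof = roof_matrix(scores, names)
for pair, r in roof.items():
    print(pair, round(r, 2))
```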

Table 6 presents the correlations between SAs and FCs, which constitute the main body of the HoQ model. For example, 0.22 in cell (3, 2) of the matrix is the Pearson correlation coefficient between CC (SA) and Us (FC). All significant correlations are highlighted in the table; non-significant ones are dropped from the final HoQ matrix. All columns except the one for Um are dense. In particular, the columns for Sp and Us are the densest, which implies that these FCs are correlated with all or most SAs. In contrast, Um appears to be directly connected only with IC. The overall effects of this matrix structure on the FC priorities are discussed below.
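The filtering rule for the HoQ body (keep significant correlations, drop the rest) can be sketched as follows. The exact t-based test that SAS reports needs a Student-t distribution function, so this standard-library version swaps in the Fisher z approximation, which agrees closely at n = 228. The synthetic scores, the helper names, and the 0.05 cutoff are assumptions for illustration.

```python
import math
import numpy as np

def fisher_p(r, n):
    """Two-sided p-value for a Pearson r via the Fisher z approximation."""
    r = max(min(r, 1 - 1e-12), -1 + 1e-12)      # keep atanh finite at |r| = 1
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

def hoq_body(sa_scores, fc_scores, alpha=0.05):
    """Return (all SA-by-FC correlations, body with non-significant cells set to 0)."""
    sa = np.asarray(sa_scores, dtype=float)
    fc = np.asarray(fc_scores, dtype=float)
    n, m = sa.shape
    full = np.corrcoef(sa, fc, rowvar=False)[:m, m:]   # SA rows x FC columns
    body = full.copy()
    for i in range(full.shape[0]):
        for j in range(full.shape[1]):
            if fisher_p(full[i, j], n) >= alpha:
                body[i, j] = 0.0                         # dropped from the HoQ
    return full, body

# Synthetic scores for 228 respondents (not the survey data)
rng = np.random.default_rng(2)
fc = rng.normal(size=(228, 2))
sa = np.column_stack([0.6 * fc[:, 0] + rng.normal(size=228),  # tied to FC 0
                      rng.normal(size=228)])                   # unrelated SA
full, body = hoq_body(sa, fc)
print(np.round(body, 2))
```

Under this approximation, a coefficient of 0.22 with n = 228, like the CC-Us cell, has p below 0.001, whereas a coefficient of 0.05 would be dropped.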

Figure 1 summarizes the HoQ applied to HIWs. SAs and their priorities from Tables 2 and 3 are placed on the two sides of the HoQ model. Each FC from Table 4 defines a column of the HoQ matrix. The correlation values in Table 5 are copied into the corresponding cells of the roof. Finally, Table 6 constitutes the body of the HoQ model.

The two rows at the bottom of Figure 1 present the key outcomes of the HoQ model in this study. The Sp functional group is identified as the most critical factor, followed by Us, Sr, and Rm, which are of similar importance. In contrast, Um appears negligible for enhancing user satisfaction. Moreover, in terms of simple priority, Sp dominates the other FCs and, in particular, outweighs Um by almost 13 times.

However, the pattern of FC priorities changes when the interrelationships among FCs are taken into account. Although the priority order is preserved, the importance of Um jumps sharply, so the gap between the most important FC (Sp) and the least important (Um) narrows considerably. The compound priority of Sp is only about twice that of Um, so the gap in relative importance shrinks roughly six-fold (from about 13:1 to about 2:1). Accordingly, the dominance of Sp disappears; instead, Us doubles and becomes comparable to Sp.

Despite widespread interest in health and medical information on the internet, there has been little effort to establish evaluation criteria for measuring the service quality of HIWs reliably and effectively. Compared with preceding studies, however, this study identified several further attributes that reflect the unique nature of HIWs.

Among the SAs, factors such as RC, P, and T have hardly been considered in the relevant literature and can therefore be regarded as what distinguishes user requirements for HIWs from those for other websites such as online shopping malls. These service attributes reflect users' need for the publicity and professional expertise that HIWs should have. Users consider not only content-related features but also the method of content delivery to be important. Users regard IR as the most important principal component, a finding also supported by existing literature (e.g., Jadad and Gagliardi [21] and Risk and Petersen [13]).

We also identified key functional attributes of HIWs to determine how they relate to customer requirements. Some existing studies have also identified key functional elements of HIWs (e.g., Pealer and Dorman [22]), but not quantitatively from objective data. Those studies identified functional elements from the website administrator's point of view and therefore have clear limitations in identifying core functional features that improve website performance by incorporating user requirements. In contrast, the FCs identified in this research were constructed to meet customer requirements and constitute an essential building block of the HoQ model.

FC priority, the final outcome of the HoQ model, was determined by quantifying each FC's contribution to meeting user requirements. This research used two options to calculate FC priority: the simple FC priority, which ignores correlations among FCs, and the compound FC priority, which explicitly reflects them. The FC priority ranking, however, was preserved in both cases. Given this ranking, website administrators should focus on managing the FCs associated with Sp and Us, as they are the most effective in meeting user requirements.
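One common way to make priorities reflect the roof, assumed here because the paper does not give its formula, is to multiply the simple-priority vector by the FC correlation matrix (ones on the diagonal), so that each FC also receives credit from the FCs it is correlated with. The hypothetical sketch below uses toy numbers to show the qualitative pattern described in the results: the ranking is preserved while the gap between the top and bottom FCs narrows.

```python
import numpy as np

def simple_priority(sa_weights, body):
    """Importance-weighted column sums of the HoQ body (raw scores)."""
    return np.asarray(sa_weights, dtype=float) @ np.asarray(body, dtype=float)

def compound_priority(simple, roof):
    """Spread each FC's raw priority to correlated FCs through the roof.

    roof is a symmetric (n, n) FC correlation matrix with ones on the
    diagonal, so compound_j = sum_k simple_k * roof[k, j].
    """
    return np.asarray(simple, dtype=float) @ np.asarray(roof, dtype=float)

def to_percent(v):
    v = np.asarray(v, dtype=float)
    return 100.0 * v / v.sum()

# Hypothetical toy house with 3 SAs and 3 FCs (not the paper's data)
weights = [0.5, 0.3, 0.2]
body = [[0.4, 0.2, 0.0],
        [0.3, 0.3, 0.0],
        [0.2, 0.0, 0.1]]
roof = [[1.0, 0.3, 0.4],
        [0.3, 1.0, 0.5],
        [0.4, 0.5, 1.0]]
simple = simple_priority(weights, body)
compound = compound_priority(simple, roof)
print("simple   %:", np.round(to_percent(simple), 1))
print("compound %:", np.round(to_percent(compound), 1))
```

Under this formulation a negative roof correlation would subtract credit; other QFD variants treat the roof differently, so the choice should match whatever the analysis actually used.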

However, as Figure 1 shows, the numeric values of the FC priorities change significantly. For example, Sp ranks first under both the simple and compound methods, but its relative importance decreases from 32.3% (simple) to 25.6% (compound). Conversely, the relative importance of Um, the least important FC in the simple case, rises sharply from 2.5% (simple) to 11.6% (compound). These changes stem from the structure of the HoQ body matrix and the roof (triangular) matrix.
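The Sp-to-Um ratios discussed in the results follow directly from these reported percentages; a quick check:

```python
# Relative importance (%) of the top and bottom FCs, as reported in the text
simple = {"Sp": 32.3, "Um": 2.5}
compound = {"Sp": 25.6, "Um": 11.6}

ratio_simple = simple["Sp"] / simple["Um"]        # Sp outweighs Um under simple priority
ratio_compound = compound["Sp"] / compound["Um"]  # ...and under compound priority
shrink = ratio_simple / ratio_compound            # how much the gap narrows

print(f"Sp/Um simple:   {ratio_simple:.1f}")
print(f"Sp/Um compound: {ratio_compound:.1f}")
print(f"gap shrinks by: {shrink:.1f}x")
```

With unrounded ratios (about 12.9 and 2.2), the gap between Sp and Um narrows roughly six-fold.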

This gap between simple and compound FC priorities offers useful insight for website management: when assessing the effect of improving certain FCs, the correlations among FCs should be taken into account. Website managers could improve website operations efficiently by considering these synergy effects. In this light, the HoQ framework helps improve the FCs of HIWs in an effective, structured manner.

Lastly, we note some limitations of this research. As in most survey-based research, SAs and their importance to user satisfaction could vary with respondent characteristics. For example, when the analysis is based only on responses from people in their 20s or 30s, who are familiar with the internet, IR is valued more highly than in the other age groups. Accordingly, it would have been statistically more reasonable to subdivide the survey items for IR and regroup them into reliability and publicity. These differences indicate the need for detailed analysis of specific user segments when an HIW targets a specific age group.

In conclusion, this research first defined the core criteria (SAs) for evaluating the service quality of HIWs through a comprehensive, in-depth study including a literature review and a user survey. Furthermore, because the research aims to facilitate functional improvement of the websites, we developed operations-related FCs. Finally, with the SAs and FCs identified above, we estimated the correlations between them statistically under the HoQ framework, thereby determining the key FCs critical to meeting user requirements.

Figures and Tables

Figure 1

Summary of the HoQ model for health information websites.

SA: service attribute, FC: functional characteristic, Sp: security and privacy, Us: usage support, Um: user management, Sr: search, Rm: recommendation, IR: information reliability, IC: information richness, CC: customer care, EC: ease-of-use, RC: recency, SI: security and integrity, P: publicity, RS: responsiveness, T: transparency.

Table 6

House of quality matrix (relationships between service attributes and functional characteristics)

Sp: security and privacy, Us: usage support, Um: user management, Sr: search, Rm: recommendation, IR: information reliability, IC: information richness, CC: customer care, EC: ease-of-use, RC: recency, SI: security and integrity, P: publicity, RS: responsiveness, T: transparency.

Pearson correlation coefficient (p value). *p < 0.05, **p < 0.01, ***p < 0.001.

Acknowledgements

The present work was supported by the 2007 Research Abroad Program for Professors funded by Kyung Hee University.

References

1. Eysenbach G. Consumer health informatics. BMJ. 2000. 320:1713–1716.

2. Dyer KA. Ethical challenges of medicine and health on the internet: a review. J Med Internet Res. 2001. 3:E23.

3. Baker L, Wagner TH, Singer S, Bundorf MK. Use of the internet and e-mail for health care information: results from a national survey. JAMA. 2003. 289:2400–2406.

4. Ybarra ML, Suman M. Help seeking behavior and the internet: a national survey. Int J Med Inform. 2006. 75:29–41.

5. Fox S, editor. Online health search 2006: Most internet users start at a search engine when looking for health information online: very few check the source and date of the information they find [Internet]. Washington (DC): Pew Internet & American Life Project; 2006. cited 2010 Mar 16. Available from: http://www.pewinternet.org/~/media//Files/Reports/2006/PIP_Online_Health_2006.pdf.pdf.

6. Sillence E, Briggs P, Harris PR, Fishwick L. How do patients evaluate and make use of online health information? Soc Sci Med. 2007. 64:1853–1862.

7. Rodrigues RJ. Ethical and legal issues in interactive health communications: a call for international cooperation. J Med Internet Res. 2000. 2:E8.

8. Kang N, Kim J, Tack G, Hyun T. Criteria for the websites in Korean with health information on the internet. J Korean Soc Med Inform. 1999. 5:119–124.

9. Shin JH, Seo HG, Kim CH, Koh JS, Woo KH. The evaluation of scientific reliability of medical information on WWW in Korea through analyzing hepatitis information. J Korean Soc Med Inform. 2000. 6:73–88.

10. Chung YC, Park HA. Development of a health information evaluation system on the internet. J Korean Soc Med Inform. 2000. 6:53–66.

11. Kim J, Kim E, Ko I, Kang SM. An evaluation study of hypertension information providing web sites on the internet. J Korean Soc Med Inform. 2003. 9:45–52.

12. Centers for Medicare & Medicaid Services. Health insurance reform: security standards. Final rule. Fed Regist. 2003. 68:8334–8381.

13. Risk A, Petersen C. Health information on the internet: quality issues and international initiatives. JAMA. 2002. 287:2713–2715.

14. Franceschini F, Rossetto S. QFD: the problem of comparing technical engineering design requirements. Res Eng Design. 1995. 7:270–278.

15. Franceschini F, Rupil A. Rating scales and prioritization in QFD. Int J Qual Reliab Manag. 1999. 16:85–97.

16. Franceschini F, Rossetto S. Quality function deployment: how to improve its use. Total Qual Manag. 1998. 9:491–500.

17. Hauser JR, Clausing D. The house of quality. Harv Bus Rev. 1988. 66:63–73.

18. Tan KC, Xie M, Chia E. Quality function deployment and its use in designing information technology system. Int J Qual Reliab Manag. 1998. 15:634–645.

19. Chang H, Shim J, Kim Y. Sources of health information by consumer's characteristics. J Korean Soc Med Inform. 2004. 10:415–427.

20. Chang H, Kim D, Shim J. Attributes of user-centered evaluation for health information websites. J Korean Soc Med Inform. 2004. 10:429–440.

21. Jadad A, Gagliardi A. Rating health information on the internet: navigating to knowledge or to Babel? JAMA. 1998. 279:611–614.

22. Pealer LN, Dorman SM. Evaluating health related web sites. J Sch Health. 1997. 67:232–235.
