1. Macaskill P, Gatsonis C, Deeks JJ, Harbord RM, Takwoingi Y. Chapter 10: Analysing and Presenting Results. In : Deeks JJ, Bossuyt PM, Gatsonis C, editors. The Cochrane Collaboration. Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy Version 1.0. 2010. Available from:

http://srdta.cochrane.org/.

2. Tunis AS, McInnes MD, Hanna R, Esmail K. Association of study quality with completeness of reporting: have completeness of reporting and quality of systematic reviews and meta-analyses in major radiology journals changed since publication of the PRISMA statement? Radiology. 2013; 269:413–426. PMID:

23824992.

3. Irwig L, Tosteson AN, Gatsonis C, Lau J, Colditz G, Chalmers TC, et al. Guidelines for meta-analyses evaluating diagnostic tests. Ann Intern Med. 1994; 120:667–676. PMID:

8135452.

4. Trikalinos TA, Balion CM, Coleman CI, Griffith L, Santaguida PL, Vandermeer B, et al. Chapter 8: meta-analysis of test performance when there is a "gold standard". J Gen Intern Med. 2012; 27(Suppl 1):S56–S66. PMID:

22648676.

5. Lee J, Kim KW, Choi SH, Huh J, Park SH. Systematic review and meta-analysis of studies evaluating diagnostic test accuracy: a practical review for clinical researchers--part ii. statistical methods of meta-analysis. Korean J Radiol. 2015; 16:1188–1196.

6. Jaeschke R, Guyatt GH, Sackett DL. The Evidence-Based Medicine Working Group. Users' guides to the medical literature. III. How to use an article about a diagnostic test. B. What are the results and will they help me in caring for my patients? JAMA. 1994; 271:703–707. PMID:

8309035.

7. Lee YJ, Lee JM, Lee JS, Lee HY, Park BH, Kim YH, et al. Hepatocellular carcinoma: diagnostic performance of multidetector CT and MR imaging-a systematic review and meta-analysis. Radiology. 2015; 275:97–109. PMID:

25559230.

8. Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015; 4:1. PMID:

25554246.

9. Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007; 7:10. PMID:

17302989.

10. Sampson M, Barrowman NJ, Moher D, Klassen TP, Pham B, Platt R, et al. Should meta-analysts search Embase in addition to Medline? J Clin Epidemiol. 2003; 56:943–955. PMID:

14568625.

11. Staunton M. Evidence-based radiology: steps 1 and 2--asking answerable questions and searching for evidence. Radiology. 2007; 242:23–31. PMID:

17185659.

12. Moher D, Liberati A, Tetzlaff J, Altman DG. the PRISMA statement. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009; 151:264–269. W64. PMID:

19622511.

13. Jones CM, Ashrafian H, Darzi A, Athanasiou T. Guidelines for diagnostic tests and diagnostic accuracy in surgical research. J Invest Surg. 2010; 23:57–65. PMID:

20233006.

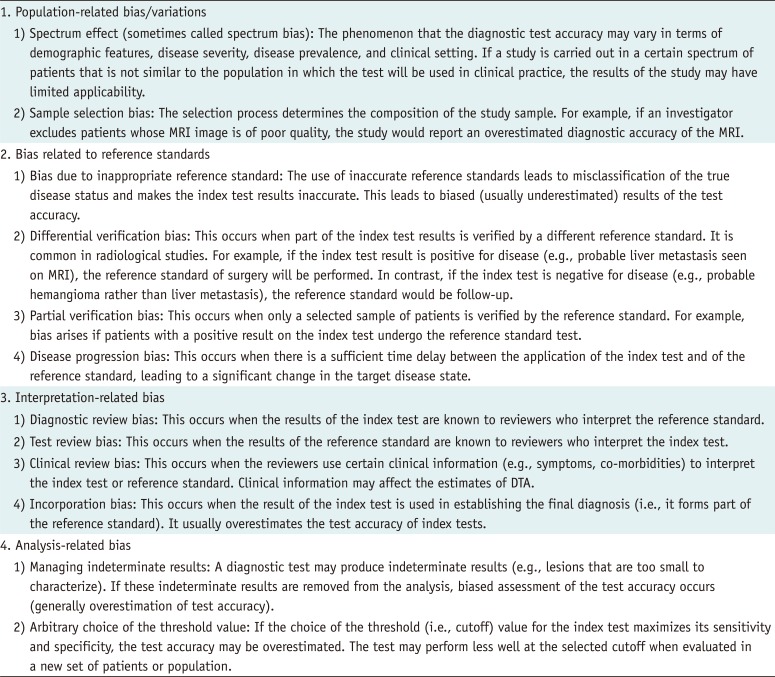

14. Whiting P, Rutjes AW, Reitsma JB, Glas AS, Bossuyt PM, Kleijnen J. Sources of variation and bias in studies of diagnostic accuracy: a systematic review. Ann Intern Med. 2004; 140:189–202. PMID:

14757617.

15. Dodd JD. Evidence-based practice in radiology: steps 3 and 4--appraise and apply diagnostic radiology literature. Radiology. 2007; 242:342–354. PMID:

17255406.

16. Obuchowski NA. Special Topics III: bias. Radiology. 2003; 229:617–621. PMID:

14593188.

17. Sica GT. Bias in research studies. Radiology. 2006; 238:780–789. PMID:

16505391.

18. Whiting P, Rutjes AW, Reitsma JB, Bossuyt PM, Kleijnen J. The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med Res Methodol. 2003; 3:25. PMID:

14606960.

19. Whiting PF, Rutjes AW, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011; 155:529–536. PMID:

22007046.

20. Wade R, Corbett M, Eastwood A. Quality assessment of comparative diagnostic accuracy studies: our experience using a modified version of the QUADAS-2 tool. Res Synth Methods. 2013; 4:280–286. PMID:

26053845.

21. Leeflang MM, Deeks JJ, Gatsonis C, Bossuyt PM. Cochrane Diagnostic Test Accuracy Working Group. Systematic reviews of diagnostic test accuracy. Ann Intern Med. 2008; 149:889–897. PMID:

19075208.

22. Reitsma JB, Glas AS, Rutjes AW, Scholten RJ, Bossuyt PM, Zwinderman AH. Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews. J Clin Epidemiol. 2005; 58:982–990. PMID:

16168343.

23. Halligan S, Altman DG. Evidence-based practice in radiology: steps 3 and 4--appraise and apply systematic reviews and meta-analyses. Radiology. 2007; 243:13–27. PMID:

17392245.

24. Deeks JJ. Systematic reviews in health care: systematic reviews of evaluations of diagnostic and screening tests. BMJ. 2001; 323:157–162. PMID:

11463691.

25. Devillé WL, Buntinx F, Bouter LM, Montori VM, de Vet HC, van der Windt DA, et al. Conducting systematic reviews of diagnostic studies: didactic guidelines. BMC Med Res Methodol. 2002; 2:9. PMID:

12097142.

26. Chappell FM, Raab GM, Wardlaw JM. When are summary ROC curves appropriate for diagnostic meta-analyses? Stat Med. 2009; 28:2653–2668. PMID:

19591118.

27. Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003; 327:557–560. PMID:

12958120.

28. Song F, Eastwood AJ, Gilbody S, Duley L, Sutton AJ. Publication and related biases. Health Technol Assess. 2000; 4:1–115.

29. Tatsioni A, Zarin DA, Aronson N, Samson DJ, Flamm CR, Schmid C, et al. Challenges in systematic reviews of diagnostic technologies. Ann Intern Med. 2005; 142(12 Pt 2):1048–1055. PMID:

15968029.

30. Mulherin SA, Miller WC. Spectrum bias or spectrum effect? Subgroup variation in diagnostic test evaluation. Ann Intern Med. 2002; 137:598–602. PMID:

12353947.

31. de Groot JA, Dendukuri N, Janssen KJ, Reitsma JB, Brophy J, Joseph L, et al. Adjusting for partial verification or workup bias in meta-analyses of diagnostic accuracy studies. Am J Epidemiol. 2012; 175:847–853. PMID:

22422923.

32. Leeflang MM, Moons KG, Reitsma JB, Zwinderman AH. Bias in sensitivity and specificity caused by data-driven selection of optimal cutoff values: mechanisms, magnitude, and solutions. Clin Chem. 2008; 54:729–737. PMID:

18258670.

33. Ewald B. Post hoc choice of cut points introduced bias to diagnostic research. J Clin Epidemiol. 2006; 59:798–801. PMID:

16828672.

34. Song F, Khan KS, Dinnes J, Sutton AJ. Asymmetric funnel plots and publication bias in meta-analyses of diagnostic accuracy. Int J Epidemiol. 2002; 31:88–95. PMID:

11914301.

35. Deeks JJ, Macaskill P, Irwig L. The performance of tests of publication bias and other sample size effects in systematic reviews of diagnostic test accuracy was assessed. J Clin Epidemiol. 2005; 58:882–893. PMID:

16085191.
