1. Chen H, Qi X, Cheng JZ, Heng PA. Deep contextual networks for neuronal structure segmentation. In : Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence; 2016 Feb 12–17; Phoenix, AZ, USA. Palo Alto, CA, USA: Association for the Advancement of Artificial Intelligence;2016.

2. Rao M, Stough J, Chi YY, Muller K, Tracton G, Pizer SM, et al. Comparison of human and automatic segmentations of kidneys from CT images. Int J Radiat Oncol Biol Phys. 2005; 61(3):954–960. PMID: 15708280.

3. Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. In : Proceedings of MICCAI 2015: Medical Image Computing and Computer-Assisted Intervention; 2015 Oct 5–9; Munich, Germany. Berlin: Springer;2015. p. 234–241.

4. Noh H, Hong S, Han B. Learning deconvolution network for semantic segmentation. In : Proceedings of the 2015 IEEE International Conference on Computer Vision; 2015 Dec 7–13; Santiago, Chile. New York, NY, USA: IEEE;2015. p. 1520–1528.

5. Zheng S, Jayasumana S, Romera-Paredes B, Vineet V, Su Z, Du D, et al. Conditional random fields as recurrent neural networks. In : Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV); 2015 Dec 11–18; Las Condes, Chile. New York, NY, USA: IEEE;2015. p. 1529–1537.

6. Shelhamer E, Long J, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Trans Pattern Anal Mach Intell. 2017; 39(4):640–651. PMID: 27244717.

7. Chen LC, Papandreou G, Kokkinos I, Murphy K, Yuille AL. Deeplab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans Pattern Anal Mach Intell. 2018; 40(4):834–848. PMID: 28463186.

8. Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. 3D U-net: learning dense volumetric segmentation from sparse annotation. In : Proceedings of MICCAI 2016: Medical Image Computing and Computer-Assisted Intervention; 2016 Oct 17–21; Athens, Greece. Berlin: Springer;2016. p. 424–432.

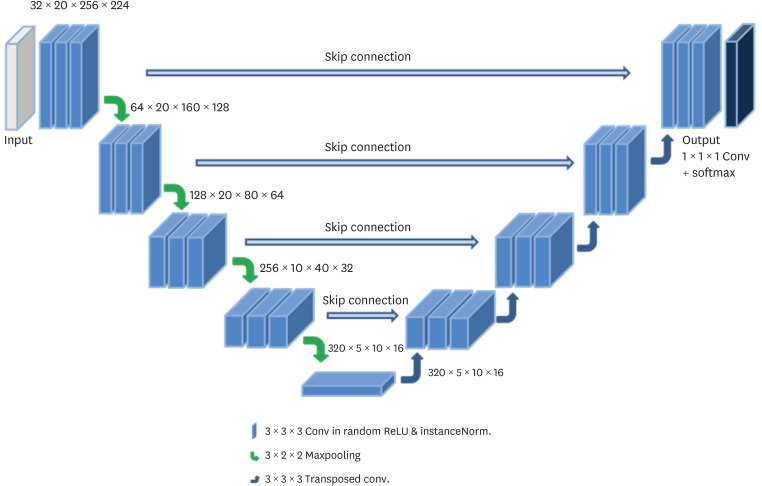

9. Isensee F, Jaeger PF, Kohl SAA, Petersen J, Maier-Hein KH. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat Methods. 2021; 18(2):203–211. PMID: 33288961.

10. Christ PF, Elshaer ME, Ettlinger F, Tatavarty S, Bickel M, Bilic P, et al. Automatic liver and lesion segmentation in CT using cascaded fully convolutional neural networks and 3D conditional random fields. In : Proceedings of MICCAI 2016: Medical Image Computing and Computer-Assisted Intervention; 2016 Oct 17–21; Athens, Greece. Berlin: Springer;2016. p. 415–423.

11. Cui S, Mao L, Jiang J, Liu C, Xiong S. Automatic semantic segmentation of brain gliomas from MRI images using a deep cascaded neural network. J Healthc Eng. 2018; 2018:4940593. PMID: 29755716.

12. He Y, Carass A, Yun Y, Zhao C, Jedynak BM, Solomon SD, et al. Towards topological correct segmentation of macular OCT from cascaded FCNs. Fetal Infant Ophthalmic Med Image Anal (2017). 2017; 10554:202–209. PMID: 31355372.

13. Roth HR, Oda H, Zhou X, Shimizu N, Yang Y, Hayashi Y, et al. An application of cascaded 3D fully convolutional networks for medical image segmentation. Comput Med Imaging Graph. 2018; 66:90–99. PMID: 29573583.

14. Tang M, Zhang Z, Cobzas D, Jagersand M, Jaremko JL. Segmentation-by-detection: a cascade network for volumetric medical image segmentation. In : Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging; 2018 Apr 4–7; Washington, D.C., USA. New York, NY, USA: IEEE;2018. p. 1356–1359.

15. Kasarla T, Nagendar G, Hegde G, Balasubramanian VN, Jawahar CV. Region-based active learning for efficient labeling in semantic segmentation. In : Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV); 2019 Jan 7–11; Waikoloa Village, HI, USA. New York, NY, USA: IEEE;2019.

16. Kim T, Lee KH, Ham S, Park B, Lee S, Hong D, et al. Active learning for accuracy enhancement of semantic segmentation with CNN-corrected label curations: evaluation on kidney segmentation in abdominal CT. Sci Rep. 2020; 10(1):366. PMID: 31941938.

17. Sourati J, Gholipour A, Dy JG, Kurugol S, Warfield SK. Active deep learning with fisher information for patch-wise semantic segmentation. Deep Learn Med Image Anal Multimodal Learn Clin Decis Support (2018). 2018; 11045:83–91. PMID: 30450490.

18. Wang G, Li W, Zuluaga MA, Pratt R, Patel PA, Aertsen M, et al. Interactive medical image segmentation using deep learning with image-specific fine tuning. IEEE Trans Med Imaging. 2018; 37(7):1562–1573. PMID: 29969407.

19. Wen S, Kurc TM, Hou L, Saltz JH, Gupta RR, Batiste R, et al. Comparison of different classifiers with active learning to support quality control in nucleus segmentation in pathology images. AMIA Jt Summits Transl Sci Proc. 2018; 2017:227–236. PMID: 29888078.

20. Yang L, Zhang Y, Chen J, Zhang S, Chen DZ. Suggestive annotation: a deep active learning framework for biomedical image segmentation. In : Proceedings of MICCAI 2017: Medical Image Computing and Computer-Assisted Intervention; 2017 Sep 11–13; Quebec City, QC, Canada. Berlin: Springer;2017. p. 399–407.

21. Penso M, Moccia S, Scafuri S, Muscogiuri G, Pontone G, Pepi M, et al. Automated left and right ventricular chamber segmentation in cardiac magnetic resonance images using dense fully convolutional neural network. Comput Methods Programs Biomed. 2021; 204:106059. PMID: 33812305.

22. Zhang X, Noga M, Martin DG, Punithakumar K. Fully automated left atrium segmentation from anatomical cine long-axis MRI sequences using deep convolutional neural network with unscented Kalman filter. Med Image Anal. 2021; 68:101916. PMID: 33285484.

24. Pham DL, Xu C, Prince JL. Current methods in medical image segmentation. Annu Rev Biomed Eng. 2000; 2(1):315–337. PMID: 11701515.

25. Zhang D, Meng D, Han J. Co-saliency detection via a self-paced multiple-instance learning framework. IEEE Trans Pattern Anal Mach Intell. 2017; 39(5):865–878. PMID: 27187947.
