Abstract

Objectives

Methods

Results

Conclusions

Figures and Tables

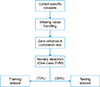

Figure 2

Flowchart of predictive model building and best-performing model selection. CQ: chi-square feature selection, WR: support vector machine-based wrapper feature selection, AUC: area under the curve, SP: specificity, SN: sensitivity, SMOTE: Synthetic Minority Over-sampling Technique.
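The flowchart names two feature selection routes: a chi-square filter (CQ) and a support vector machine-based wrapper (WR). The Python sketch below illustrates both under stated assumptions. Features and the label are assumed binary (0/1), the filter keeps a hypothetical top `k` features, and the wrapper uses greedy forward selection with a caller-supplied `evaluate` function standing in for the SVM-based scoring (for example, cross-validated AUC of a linear SVM). The search strategy and the names `chi_square_filter`, `forward_wrapper`, and `evaluate` are illustrative assumptions, not the authors' implementation.

```python
from typing import Callable, Dict, List, Sequence


def chi_square_score(feature: Sequence[int], label: Sequence[int]) -> float:
    """Pearson chi-square statistic for a binary feature vs. a binary label (2x2 table)."""
    # Observed counts: table[f][y] for feature value f and label value y (both 0/1).
    table = [[0, 0], [0, 0]]
    for f, y in zip(feature, label):
        table[f][y] += 1
    n = len(label)
    row = [sum(table[f]) for f in (0, 1)]
    col = [table[0][y] + table[1][y] for y in (0, 1)]
    stat = 0.0
    for f in (0, 1):
        for y in (0, 1):
            expected = row[f] * col[y] / n
            if expected > 0:  # skip empty rows/columns (e.g. a constant feature)
                stat += (table[f][y] - expected) ** 2 / expected
    return stat


def chi_square_filter(features: Dict[str, Sequence[int]], label: Sequence[int], k: int) -> List[str]:
    """Filter method: rank features by chi-square score and keep the top k."""
    ranked = sorted(features, key=lambda name: chi_square_score(features[name], label), reverse=True)
    return ranked[:k]


def forward_wrapper(candidates: List[str], evaluate: Callable[[List[str]], float]) -> List[str]:
    """Wrapper method (greedy forward selection): repeatedly add the feature that
    most improves evaluate(subset); stop when no addition improves the score.
    In the study the evaluator is SVM-based; here it is supplied by the caller."""
    selected: List[str] = []
    best_score = float("-inf")
    remaining = list(candidates)
    while remaining:
        scores = {name: evaluate(selected + [name]) for name in remaining}
        name = max(scores, key=scores.get)
        if scores[name] <= best_score:
            break
        selected.append(name)
        best_score = scores[name]
        remaining.remove(name)
    return selected
```

The filter scores each feature independently of any classifier, which is cheap; the wrapper re-trains or re-scores a model for every candidate subset, which is costlier but accounts for interactions between features.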

Figure 3

Performance metric changes (%) with and without SMOTE balancing. AMI: acute myocardial infarction, CHF: congestive heart failure, COPD: chronic obstructive pulmonary disease, DB: type 2 diabetes, PN: pneumonia, SP: specificity, SN: sensitivity, KNN: k-nearest neighbor, LSVM: linear support vector machine, RF: random forest, NN: multi-layer neural network, SMOTE: Synthetic Minority Over-sampling Technique.
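SMOTE balances the classes by synthesising new minority-class samples rather than duplicating existing ones: each synthetic point lies on the line segment between a minority sample and one of its nearest minority-class neighbours. A minimal pure-Python sketch of that interpolation step follows; the function name `smote`, the default of `k = 5` neighbours, Euclidean distance, and the fixed `seed` are illustrative assumptions, since the study's SMOTE settings are not given here.

```python
import math
import random
from typing import List, Sequence

Point = List[float]


def smote(minority: Sequence[Point], n_synthetic: int, k: int = 5, seed: int = 0) -> List[Point]:
    """Generate n_synthetic samples by interpolating between a randomly chosen
    minority sample and one of its k nearest minority-class neighbours.
    Requires at least two minority samples."""
    rng = random.Random(seed)
    synthetic: List[Point] = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest neighbours of `base` among the other minority samples
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # uniform in [0, 1): position along the segment
        synthetic.append([b + gap * (q - b) for b, q in zip(base, nb)])
    return synthetic
```

To fully balance a training set, one would request `len(majority) - len(minority)` synthetic samples. Oversampling is usually applied to the training data only, so that evaluation data keep their original class distribution.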

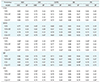

Table 1

Descriptive statistics for all common features

Values are presented as number (%) or mean ± standard deviation. For binary (Yes/No) variables, descriptive statistics are shown for the “Yes” level only.

CHF: congestive heart failure, AMI: acute myocardial infarction, COPD: chronic obstructive pulmonary disease, PN: pneumonia, DB: type 2 diabetes, LOS: length of stay.
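Table 1 reports binary variables as the count (%) of the “Yes” level and continuous variables such as LOS as mean ± standard deviation. A small sketch of that formatting follows, assuming one decimal place and the sample standard deviation (n - 1 denominator), neither of which is stated in the table note; the helper names `yes_count_percent` and `mean_sd` are hypothetical.

```python
import statistics
from typing import Sequence


def yes_count_percent(values: Sequence[str]) -> str:
    """Format a binary Yes/No variable as 'n (%)' for the 'Yes' level only."""
    n_yes = sum(1 for v in values if v == "Yes")
    return f"{n_yes} ({100 * n_yes / len(values):.1f})"


def mean_sd(values: Sequence[float]) -> str:
    """Format a continuous variable as 'mean ± SD' using the sample SD (n - 1)."""
    return f"{statistics.mean(values):.1f} ± {statistics.stdev(values):.1f}"
```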

Table 4

Performance comparison of predictive models

AMI: acute myocardial infarction, CHF: congestive heart failure, COPD: chronic obstructive pulmonary disease, DB: type 2 diabetes, PN: pneumonia, AUC: area under the curve, SP: specificity, KNN: k-nearest neighbor, LSVM: linear support vector machine, RF: random forest, NN: multi-layer neural network, WR: support vector machine-based wrapper method, WR+ST: wrapper method with SMOTE (Synthetic Minority Over-sampling Technique), CQ: chi-square filtering method, CQ+ST: chi-square with SMOTE.

ᵃBest model based on AUC. ᵇBest model based on specificity.
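Tables 4 and 5 compare models on AUC, specificity, and (in Table 5) sensitivity, and flag the best model per metric. The sketch below shows how these metrics are conventionally computed from true labels, predicted labels, and predicted scores, with 1 as the positive class, plus a helper that picks the best model label for a chosen metric. The helper names and the rank-based (Mann-Whitney) AUC formulation are illustrative choices, not the authors' code.

```python
from typing import Dict, Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """SN = TP / (TP + FN); SP = TN / (TN + FP), with 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random positive scores higher than a
    random negative (ties count one half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def best_by(results: Dict[str, Dict[str, float]], metric: str) -> str:
    """Return the model label (e.g. 'RF+WR+ST') with the highest value of `metric`."""
    return max(results, key=lambda label: results[label][metric])
```

Because AUC and specificity can disagree, `best_by` may return different labels for each metric, which is why the table marks the AUC winner and the specificity winner separately.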

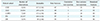

Table 5

Best performing model based on several criteria

AMI: acute myocardial infarction, CHF: congestive heart failure, COPD: chronic obstructive pulmonary disease, DB: type 2 diabetes, PN: pneumonia, AUC: area under the curve, SP: specificity, SN: sensitivity, LSVM: linear support vector machine, RF: random forest, NN: multi-layer neural network, KNN: k-nearest neighbor, WR: support vector machine-based wrapper method, WR+ST: wrapper method with SMOTE (Synthetic Minority Over-sampling Technique), CQ: chi-square filtering method, CQ+ST: chi-square with SMOTE.

For example, in the label a+b+c, a = machine learning method, b = feature selection technique, and c = presence of SMOTE balancing; LSVM+CQ+ST = linear support vector machine with chi-square feature selection and SMOTE data balancing.
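The a+b+c naming scheme can be decoded mechanically. The hypothetical helper `parse_label` below does so using the abbreviations defined in the footnote; it assumes the SMOTE component `ST` is simply omitted when no balancing was applied (e.g. `RF+WR`), which the footnote implies but does not state.

```python
from typing import Dict

# Abbreviations as defined in the table footnote.
METHODS = {"KNN": "k-nearest neighbor", "LSVM": "linear support vector machine",
           "RF": "random forest", "NN": "multi-layer neural network"}
SELECTORS = {"WR": "support vector machine-based wrapper method",
             "CQ": "chi-square filtering method"}


def parse_label(label: str) -> Dict[str, object]:
    """Split an a+b+c label into method, feature selection, and SMOTE flag.
    Raises ValueError for a malformed label, KeyError for an unknown abbreviation."""
    parts = label.split("+")
    if len(parts) not in (2, 3) or (len(parts) == 3 and parts[2] != "ST"):
        raise ValueError(f"unrecognised model label: {label!r}")
    return {
        "method": METHODS[parts[0]],
        "feature_selection": SELECTORS[parts[1]],
        "smote": len(parts) == 3,
    }
```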
