Meetings of experts, such as task forces or standing committees for special issues, often make important decisions. However, such decision-making systems can be costly and time-consuming [1,2,3], and they cannot respond quickly. The rapid advancement of information technology (IT) services can help to overcome this difficulty.

In this paper, we propose a new system, called the Rapid Opinion Collecting System (ROCS), that utilizes public IT services ranging from short message services (SMS) and e-mails to cloud services such as Google Forms [4,5]. The objective of this study was to demonstrate the efficiency of the ROCS by analyzing response rates and times.

This study was approved by the Institutional Review Board (IRB) of Asan Medical Center (No. 2014-0690). The requirement for informed consent was waived by the IRB. The study was conducted from November 19, 2012 to December 30, 2012 (6 weeks). The study participants were 10 board-certified emergency physicians who worked in four different regional emergency centers.

Another board-certified emergency physician, who was a professor at a general hospital, was assigned as the moderator. All 20 cases were designed by analyzing emergency room medical records. Each case was anonymized by removing personal identifiers, such as the patient identification number and name, and was given a random number. The moderator activated each case according to its random number. Sixteen cases were activated on weekdays and four cases on weekend days. Among the 16 weekday cases, four were activated in the evening (from 6 PM to 11 PM).

When activating a case, the moderator sent an SMS via mobile phone and an e-mail using Google Forms [4,5]. The overall procedure of the ROCS is explained in Figure 1. The receivers could answer the survey by simply replying to the e-mail or by logging in to the Google Forms website. Google Forms summarized the survey results and response times automatically, which shortened the time required for the analysis.

The activation time was defined as the time at which the e-mail was sent. A timely response was defined as one that was received within 2 hours of activation. During the study, the 10 participating physicians had no information on the study period, the case activation times, the total number of activations, or the criteria for a timely response.

IBM SPSS ver. 20 (IBM Corporation, Armonk, NY, USA) was used for all statistical analyses. The chi-square test, Fisher exact test, and Mann-Whitney U-test were used. We considered p<0.05 to be statistically significant.
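The timely-response definition above (a response received within 2 hours of activation) can be illustrated with a minimal Python sketch. This is not the authors' procedure, since Google Forms summarized the response times automatically in the study. The function names, the inclusive 2-hour boundary, the use of all expected responses as the denominator for the timely rate, and the example timestamps are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Threshold from the text: timely means received within 2 hours of activation.
# Treating exactly 2 hours as timely is an assumption.
TIMELY_WINDOW = timedelta(hours=2)


def is_timely(activated_at, responded_at, window=TIMELY_WINDOW):
    """Return True if a response arrived within `window` of activation.

    `responded_at` is None when no response was received.
    """
    if responded_at is None:
        return False
    return timedelta(0) <= responded_at - activated_at <= window


def summarize(activated_at, response_times):
    """Return overall and timely response rates (percent) for one case.

    Both rates use the number of expected responses as the denominator,
    which is an assumption about how the rates are defined.
    """
    expected = len(response_times)
    received = sum(t is not None for t in response_times)
    timely = sum(is_timely(activated_at, t) for t in response_times)
    return {
        "response_rate": 100.0 * received / expected,
        "timely_rate": 100.0 * timely / expected,
    }


# Hypothetical data: one evening activation and four invited physicians.
activation = datetime(2012, 11, 19, 18, 0)
responses = [
    datetime(2012, 11, 19, 18, 20),  # 20 minutes after activation: timely
    datetime(2012, 11, 19, 19, 59),  # just under 2 hours: timely
    datetime(2012, 11, 19, 21, 30),  # 3.5 hours: received but not timely
    None,                            # no response
]
summary = summarize(activation, responses)
print(summary)
```

With these hypothetical timestamps, three of the four responses are received and two of them fall inside the 2-hour window.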

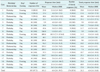

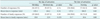

The ROCS successfully collected professional opinions on a public health issue. Table 1 shows the details of the ROCS responses received in our study. The overall response rate was 97.5%, and the timely response rate was 87.0%. The response rates were high for all cases, regardless of whether they were activated on a weekend day or in the evening (Table 2). Only the timely response rate in the evening was relatively low (75.0%, p=0.02), as shown in Table 2. Although a method for promoting timely responses in the evening is necessary, the feasibility of the ROCS for collecting expert opinions in a relatively short time was demonstrated.
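A comparison of timely response rates between two groups of activations, such as evening versus other times, can be tested with a Fisher exact test, one of the tests named in the methods. The sketch below is a plain-Python illustration of the two-sided test on a 2x2 table. It is not the SPSS procedure the authors used, the text does not state which test produced the reported p-value, and the counts in the example are hypothetical rather than taken from Table 2.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact test p-value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # A small relative tolerance guards against floating-point ties.
    return sum(
        p
        for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-7)
    )


# Hypothetical counts (rows: evening, other; columns: timely, not timely).
p_value = fisher_exact_two_sided(1, 3, 3, 1)
print(round(p_value, 4))
```

For this small hypothetical table, the admissible tables have probabilities 1/70, 16/70, 36/70, 16/70, and 1/70, so the two-sided p-value is 34/70, or about 0.4857.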

There have been few studies using online survey tools, such as Google Forms [6,7,8,9,10]. As far as we know, this study is the first attempt to apply an online tool to the rapid collection of expert opinions on a public health issue. By using well-known IT services, such as SMS, e-mail, and Google Forms, the ROCS can collect experts' opinions in a relatively short time compared with traditional off-line meetings. This system also operates at almost zero cost and can provide a prompt response to a public health issue. The proposed ROCS may be applied to various situations that require an emergency response, such as bioterrorism attacks, disasters, and epidemic outbreaks [11,12,13,14,15].

This study had several limitations. First, we did not conduct a test from 11 PM to 8 AM, and the response rates during those hours might have been lower than those observed in this study. Second, SMS and e-mails are one-way push services. Because there is no confirmation of receipt, transmission failures could have occurred. There is also a possibility that the quality of the responses declined. Third, the definition of a timely response was set arbitrarily by the authors. Finally, all study participants were emergency physicians. Therefore, the results of the study might not be generalizable to professionals with standard working hours or days.

In conclusion, the potential of the ROCS was demonstrated; it showed a high response rate and a relatively short response time. The proposed system may help to overcome the limitations of the traditional off-line expert opinion aggregation model.

Acknowledgments

This work was supported by grants from the Ministry of Health and Welfare–Korea Centers for Disease Control & Prevention (Grant No. 2831-304-320-01).

References

1. World Health Organization. The World Health Report 2002. Geneva, Switzerland: World Health Organization;2002.

2. Sethi D. European report on child injury prevention. Copenhagen, Denmark: World Health Organization;2008.

3. Peden MM. World report on child injury prevention. Geneva, Switzerland: World Health Organization;2008.

4. Rehani MM, Berris T. International Atomic Energy Agency study with referring physicians on patient radiation exposure and its tracking: a prospective survey using a web-based questionnaire. BMJ Open. 2012; 2(5):e001425.

5. Google. Google Forms [Internet]. [place unknown]: Google;2015. [cited 2017 Apr 1]. Available from: https://www.google.com/forms/about/.

6. de la Fuente Valentin L, Pardo A, Delgado Kloos C. Using third party services to adapt learning material: a case study with Google Forms. In: Cress U, Dimitrova V, Specht M, editors. Learning in the synergy of multiple disciplines. Heidelberg, Germany: Springer;2009. p. 744–750.

7. Rehani MM, Berris T. Radiation exposure tracking: survey of unique patient identification number in 40 countries. AJR Am J Roentgenol. 2013; 200(4):776–779.

8. Gao F. A case study of using a social annotation tool to support collaborative learning. Internet High Educ. 2013; 17:76–83.

9. Reyna J. Google Docs in higher education settings: a preliminary report. In: Proceedings of World Conference on Educational Media and Technology; 2010 Jun 29; Toronto, Canada. p. 1566–1572.

10. Peacock JG, Grande JP. An online app platform enhances collaborative medical student group learning and classroom management. Med Teach. 2016; 38(2):174–180.

11. Irvin CB, Nouhan PP, Rice K. Syndromic analysis of computerized emergency department patients' chief complaints: an opportunity for bioterrorism and influenza surveillance. Ann Emerg Med. 2003; 41(4):447–452.

12. Bush LM, Abrams BH, Beall A, Johnson CC. Index case of fatal inhalational anthrax due to bioterrorism in the United States. N Engl J Med. 2001; 345(22):1607–1610.

13. Chretien JP, Tomich NE, Gaydos JC, Kelley PW. Real-time public health surveillance for emergency preparedness. Am J Public Health. 2009; 99(8):1360–1363.
